Adding L2 regularization to a multi-layer or complex neural network can help reduce over-fitting. Is there an easy way to add L2 regularization in TensorFlow? In this tutorial, we will discuss this topic.

What is L2 regularization?

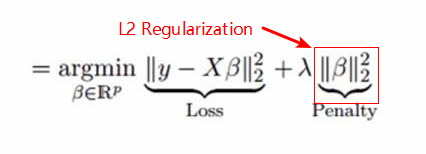

L2 regularization is often added to the loss function as a penalty term, which helps avoid over-fitting when we train a model.

where λ is a small coefficient, for example 0.001 or 0.01.

Linear regression with an L2 penalty is known as ridge regression. All weights in the model should be included in the L2 penalty.
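For reference, the penalized loss is commonly written as follows. This is a sketch of the usual textbook form; the symbols $L_{\text{total}}$ and $L_{\text{data}}$ are notation assumed here, and some definitions put an extra factor of $\tfrac{1}{2}$ in front of the sum.

$$
L_{\text{total}} = L_{\text{data}} + \lambda \sum_{i} w_i^{2}
$$

Here $w_i$ runs over every weight in the model, and a larger λ pushes the weights more strongly toward zero.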

How to add L2 regularization for multi-layer neural networks?

To use L2 regularization in a neural network, the first step is to identify all of its weights. Only the weights are used in the L2 penalty; the biases are left out.

Dropout can also reduce over-fitting, but we do not recommend relying on it here: if you have created customized layers, you will still have to add L2 regularization for their weights.

How to get all trainable weights in a complex neural network?

In TensorFlow, we can easily list all trainable variables. Untrainable variables are not needed when adding L2 regularization. This tutorial will help you.

List All Trainable and Untrainable Variables in TensorFlow

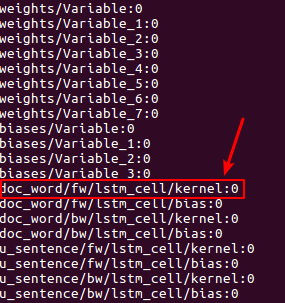

After listing all trainable variables, you can check all weights and biases. Here is an example:
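As a minimal sketch of what such a listing can look like, the snippet below builds a tiny graph and prints its trainable variables. The variable names (`layer1/weight`, `layer1/bias`, `global_step`) and shapes are hypothetical, and the TF1-style API is reached through `tf.compat.v1` on the assumption that you may be running TensorFlow 2.x.

```python
import tensorflow as tf

tf1 = tf.compat.v1  # TF1-style graph API, also shipped with TensorFlow 2.x

with tf.Graph().as_default():
    # Hypothetical variables, for illustration only.
    with tf1.variable_scope("layer1"):
        tf1.get_variable("weight", shape=[4, 8])
        tf1.get_variable("bias", shape=[8], initializer=tf1.zeros_initializer())
    # A non-trainable variable (e.g. a step counter) is excluded from
    # trainable_variables() but still appears in global_variables().
    tf1.get_variable("global_step", shape=[], dtype=tf.int64,
                     initializer=tf1.zeros_initializer(), trainable=False)

    trainable = [(v.name, v.shape.as_list()) for v in tf1.trainable_variables()]
    all_names = [v.name for v in tf1.global_variables()]

for name, shape in trainable:
    print(name, shape)
```

Only the two `layer1` variables are trainable here; `global_step` shows up in `all_names` but not in `trainable`.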

We can see that the names of bias variables usually contain the string ‘bias’, while the names of weight variables do not. This lets us exclude the bias variables from the list of trainable variables.

Here is a TensorFlow tip for building models: when you create weight and bias variables for a neural network, give every bias variable a name that contains the string ‘bias’, and make sure that no weight variable name contains it.
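One way to follow this naming tip is sketched below. The helper `make_dense_variables` and the scope names `hidden` and `output` are hypothetical and used only for illustration; the point is that the substring test on `v.name` cleanly separates weights from biases.

```python
import tensorflow as tf

tf1 = tf.compat.v1  # TF1-style graph API, also shipped with TensorFlow 2.x


def make_dense_variables(scope, n_in, n_out):
    """Create a weight/bias pair; only the bias name contains 'bias'."""
    with tf1.variable_scope(scope):
        weight = tf1.get_variable("weight", shape=[n_in, n_out])
        bias = tf1.get_variable("bias", shape=[n_out],
                                initializer=tf1.zeros_initializer())
    return weight, bias


with tf.Graph().as_default():
    make_dense_variables("hidden", 4, 8)
    make_dense_variables("output", 8, 2)

    # Split the trainable variables by name.
    weight_names = [v.name for v in tf1.trainable_variables() if "bias" not in v.name]
    bias_names = [v.name for v in tf1.trainable_variables() if "bias" in v.name]

print(weight_names)
print(bias_names)
```

Note that the test runs against the full variable name, including its scope, so a scope whose name happens to contain `bias` would cause the weights inside it to be skipped as well.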

Implement L2 regularization

After getting all weight variables in the network, we can calculate the L2 penalty very easily.

Here is an example:

```python
final_loss = tf.reduce_mean(losses + 0.001 * tf.reduce_sum([ tf.nn.l2_loss(n) for n in tf.trainable_variables() if 'bias' not in n.name]))
```

Here we set λ = 0.001. You can use this code on various neural networks, no matter how simple or complex they are.
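To check the penalty on concrete numbers, here is a small self-contained sketch. The variable values, the scope name `dense`, and the stand-in `losses` tensor are made up for illustration, and `tf.compat.v1` is used on the assumption that you may be running TensorFlow 2.x. Keep in mind that `tf.nn.l2_loss(t)` computes `sum(t ** 2) / 2`, so the effective penalty is λ/2 times the sum of squared weights.

```python
import tensorflow as tf

tf1 = tf.compat.v1  # TF1-style graph API, also shipped with TensorFlow 2.x
LAMBDA = 0.001

with tf.Graph().as_default():
    # Hypothetical layer with fixed values so the result can be checked by hand.
    with tf1.variable_scope("dense"):
        weight = tf1.get_variable("weight",
                                  initializer=tf.constant([[1.0, 2.0], [3.0, 4.0]]))
        bias = tf1.get_variable("bias", initializer=tf.constant([10.0, 10.0]))
    losses = tf.constant([0.5, 1.5])  # stand-in per-example losses

    # L2 terms for every trainable variable whose name does not contain 'bias'.
    l2_terms = [tf.nn.l2_loss(v) for v in tf1.trainable_variables()
                if "bias" not in v.name]
    penalty = LAMBDA * tf.add_n(l2_terms)
    final_loss = tf.reduce_mean(losses) + penalty

    with tf1.Session() as sess:
        sess.run(tf1.global_variables_initializer())
        penalty_value, loss_value = sess.run([penalty, final_loss])

print(penalty_value, loss_value)
```

The weight contributes (1 + 4 + 9 + 16) / 2 = 15 to the sum, so the penalty is 0.001 × 15 = 0.015, and the bias values do not affect it. Because the penalty is a scalar, adding it after `tf.reduce_mean(losses)` gives the same result as adding it to every per-example loss before taking the mean.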