Tree LSTM model is widely used in many deep learning fields. It is often used to process tree structure data.

For example:

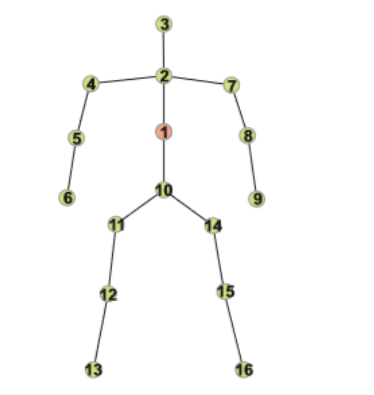

Action recognition is tree structure data.

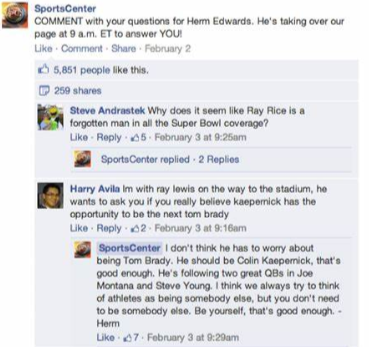

Social-media conversations are also tree structure data. One post contains some replies, a reply also contians some replies.

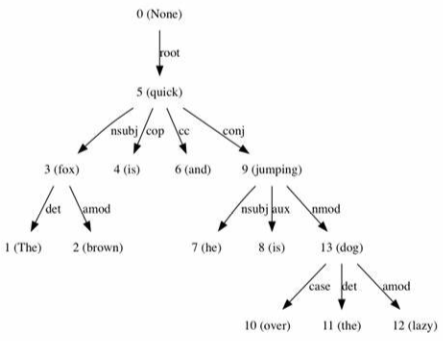

Non-tree structure data also can be converted to tree strucutre, for example, use dependency parsing technology to convert a sententce to a tree.

We have introduced the basic LSTM, Tree LSTM is much similar to it.

Understand Long Short-Term Memory Network(LSTM) – LSTM Tutorial

The structure of basic lstm likes:

We can find Ct and ht are only determined by one Ct-1 and ht-1. However, in Tree LSTM, each Ct and ht are determined by multiple Ct-1, Ct-2…. and ht-1, ht-2…, which is the key difference between classic lstm and tree lstm.

What is Tree LSTM?

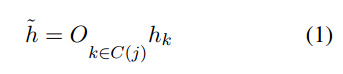

A Tree LSTM Model can be defined as:

where C(j) denotes the set of children of node j and Ok is an operator that acts on the hidden vector hk of child k to output ![]() .

.

Then we can define Tree LSTM equations as follows:

From equations above, we can find:

- The input gate and output gate are calculated base on x and

- The forget gate is calculated based on x and each hk, which is different from the input and forget gate.

How to get ![]() ?

?

We shoud get ![]() by its child nodes, there are some ways to get it.

by its child nodes, there are some ways to get it.

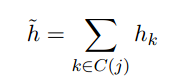

Child Sum Tree Unit

The child-sum unit involves using sum of all hk vectors which means O = ∑. Therefore

where k this the number of child nodes of a parent node.

Limited: This method views weights of child nodes equally.

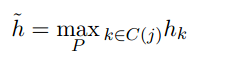

Child Max-Pooling Unit

The child max-pooling unit involves using the maximum of all hk vectors across a dimension.

Limited: This method only picks maximum value.

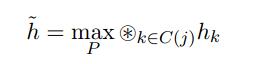

Child Convolve + MaxPooling Tree Unit

Child convolve uses convolution operation of the set of child hidden vectors i.e. O=⊗ where ⊗ denotes vector convolution operation. As a normal tree node can have any number of child nodes, convolution operation using all child nodes requires a max-pooling operation to preserve the dimension of ![]() .

.

where ⊗ denotes vector convolution operation and maxP denotes max pooling operation.

Limited: This method only picks the convoluted features with maximum value.