Convolutional neural networks (CNNs) are widely used in deep learning. In this tutorial, we will build a CNN for MNIST handwritten digit classification, which will help you understand how to use CNNs in deep learning.

The structure of a CNN

The basic structure of a CNN is a stack of Convolution+ReLU and pooling layers, followed by fully connected layers that produce one score per class.

We will use this structure to build a CNN for MNIST handwritten digit classification.

Load MNIST data

First, we load the MNIST data.

    import os

    import tensorflow as tf  # this tutorial uses the TensorFlow 1.x API
    from tensorflow.examples.tutorials.mnist import input_data

    # Download (if needed) and load MNIST into ./MNIST-data/; labels are one-hot vectors
    mnist = input_data.read_data_sets(os.getcwd() + "/MNIST-data/", one_hot=True)
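With `one_hot=True`, each digit label is stored as a 10-element vector with a 1 at the digit's index and 0 elsewhere. Here is a minimal pure-Python sketch of that encoding; the `one_hot` helper is hypothetical and only illustrates the format, it is not part of `input_data`:

```python
def one_hot(label, num_classes=10):
    """Return a list of length num_classes with 1.0 at index `label` (illustrative helper)."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

print(one_hot(3))
# [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```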

Next, we prepare the input placeholders for the CNN.

Prepare data

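    # Each MNIST image is a flat vector of 28 * 28 = 784 pixels
    x = tf.placeholder(tf.float32, [None, 784])
    # One-hot labels for the 10 digit classes
    y_label = tf.placeholder(tf.float32, [None, 10])
    # Reshape to [batch, height, width, channels] for tf.nn.conv2d()
    X_images = tf.reshape(x, [-1, 28, 28, 1])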

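This reshapes each flat 784-pixel vector into a 28x28 image with a single (grayscale) channel, in the [batch, height, width, channels] layout that tf.nn.conv2d() expects by default, so X_images can be fed to the convolutional layers.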
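tf.reshape() reads the 784 values in row-major order, so pixel (row, col) of the 28x28 image comes from index `row * 28 + col` of the flat vector. The sketch below reproduces that mapping in pure Python for a single image; `reshape_flat_image` is an illustrative helper, not a TensorFlow function:

```python
def reshape_flat_image(flat, height=28, width=28):
    """Row-major reshape of a flat pixel list into height x width x 1 (one image, no batch axis)."""
    assert len(flat) == height * width
    return [[[flat[row * width + col]] for col in range(width)] for row in range(height)]

flat = list(range(784))          # stand-in pixel values 0..783
image = reshape_flat_image(flat)
print(len(image), len(image[0]), len(image[0][0]))  # 28 28 1
print(image[1][0])               # [28]: first pixel of the second row
```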

Create some hyperparameters

We will use the following hyperparameters in this example.

    learning_rate = 1e-3
    total_steps = 1000
    category_num = 10
    steps_per_validate = 15
    steps_per_test = 15
    batch_size = 64
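These values determine how much data the model sees during training. The training loop later in this tutorial runs `total_steps + 1` steps and validates whenever `step % steps_per_validate == 0`. Assuming the default `read_data_sets()` split of 55,000 training images (an assumption about the loader's defaults), a quick calculation:

```python
total_steps = 1000
batch_size = 64
steps_per_validate = 15
train_size = 55000  # assumption: default training split of read_data_sets()

steps_run = total_steps + 1  # the loop iterates over range(total_steps + 1)
examples_seen = steps_run * batch_size
epochs = examples_seen / train_size
validations = len([s for s in range(steps_run) if s % steps_per_validate == 0])

print(examples_seen)     # 64064
print(round(epochs, 2))  # 1.16
print(validations)       # 67
```

So this configuration trains for only a little more than one pass over the training set.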

Now we can start building the CNN.

We will use TensorFlow's tf.nn.conv2d() and tf.nn.max_pool(). You can learn how to use them in these two tutorials:

Understand tf.nn.conv2d(): Compute a 2-D Convolution in TensorFlow

Understand TensorFlow tf.nn.max_pool(): Implement Max Pooling for Convolutional Network

How to implement Convolution+ReLU

Convolution+ReLU is the basic operation of a CNN. We can implement it with tf.nn.conv2d() and tf.nn.relu(). Here is an example:

    # 5x5 kernels, 1 input channel, 32 output channels
    conv1_Weights = tf.Variable(tf.truncated_normal([5, 5, 1, 32], stddev=0.1), name='conv1_Weights')
    conv1_biases = tf.Variable(tf.constant(0.1, shape=[32]), name='conv1_biases')
    # Output shape: [batch, 28, 28, 32] (SAME padding with stride 1 keeps 28x28)
    conv1_conv2d = tf.nn.conv2d(X_images, conv1_Weights, strides=[1, 1, 1, 1], padding='SAME') + conv1_biases
    conv1_activated = tf.nn.relu(conv1_conv2d)
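To see what tf.nn.conv2d() with `padding='SAME'` and stride 1 computes, here is a minimal single-channel sketch in pure Python. Like TensorFlow, it computes a cross-correlation (the kernel is not flipped) and zero-pads the border so the output keeps the input size. It assumes an odd kernel size and one input and one output channel; in the layer above, each of the 32 output channels is one such kernel summed over the input channels. `conv2d_same` and `relu` are illustrative helpers, not TensorFlow APIs:

```python
def conv2d_same(image, kernel, bias=0.0):
    """Stride-1, zero-padded 2-D cross-correlation for one channel (odd kernel size)."""
    h, w = len(image), len(image[0])
    k = len(kernel)
    pad = k // 2  # SAME padding for an odd kernel with stride 1
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = bias
            for di in range(k):
                for dj in range(k):
                    r, c = i + di - pad, j + dj - pad
                    if 0 <= r < h and 0 <= c < w:  # positions outside count as zero padding
                        total += image[r][c] * kernel[di][dj]
            out[i][j] = total
    return out

def relu(feature_map):
    """Element-wise max(0, x), as tf.nn.relu() does."""
    return [[max(0.0, v) for v in row] for row in feature_map]

image = [[1, 2, 0],
         [0, 1, 3],
         [2, 0, 1]]
kernel = [[0, 0, 0],
          [0, 1, 0],
          [0, 0, -1]]  # computes image[i][j] - image[i+1][j+1]
result = relu(conv2d_same(image, kernel))
print(result)  # [[0.0, 0.0, 0.0], [0.0, 0.0, 3.0], [2.0, 0.0, 1.0]]
```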

How to implement pooling

In this tutorial, we use max pooling. Here is an example:

    # 2x2 max pooling with stride 2 halves height and width: [batch, 14, 14, 32]
    conv1_pooled = tf.nn.max_pool(conv1_activated, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
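Max pooling with a 2x2 window and stride 2 keeps only the largest value in each non-overlapping 2x2 block, halving height and width. A pure-Python sketch for one channel (`max_pool_2x2` is an illustrative helper); for odd sizes it mimics SAME padding by letting the last window be partial:

```python
import math

def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2 on one channel; edge windows may be partial (SAME)."""
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for i in range(math.ceil(h / 2)):
        row = []
        for j in range(math.ceil(w / 2)):
            window = [feature_map[r][c]
                      for r in range(2 * i, min(2 * i + 2, h))
                      for c in range(2 * j, min(2 * j + 2, w))]
            row.append(max(window))
        out.append(row)
    return out

fm = [[1, 3, 2, 0],
      [4, 2, 1, 5],
      [0, 1, 7, 2],
      [6, 2, 3, 1]]
print(max_pool_2x2(fm))  # [[4, 5], [6, 7]]
```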

Then we repeat the Convolution+ReLU+pooling operations, this time with 64 output channels:

    # 5x5 kernels, 32 input channels, 64 output channels
    conv2_Weights = tf.Variable(tf.truncated_normal([5, 5, 32, 64], stddev=0.1), name='conv2_Weights')
    conv2_biases = tf.Variable(tf.constant(0.1, shape=[64]), name='conv2_biases')
    # Output shape: [batch, 14, 14, 64]
    conv2_conv2d = tf.nn.conv2d(conv1_pooled, conv2_Weights, strides=[1, 1, 1, 1], padding='SAME') + conv2_biases
    conv2_activated = tf.nn.relu(conv2_conv2d)
    # Output shape: [batch, 7, 7, 64]
    conv2_pooled = tf.nn.max_pool(conv2_activated, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
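The flattened size `7 * 7 * 64` used by the fully connected layer comes from tracking shapes through both stages. With `padding='SAME'`, TensorFlow's output spatial size is `ceil(input_size / stride)`, so the stride-1 convolutions keep the size and each stride-2 pooling halves it. A short sketch of that bookkeeping (`same_output_size` is an illustrative helper):

```python
import math

def same_output_size(size, stride):
    """Spatial output size for padding='SAME': ceil(size / stride)."""
    return math.ceil(size / stride)

size, channels = 28, 1
layers = [("conv1", 1, 32), ("pool1", 2, None), ("conv2", 1, 64), ("pool2", 2, None)]
for name, stride, out_channels in layers:
    size = same_output_size(size, stride)
    if out_channels is not None:  # convolutions set the channel count; pooling keeps it
        channels = out_channels
    print(name, [size, size, channels])

print("flattened:", size * size * channels)
# conv1 [28, 28, 32]
# pool1 [14, 14, 32]
# conv2 [14, 14, 64]
# pool2 [7, 7, 64]
# flattened: 3136
```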

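How to implement a fully connected layer

First, flatten the pooled feature maps into one vector per image. Then a fully connected layer (matrix multiplication plus bias, followed by ReLU) can be created as follows: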

    # After two 2x2 poolings, 28x28 becomes 7x7 with 64 channels
    dim_w1 = 7 * 7 * 64
    connect1_flat = tf.reshape(conv2_pooled, [-1, dim_w1])
    connect1_Weights = tf.Variable(tf.truncated_normal([dim_w1, 1024], stddev=0.1), name='connect1_Weights')
    connect1_biases = tf.Variable(tf.constant(0.1, shape=[1024]), name='connect1_biases')
    connect1_Wx_plus_b = tf.add(tf.matmul(connect1_flat, connect1_Weights), connect1_biases)
    connect1_activated = tf.nn.relu(connect1_Wx_plus_b)
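The `tf.add(tf.matmul(...), ...)` line computes, for each example, every output unit as a weighted sum of all inputs plus a bias. A pure-Python sketch of the same computation on a tiny batch (`dense` and `relu` are illustrative helpers, and the numbers are arbitrary):

```python
def dense(inputs, weights, biases):
    """inputs: [batch][in_dim], weights: [in_dim][out_dim], biases: [out_dim] -> [batch][out_dim]."""
    return [[sum(row[k] * weights[k][j] for k in range(len(row))) + biases[j]
             for j in range(len(biases))]
            for row in inputs]

def relu(matrix):
    """Element-wise max(0, x)."""
    return [[max(0.0, v) for v in row] for row in matrix]

inputs = [[1.0, 2.0, 3.0]]   # batch of one example with 3 features
weights = [[1.0, -1.0],
           [0.0, 1.0],
           [1.0, -1.0]]      # 3 inputs -> 2 outputs
biases = [0.5, 0.5]
print(relu(dense(inputs, weights, biases)))  # [[4.5, 0.0]]
```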

Then a second fully connected layer maps the 1024 features to 10 outputs, one score (logit) per digit class:

    # Fully connected layer 2: 1024 features -> 10 class scores (logits)
    connect2_Weights = tf.Variable(tf.truncated_normal([1024, 10], stddev=0.1), name='connect2_Weights')
    connect2_biases = tf.Variable(tf.constant(0.1, shape=[10]), name='connect2_biases')
    y = tf.add(tf.matmul(connect1_activated, connect2_Weights), connect2_biases)
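Counting weights plus biases for each layer defined above shows where the model's capacity lives: the first fully connected layer holds roughly 98% of all trainable parameters. A small sketch of that count:

```python
# (name, weight shape) for each layer above; each layer also has one bias per output unit
layers = [
    ("conv1", [5, 5, 1, 32]),
    ("conv2", [5, 5, 32, 64]),
    ("connect1", [7 * 7 * 64, 1024]),
    ("connect2", [1024, 10]),
]

def count_params(weight_shape):
    """Weights (product of the shape) plus biases (size of the last dimension)."""
    n = 1
    for d in weight_shape:
        n *= d
    return n + weight_shape[-1]

total = 0
for name, shape in layers:
    n = count_params(shape)
    total += n
    print(name, n)
print("total", total)
# conv1 832
# conv2 51264
# connect1 3212288
# connect2 10250
# total 3274634
```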

Define the loss and make predictions

    # Loss: softmax cross-entropy between logits and one-hot labels, averaged over the batch
    cross_entropy = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits(labels=y_label, logits=y))
    train = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cross_entropy)

    # Prediction: compare the highest-scoring class with the true class
    correct_prediction = tf.equal(tf.argmax(y, axis=1), tf.argmax(y_label, axis=1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
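tf.nn.softmax_cross_entropy_with_logits() applies softmax to the logits and then computes cross-entropy against the one-hot labels, while `accuracy` is the fraction of examples whose highest-scoring class matches the label. Below is a pure-Python sketch of both on a tiny hand-made batch with 3 classes instead of 10 for brevity; it averages the per-example losses over the batch. The helper names are illustrative, not TensorFlow APIs:

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]

def cross_entropy(logits, one_hot_label):
    """-sum(label * log(softmax(logits))) for one example."""
    probs = softmax(logits)
    return -sum(t * math.log(p) for t, p in zip(one_hot_label, probs))

def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])

logits = [[2.0, 1.0, 0.1], [0.5, 2.5, 0.2]]  # 2 examples, 3 classes
labels = [[1, 0, 0], [0, 0, 1]]

loss = sum(cross_entropy(l, t) for l, t in zip(logits, labels)) / len(logits)
accuracy = sum(argmax(l) == argmax(t) for l, t in zip(logits, labels)) / len(logits)
print(loss, accuracy)
```

The first example is classified correctly and the second is not, so the accuracy is 0.5; the confidently wrong second example contributes most of the loss.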

Finally, we can train the model.

Train the CNN

    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)
        test_acc = 0.0
        dev_acc = 0.0
        better_acc = 0.0  # best validation accuracy seen so far

        # Training loop: one mini-batch per step
        for step in range(total_steps + 1):
            batch_x, batch_y = mnist.train.next_batch(batch_size)
            _, acc = sess.run([train, accuracy], feed_dict={x: batch_x, y_label: batch_y})
            print("train step=" + str(step) + " accuracy = " + str(acc))

            # Evaluate on the validation set every steps_per_validate steps
            if step % steps_per_validate == 0:
                dev_x, dev_y = mnist.validation.images, mnist.validation.labels
                dev_acc = sess.run(accuracy, feed_dict={x: dev_x, y_label: dev_y})
                print("dev step=" + str(step) + " accuracy = " + str(dev_acc))

                # Evaluate on the test set only when validation accuracy improves
                if better_acc < dev_acc:
                    test_x, test_y = mnist.test.images, mnist.test.labels
                    test_acc = sess.run(accuracy, feed_dict={x: test_x, y_label: test_y})
                    print("test step=" + str(step) + " accuracy = " + str(test_acc))
                    better_acc = dev_acc

        # Final validation accuracy after training
        dev_x, dev_y = mnist.validation.images, mnist.validation.labels
        dev_acc = sess.run(accuracy, feed_dict={x: dev_x, y_label: dev_y})
        print("final dev accuracy = " + str(dev_acc))

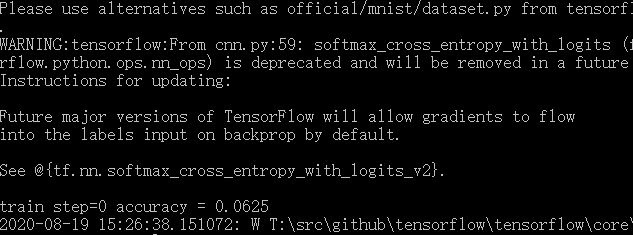

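Run this code to get the training result. It prints the training accuracy at every step, the validation accuracy every `steps_per_validate` steps, and the test accuracy whenever the validation accuracy improves.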
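Because the test set is evaluated only when validation accuracy beats the best value so far, the last printed test accuracy is the one measured at the step with the highest validation accuracy (the model weights themselves are not saved). A pure-Python sketch of that selection rule; `steps_with_test_eval` is a hypothetical helper, and the validation accuracies below are made-up inputs for illustration, not results from this model:

```python
def steps_with_test_eval(dev_accs, steps_per_validate=15):
    """Return the steps at which the training loop would also evaluate on the test set.

    dev_accs[i] is the validation accuracy measured at step i * steps_per_validate.
    A test evaluation happens only when validation accuracy strictly beats the best so far.
    """
    better_acc = 0.0
    test_steps = []
    for i, dev_acc in enumerate(dev_accs):
        if better_acc < dev_acc:
            test_steps.append(i * steps_per_validate)
            better_acc = dev_acc
    return test_steps

# Hypothetical validation accuracies, for illustration only
print(steps_with_test_eval([0.10, 0.85, 0.80, 0.91, 0.91, 0.93]))  # [0, 15, 45, 75]
```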