Leaky ReLU is an activation function used in deep learning. For example, it is commonly used in graph attention networks. In this tutorial, we will introduce it for deep learning beginners.

What is Leaky ReLU?

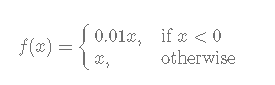

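Mathematically, Leaky ReLU is defined as follows (Maas et al., 2013):

$$
f(x) = \begin{cases} x, & x > 0 \\ \alpha x, & x \le 0 \end{cases}
$$

Here $\alpha$ is a small positive constant that sets the slope for negative inputs; Maas et al. (2013) used $\alpha = 0.01$. A standard ReLU outputs 0 for every negative input. Leaky ReLU instead lets a small, scaled signal "leak" through.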
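To make the definition concrete, here is a minimal sketch in plain Python. The function name `leaky_relu` and the default `alpha=0.01` are our own illustrative choices. The default matches the negative slope reported by Maas et al.

```python
def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: return x for positive inputs, alpha * x otherwise."""
    # Positive inputs pass through unchanged; non-positive inputs are scaled
    # by alpha instead of being set to 0 as in a standard ReLU.
    return x if x > 0 else alpha * x


# Usage: apply the function element-wise to a few sample inputs.
inputs = [-2.0, -0.5, 0.0, 1.5]
outputs = [leaky_relu(v) for v in inputs]
print(outputs)
```

Note that changing `alpha` only affects negative inputs. Positive values are always returned as-is.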

The graph of Leaky ReLU is:

How to compute Leaky ReLU?

In TensorFlow, we can use the tf.nn.leaky_relu() function to compute it.
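Below is a short sketch that assumes TensorFlow 2.x with eager execution, which is the default. The input values are arbitrary. `tf.nn.leaky_relu()` takes an `alpha` argument for the negative slope. Its default is 0.2, not the 0.01 used by Maas et al., so pass `alpha` explicitly if you want a particular slope.

```python
import numpy as np
import tensorflow as tf

# Sample inputs covering negative, zero and positive values.
x = tf.constant([-2.0, -0.5, 0.0, 1.5])

# With the default alpha (0.2), negative inputs are multiplied by 0.2.
y_default = tf.nn.leaky_relu(x)

# Passing alpha=0.01 uses the slope reported by Maas et al. (2013).
y_small = tf.nn.leaky_relu(x, alpha=0.01)

# In eager mode, .numpy() converts each result tensor to a NumPy array.
print(y_default.numpy())
print(y_small.numpy())
```

In both calls, positive inputs are returned unchanged and only the negative entries differ.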