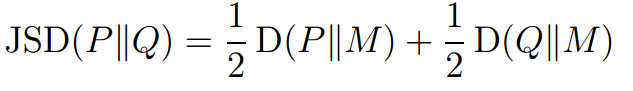

Jensen-Shannon divergence (JSD) is an important, smoothed divergence measure in information theory. It is defined as:

$$\mathrm{JSD}(P \| Q) = \frac{1}{2} D(P \| M) + \frac{1}{2} D(Q \| M)$$

where M = (P+Q)/2

D(P||M) and D(Q||M) are Kullback-Leibler divergences.
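
As a small worked example, assume discrete distributions and base-2 logarithms (the text above fixes neither, so both are assumptions). Take P = (1/2, 1/2) and Q = (1, 0), with M the equal-weight mixture of P and Q. Using the discrete form D(P||M) = Σᵢ P(i) log₂(P(i)/M(i)) and the convention 0·log 0 = 0:

$$
\begin{aligned}
M &= \left(\tfrac{3}{4}, \tfrac{1}{4}\right) \\
D(P \| M) &= \tfrac{1}{2}\log_2\frac{1/2}{3/4} + \tfrac{1}{2}\log_2\frac{1/2}{1/4} = 1 - \tfrac{1}{2}\log_2 3 \approx 0.2075 \\
D(Q \| M) &= 1 \cdot \log_2\frac{1}{3/4} = 2 - \log_2 3 \approx 0.4150 \\
\mathrm{JSD}(P \| Q) &= \tfrac{1}{2} D(P \| M) + \tfrac{1}{2} D(Q \| M) \approx 0.3113
\end{aligned}
$$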

Features:

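1. JSD(P||Q) lies in [0, 1] when the logarithm is taken in base 2. JSD = 0 if and only if P and Q are identical; JSD = 1 when P and Q have disjoint supports, that is, no outcome has positive probability under both.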

2. JSD(P||Q) = JSD(Q||P), i.e., it is symmetric, unlike the Kullback-Leibler divergence, for which D(P||Q) and D(Q||P) generally differ.
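
The two features can be checked numerically with a minimal sketch. It assumes discrete distributions given as lists of probabilities and base-2 logarithms; the function names `kl_divergence` and `js_divergence` are illustrative and not taken from any library.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence D(p || q) in bits (base-2 logarithm).

    Assumes p and q are equal-length sequences of probabilities and that
    q[i] > 0 wherever p[i] > 0. Terms with p[i] == 0 contribute nothing,
    following the convention 0 * log 0 = 0.
    """
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence JSD(p || q) in bits."""
    # M is the equal-weight mixture of the two distributions.
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Feature 1: identical distributions give 0, disjoint supports give 1.
same = js_divergence([0.5, 0.5], [0.5, 0.5])
disjoint = js_divergence([1.0, 0.0], [0.0, 1.0])

# Feature 2: JSD is symmetric, while KL generally is not.
p, q = [0.5, 0.5], [0.9, 0.1]
jsd_pq, jsd_qp = js_divergence(p, q), js_divergence(q, p)
kl_pq, kl_qp = kl_divergence(p, q), kl_divergence(q, p)
```

Because M is positive wherever P or Q is, the two KL terms inside `js_divergence` stay finite even when P and Q share no outcomes, which is the sense in which JSD is "smoothed". Calling `kl_divergence(p, q)` directly with a zero in `q` where `p` is positive would instead raise a `ZeroDivisionError` in this sketch.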