LSTMP (LSTM with Recurrent Projection Layer) is an improvement on LSTM with peephole connections. This tutorial introduces the model for LSTM beginners.

Comparing LSTMP and LSTM with peephole connections

LSTMP was proposed in the paper "Long Short-Term Memory Based Recurrent Neural Network Architectures for Large Vocabulary Speech Recognition".

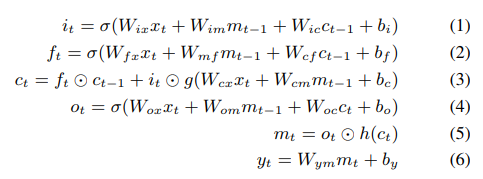

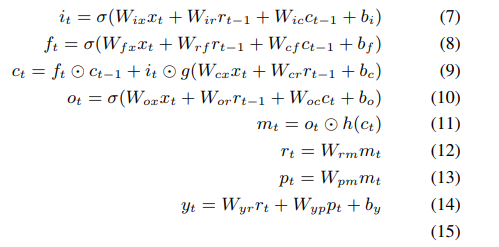

| LSTM with peephole conncections | LSTMP |

|

|

\(m_t\) is the output of the LSTM cell at the current time step. Equations (1)-(5) for LSTM with peephole connections and equations (7)-(11) for LSTMP have the same form.

In LSTM, we usually set \(y_t = m_t\). LSTMP instead adds a recurrent projection layer on top of \(m_t\).

This recurrent projection layer is:

\[r_t = W_{rm}m_t\]

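In LSTMP, \(r_t\) replaces \(m_t\) as the output of the current LSTM cell. In the paper it is also the value fed back into the cell at the next time step, which is why the projection is called recurrent. The weight matrix \(W_{rm}\) compresses or enlarges \(m_t\).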
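To make the role of \(r_t\) concrete, here is a minimal pure-Python sketch of one LSTMP time step. It rests on several assumptions that are not stated in this tutorial: the gate equations follow the peephole formulation of the cited paper, the cell input and output activations are both tanh, the peephole weights are diagonal (stored as vectors), and vectors are treated as rows so that products are written `v W`. The names `lstmp_step`, `init_params`, `vecmat`, and all sizes are hypothetical and chosen only for illustration.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def vecmat(v, W):
    """Row vector v (length n) times matrix W (n x k) -> list of length k."""
    return [sum(vk * wk for vk, wk in zip(v, col)) for col in zip(*W)]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

def init_params(n_in, n_cell, n_proj, seed=0):
    """Random illustrative weights; a real model would learn these."""
    rng = random.Random(seed)
    p = {}
    for name in ("i", "f", "c", "o"):
        p["W_" + name + "x"] = rand_matrix(n_in, n_cell, rng)    # input weights
        p["W_" + name + "r"] = rand_matrix(n_proj, n_cell, rng)  # recurrent weights on r_{t-1}
        p["b_" + name] = [0.0] * n_cell
    for name in ("w_ic", "w_fc", "w_oc"):                        # diagonal peepholes
        p[name] = [rng.uniform(-0.1, 0.1) for _ in range(n_cell)]
    p["W_rm"] = rand_matrix(n_cell, n_proj, rng)                 # projection matrix
    return p

def lstmp_step(x, r_prev, c_prev, p):
    """One LSTMP step: returns the projected output r_t and cell state c_t."""
    def pre(name):
        # x_t W_{*x} + r_{t-1} W_{*r} + b_*
        a = vecmat(x, p["W_" + name + "x"])
        b = vecmat(r_prev, p["W_" + name + "r"])
        return [ak + bk + bias for ak, bk, bias in zip(a, b, p["b_" + name])]

    # Input and forget gates peek at the previous cell state c_{t-1}.
    i = [sigmoid(z + w * c) for z, w, c in zip(pre("i"), p["w_ic"], c_prev)]
    f = [sigmoid(z + w * c) for z, w, c in zip(pre("f"), p["w_fc"], c_prev)]
    g = [math.tanh(z) for z in pre("c")]
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # The output gate peeks at the new cell state c_t.
    o = [sigmoid(z + w * ck) for z, w, ck in zip(pre("o"), p["w_oc"], c)]
    m = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    # Recurrent projection: r_t = W_rm m_t (row-vector form: m_t W_rm).
    r = vecmat(m, p["W_rm"])
    return r, c

params = init_params(n_in=8, n_cell=20, n_proj=5)
r, c = [0.0] * 5, [0.0] * 20
for x in ([0.1] * 8, [0.2] * 8):
    r, c = lstmp_step(x, r, c, params)  # r, not m, is carried to the next step
print(len(r), len(c))  # 5 20
```

Note that the recurrent weights act on the 5-dimensional `r_prev` rather than on the 20-dimensional `m`, so the recurrent matrices shrink when the projection size is smaller than the cell size.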

For example:

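Treat \(m_t\) as a row vector, so that the projection is computed as \(m_t W_{rm}\). If \(m_t\) has shape \(1 \times 200\) and \(W_{rm}\) has shape \(200 \times 100\), then \(r_t\) has shape \(1 \times 100\), so the output is compressed. If \(W_{rm}\) instead has shape \(200 \times 300\), then \(r_t\) has shape \(1 \times 300\), so the output is enlarged.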
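The shape arithmetic above can be checked with a short pure-Python sketch. The random values and the helper names `vecmat` and `rand_matrix` are assumptions made for illustration; only the shapes matter here.

```python
import random

def vecmat(v, W):
    """Row vector v (length n) times matrix W (n x k) -> list of length k."""
    return [sum(vk * wk for vk, wk in zip(v, col)) for col in zip(*W)]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
m_t = [rng.uniform(-1.0, 1.0) for _ in range(200)]  # shape 1 x 200

W_compress = rand_matrix(200, 100, rng)  # W_rm with shape 200 x 100
W_enlarge = rand_matrix(200, 300, rng)   # W_rm with shape 200 x 300

r_small = vecmat(m_t, W_compress)  # shape 1 x 100
r_large = vecmat(m_t, W_enlarge)   # shape 1 x 300

print(len(r_small), len(r_large))  # 100 300
```

The second dimension of \(W_{rm}\) alone decides the size of \(r_t\); the cell size of 200 stays unchanged in both cases.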