Sinusoidal position encoding, or position embedding, is used in the Transformer. In this tutorial, we will show you how to implement it using TensorFlow.

Sinusoidal position encoding

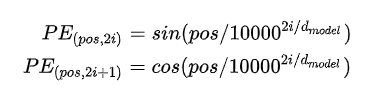

This encoding is defined as:
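For reference, the standard sinusoidal formulation, which the code later in this tutorial follows, can be written as below. Here $pos$ is the position in the sequence, $i$ indexes a sin/cos pair of dimensions, and $d_{model}$ is the encoding size (the `d_model` argument in the code).

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

$$PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

The code computes the factor $1/10000^{2i/d_{model}}$ as $\exp\left(-2i \cdot \ln(10000)/d_{model}\right)$, which is the same quantity.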

To understand it, you can read this tutorial:

Understand Position Encoding in Deep Learning: A Beginner Guide – Deep Learning Tutorial

How to implement position encoding in tensorflow?

Here we will create a class to do it.

For example:

    import numpy as np
    import tensorflow as tf

    class PositionalEncoding_Layer():
        """
        Implements the sinusoidal positional encoding function
        Based on https://nlp.seas.harvard.edu/2018/04/03/attention.html#positional-encoding
        """

        def __init__(self):
            pass

        def encode(self, max_len, d_model):
            # d_model is assumed to be even so that the sin and cos columns pair up
            pe = np.zeros([max_len, d_model], dtype=np.float32)
            # column vector of positions, shape (max_len, 1)
            position = np.expand_dims(np.arange(0, max_len, dtype=np.float32), axis=1)
            # 1 / 10000^(2i / d_model) for each even index 2i, shape (d_model / 2,)
            div_term = np.exp(np.arange(0.0, float(d_model), 2.0, dtype=np.float32) * -(np.log(10000.0) / float(d_model)))
            pe[:, 0::2] = np.sin(position * div_term)  # even columns
            pe[:, 1::2] = np.cos(position * div_term)  # odd columns
            pe = tf.convert_to_tensor(pe, dtype=tf.float32)
            # add a leading batch axis: (1, max_len, d_model)
            pe = tf.expand_dims(pe, axis=0)
            return pe

        # inputs: batch_size, seq_max_len, dim
        def call(self, inputs, max_len, inputs_dim):
            # max_len, d_model = tf.shape(inputs)[-2], tf.shape(inputs)[-1]
            pe = self.encode(max_len, inputs_dim)
            return pe

Then we can evaluate it.

    if __name__ == "__main__":
        batch_size, sql_max_len, dim = 3, 20, 200
        encoder_dim = 30
        inputs = tf.random.uniform((batch_size, sql_max_len, dim), minval=-10, maxval=10)
        pe_layer = PositionalEncoding_Layer()
        outputs = pe_layer.call(inputs, sql_max_len, dim)
        # The Session-based code below targets the TensorFlow 1.x graph-mode API
        init = tf.global_variables_initializer()
        init_local = tf.local_variables_initializer()
        with tf.Session() as sess:
            sess.run([init, init_local])
            np.set_printoptions(precision=4, suppress=True)
            a = sess.run(outputs)
            print(a.shape)
            print(a)

Here sql_max_len = 20 and dim = 200, which means we will create a position encoding matrix of shape 20 * 200.

Running this code, we will see:

    (1, 20, 200)
    [[[ 0.      1.      0.     ...  1.      0.      1.    ]
      [ 0.8415  0.5403  0.7907 ...  1.      0.0001  1.    ]
      [ 0.9093 -0.4161  0.9681 ...  1.      0.0002  1.    ]
      ...
      [-0.9614 -0.2752  0.2024 ...  1.      0.0019  1.    ]
      [-0.751   0.6603 -0.6505 ...  1.      0.002   1.    ]
      [ 0.1499  0.9887 -0.9988 ...  1.      0.0021  1.    ]]]
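A few of the printed values can be checked by hand. The sketch below recomputes the second row (position 1) for the first, second and last sin/cos pairs using only Python's `math` module. The names `freq`, `first`, `second` and `last` are illustrative and not part of the tutorial's class.

```python
import math

d_model = 200
pos = 1.0  # second row of the matrix (position index 1)

def freq(even_index):
    # 1 / 10000^(2i / d_model), where even_index is the even column index 2i
    return 1.0 / 10000.0 ** (even_index / d_model)

# (sin, cos) values for columns (0, 1), (2, 3) and (198, 199)
first = (math.sin(pos * freq(0.0)), math.cos(pos * freq(0.0)))
second = (math.sin(pos * freq(2.0)), math.cos(pos * freq(2.0)))
last = (math.sin(pos * freq(198.0)), math.cos(pos * freq(198.0)))

print(round(first[0], 4), round(first[1], 4))  # 0.8415 0.5403
print(round(second[0], 4))                     # 0.7907
print(round(last[0], 4), round(last[1], 4))    # 0.0001 1.0
```

These agree with the row `[ 0.8415 0.5403 0.7907 ... 1. 0.0001 1. ]` in the output. The last columns change very slowly with position because their frequency is close to 1/10000.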

Here we use tf.expand_dims() to add a leading batch dimension to the position encoding, so the result has the shape (1, 20, 200).
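The leading axis of size 1 matters when the encoding is added to a batch of inputs: broadcasting then applies the same (20, 200) matrix to every example. The tutorial's call() only returns the encoding, so the addition below is an assumption about how the result would typically be used. NumPy stands in for TensorFlow here, since both follow the same broadcasting rules for this case.

```python
import numpy as np

batch_size, max_len, d_model = 3, 20, 200

# Rebuild the (1, max_len, d_model) encoding with NumPy only
position = np.arange(max_len, dtype=np.float32)[:, None]
div_term = np.exp(np.arange(0.0, d_model, 2.0, dtype=np.float32) * -(np.log(10000.0) / d_model))
pe = np.zeros((1, max_len, d_model), dtype=np.float32)
pe[0, :, 0::2] = np.sin(position * div_term)
pe[0, :, 1::2] = np.cos(position * div_term)

# Random stand-in for a batch of embeddings, as in the tutorial's test code
inputs = np.random.uniform(-10, 10, size=(batch_size, max_len, d_model)).astype(np.float32)

# (3, 20, 200) + (1, 20, 200): the size-1 axis is broadcast over the batch
outputs = inputs + pe
print(outputs.shape)  # (3, 20, 200)
```

Without the extra axis the addition would still broadcast in this particular case, but keeping an explicit batch axis makes the intended alignment of the dimensions clear.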

The position encoding looks like:

To plot multiple lines in a chart, you can read:

Step Guide to Plot Multiple Lines in Matplotlib – Matplotlib Tutorial
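As a rough sketch of how such a chart could be produced, the code below recomputes the encoding with NumPy and plots a few columns against position using Matplotlib. The helper name `sinusoidal_encoding` and the choice of columns are illustrative assumptions, not taken from the original tutorial.

```python
import numpy as np
import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so no display is needed
import matplotlib.pyplot as plt

def sinusoidal_encoding(max_len, d_model):
    # Same computation as encode() in the tutorial, without the TensorFlow steps
    pe = np.zeros((max_len, d_model), dtype=np.float32)
    position = np.arange(max_len, dtype=np.float32)[:, None]
    div_term = np.exp(np.arange(0.0, d_model, 2.0, dtype=np.float32) * -(np.log(10000.0) / d_model))
    pe[:, 0::2] = np.sin(position * div_term)
    pe[:, 1::2] = np.cos(position * div_term)
    return pe

pe = sinusoidal_encoding(20, 200)

fig, ax = plt.subplots(figsize=(8, 4))
for dim in (0, 1, 20, 21):  # two sin/cos pairs with different frequencies
    ax.plot(np.arange(pe.shape[0]), pe[:, dim], label="dim %d" % dim)
ax.set_xlabel("position")
ax.set_ylabel("encoding value")
ax.legend()
```

To view the chart, call `fig.savefig("position_encoding.png")` or switch to an interactive backend and call `plt.show()`.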