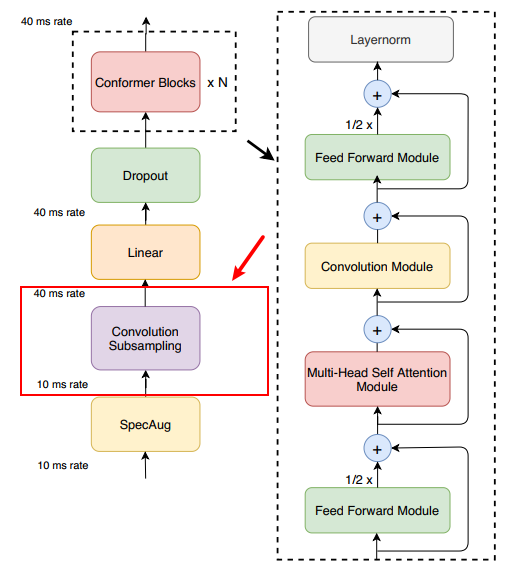

The convolution subsampling module is an important part of input processing in Conformer. In this tutorial, we will show how to implement it using TensorFlow.

The figure above shows where convolution subsampling sits in the Conformer architecture.

How do we implement it in TensorFlow?

We will base our implementation on the code linked below. It uses 2D convolutional subsampling to reduce the input tensor to about 1/4 of its original length.

https://github.com/sooftware/conformer/blob/9318418cef5b516cd094c0ce2a06b80309938c70/conformer/convolution.py#L152

https://github.com/thanhtvt/conformer/blob/master/conformer/subsampling.py

From this code, we find:

1. The input tensor has shape batch_size * sequence_len * dim, and it is reshaped to batch_size * 1 * sequence_len * dim.

For example:

    outputs = self.sequential(inputs.unsqueeze(1))

2. There are two convolution layers with kernel_size = 3 and stride = 2:

    self.sequential = nn.Sequential(
        nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=2),
        nn.ReLU(),
        nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=2),
        nn.ReLU(),
    )

A dropout layer can also be added. However, we think batch normalization is the better choice here.

Dropout vs Batch Normalization – Which is Better for Multilayered Neural Network
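The two observations about the reference code can be traced as tensor shapes. The following is a minimal sketch in plain Python, not code from either repository: `conv_out_len` and `reference_shapes` are hypothetical helper names, it assumes PyTorch's default `padding=0` for `nn.Conv2d`, and `out_channels=512` is only an example value.

```python
def conv_out_len(length, kernel_size=3, stride=2, padding=0):
    """Output size of one convolution along a single axis (floor formula)."""
    return (length + 2 * padding - kernel_size) // stride + 1


def reference_shapes(batch_size, seq_len, dim, out_channels=512):
    """Trace shapes through unsqueeze(1) and two 3x3, stride-2 convolutions."""
    shapes = [(batch_size, seq_len, dim)]
    # unsqueeze(1) adds a channel axis: (batch, 1, time, feature)
    shapes.append((batch_size, 1, seq_len, dim))
    time, feat = seq_len, dim
    for _ in range(2):
        # Each layer shrinks both the time axis and the feature axis
        time, feat = conv_out_len(time), conv_out_len(feat)
        shapes.append((batch_size, out_channels, time, feat))
    return shapes


for shape in reference_shapes(batch_size=3, seq_len=12, dim=80):
    print(shape)
```

Under these assumptions the time axis goes from 12 to 5 to 2, which is roughly a quarter of the input length, and the feature axis shrinks in the same way.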

Then, our example code is below:

    import tensorflow as tf  # uses the TensorFlow 1.x API (tf.layers, tf.Session)


    class ConvSubsampling_Layer():
        def __init__(self):
            pass

        # inputs: batch_size * seq_length * dim
        def call(self, inputs, filters=(512, 512), kernel_size=(3, 3), trainable=True):
            # batch_size * 1 * seq_length * dim; with the default channels_last
            # layout this is read as height=1, width=seq_length, channels=dim
            inputs = tf.expand_dims(inputs, axis=1)
            x = inputs
            num_layers_per_block = len(filters)
            for i in range(num_layers_per_block):
                conv_name = 'conv' + str(i) + '_' + str(i) + '_3x3'
                bn_name = 'conv' + str(i) + '_' + str(i) + '_3x3/bn'
                # Each stride-2 convolution halves the sequence axis
                x = tf.layers.conv2d(x, filters[i], kernel_size[i],
                                     padding='same',
                                     strides=2,
                                     kernel_initializer=tf.orthogonal_initializer(),
                                     use_bias=True,
                                     trainable=True,
                                     name=conv_name
                                     )
                # `trainable` is used here as the batch-norm training flag
                x = tf.layers.batch_normalization(x, axis=-1, training=trainable, name=bn_name)
                x = tf.nn.relu(x)
            return x

Then, we can evaluate it.

    if __name__ == "__main__":
        import numpy as np

        batch_size, seq_len, dim = 3, 12, 80
        inputs = tf.random.uniform((batch_size, seq_len, dim), minval=-10, maxval=10)
        sampling_layer = ConvSubsampling_Layer()
        outputs = sampling_layer.call(inputs)
        init = tf.global_variables_initializer()
        init_local = tf.local_variables_initializer()
        with tf.Session() as sess:
            sess.run([init, init_local])
            np.set_printoptions(precision=4, suppress=True)
            a = sess.run(outputs)
            print(a.shape)
            print(a)

Here the input has shape 3 * 12 * 80 and the output has shape (3, 1, 3, 512), so the output sequence length is 12/4 = 3.

Running this code, we get:

    (3, 1, 3, 512)
    [[[[0. 0. 0. ... 0.301 0. 1.2709]
       [0.4476 0.6557 1.0563 ... 0. 0. 1.0227]
       [1.7737 0. 0. ... 0. 0. 0.7494]]]

     [[[0. 1.4702 0.2812 ... 0. 0. 0. ]
       [0. 0.1001 0.809 ... 0. 2.5678 0.9893]
       [1.3314 0. 0.1156 ... 0.7531 0.1981 0. ]]]

     [[[0.1544 0. 0.7149 ... 1.1866 0.3391 0. ]
       [0.0516 0. 0.5869 ... 0.8839 0. 0. ]
       [0. 1.6138 0. ... 0.6568 0. 0. ]]]]
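The printed shape can be cross-checked by hand. `tf.layers.conv2d` defaults to the `channels_last` layout, so the expanded tensor (3, 1, 12, 80) is read as height 1, width 12 and 80 channels, and with `padding='same'` each stride-2 layer produces ceil(n / 2) positions along each spatial axis. The sketch below is plain Python rather than TensorFlow, and `same_out_len` and `tf_example_shapes` are hypothetical helper names introduced only for this check.

```python
import math


def same_out_len(length, stride=2):
    """Output size along one axis for padding='same': ceil(length / stride)."""
    return math.ceil(length / stride)


def tf_example_shapes(batch_size, seq_len, dim, filters=(512, 512)):
    """Trace NHWC shapes through expand_dims(axis=1) and the stride-2 convolutions."""
    # After expand_dims(axis=1): height=1, width=seq_len, channels=dim
    height, width = 1, seq_len
    shapes = [(batch_size, height, width, dim)]
    for num_filters in filters:
        height, width = same_out_len(height), same_out_len(width)
        shapes.append((batch_size, height, width, num_filters))
    return shapes


print(tf_example_shapes(3, 12, 80))
```

The height stays at 1 while the width, which carries the sequence, goes from 12 to 6 to 3. Note that in this layout the 80 input features act as channels, so the convolution slides over the sequence axis only.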