conv(weight)+BN+ReLU is a basic building block of convolutional networks. What about conv(weight)+ReLU+BN instead? In this tutorial, we discuss which order to use.

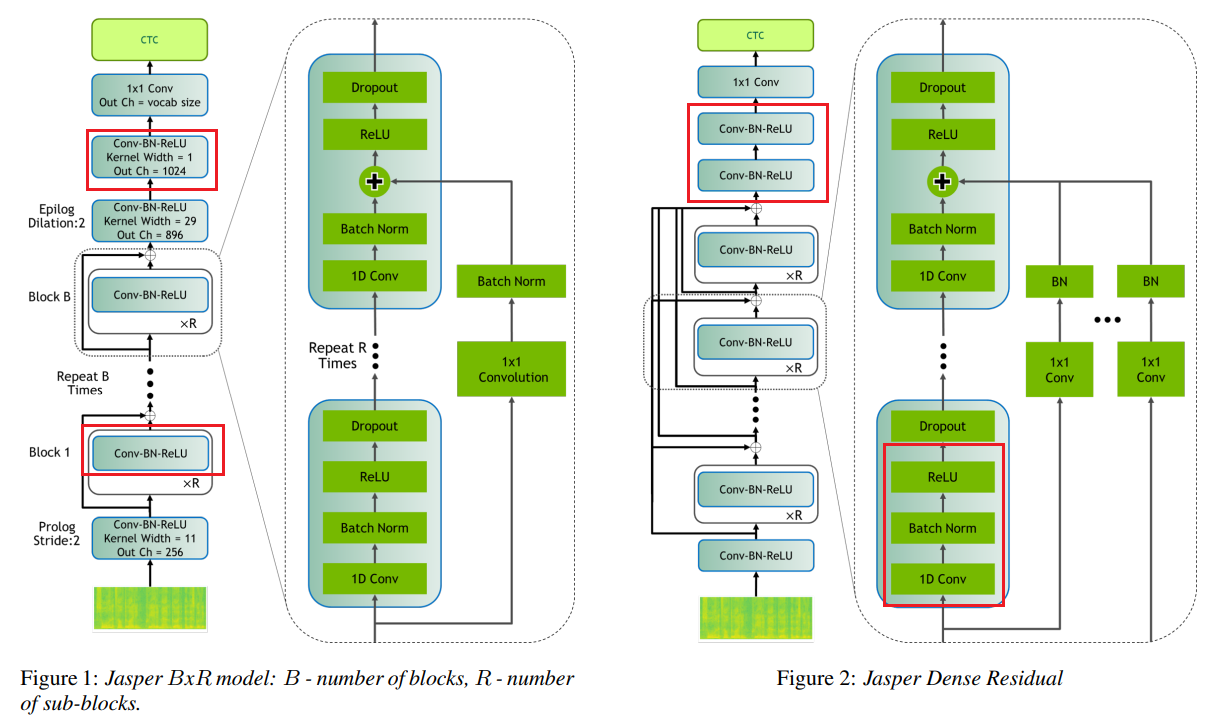
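To make the difference concrete, here is a minimal pure-Python sketch that applies both orderings to a single channel. It assumes a hypothetical 1x1 single-channel "convolution" (just a scalar weight), training-mode batch statistics, and BN with γ = 1 and β = 0. The function names are illustrative only. With BN before ReLU the output is non-negative. With ReLU before BN the activations are re-centered to zero mean, so negative values come back.

```python
import math

def batch_norm(xs, eps=1e-5):
    # Training-mode BN for one channel, assuming gamma = 1 and beta = 0
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return [(x - mean) / math.sqrt(var + eps) for x in xs]

def relu(xs):
    return [max(0.0, x) for x in xs]

def conv_bn_relu(xs, w):
    # Hypothetical "conv": a 1x1, single-channel kernel reduces to a scalar weight
    return relu(batch_norm([w * x for x in xs]))

def conv_relu_bn(xs, w):
    return batch_norm(relu([w * x for x in xs]))

batch = [-2.0, -1.0, 0.0, 1.0, 2.0]
a = conv_bn_relu(batch, 0.5)
b = conv_relu_bn(batch, 0.5)
print([round(v, 3) for v in a])  # [0.0, 0.0, 0.0, 0.707, 1.414]
print([round(v, 3) for v in b])  # [-0.75, -0.75, -0.75, 0.5, 1.75]
```

In the first ordering the next layer sees a half-rectified, non-negative signal; in the second it sees a zero-mean signal, which is the main practical difference between the two.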

For residual networks (ResNets), a residual block is defined as:

We can see that BN is placed before ReLU.

Source: *Exploring Normalization in Deep Residual Networks with Concatenated Rectified Linear Units*
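As a rough illustration of this ordering, here is a toy sketch of a post-activation residual block in the style of the original ResNet basic block: conv, BN, ReLU, conv, BN, add the shortcut, then ReLU. It is not the paper's code. `conv1x1` is a hypothetical scalar-weight stand-in for a real convolution, and BN uses batch statistics with γ = 1 and β = 0.

```python
import math

def batch_norm(xs, eps=1e-5):
    # Training-mode BN for one channel, assuming gamma = 1 and beta = 0
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return [(x - mean) / math.sqrt(var + eps) for x in xs]

def relu(xs):
    return [max(0.0, x) for x in xs]

def conv1x1(xs, w):
    # Hypothetical stand-in for a convolution: one channel, 1x1 kernel, no bias
    return [w * x for x in xs]

def residual_block(xs, w1, w2):
    # Residual branch: conv -> BN -> ReLU -> conv -> BN (BN comes before each ReLU)
    h = relu(batch_norm(conv1x1(xs, w1)))
    h = batch_norm(conv1x1(h, w2))
    # Add the identity shortcut, then apply the final ReLU
    return relu([x + f for x, f in zip(xs, h)])

out = residual_block([-2.0, -1.0, 0.0, 1.0, 2.0], w1=0.5, w2=-1.0)
print(out)
```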

The paper *Identity Mappings in Deep Residual Networks* also places BN before ReLU, in its pre-activation residual units:

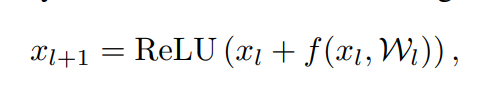
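In the full pre-activation unit, each convolution is preceded by BN and ReLU, and nothing is applied after the shortcut addition. A toy sketch, assuming a hypothetical scalar-weight `conv1x1` in place of a real convolution and BN with batch statistics, γ = 1 and β = 0:

```python
import math

def batch_norm(xs, eps=1e-5):
    # Training-mode BN for one channel, assuming gamma = 1 and beta = 0
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return [(x - mean) / math.sqrt(var + eps) for x in xs]

def relu(xs):
    return [max(0.0, x) for x in xs]

def conv1x1(xs, w):
    # Hypothetical stand-in for a convolution: one channel, 1x1 kernel, no bias
    return [w * x for x in xs]

def preact_residual_block(xs, w1, w2):
    # Full pre-activation ordering: BN -> ReLU -> conv, applied twice
    h = conv1x1(relu(batch_norm(xs)), w1)
    h = conv1x1(relu(batch_norm(h)), w2)
    # Identity shortcut with no ReLU afterwards, so outputs can be negative
    return [x + f for x, f in zip(xs, h)]

out = preact_residual_block([-2.0, -1.0, 0.0, 1.0, 2.0], w1=0.5, w2=1.0)
print(out)
```

Even here BN still comes before ReLU; what changes relative to the original block is that the activation moves in front of the convolution, which keeps the shortcut path a pure identity.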

The paper *Jasper: An End-to-End Convolutional Neural Acoustic Model* also places BN before ReLU.
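Each Jasper sub-block applies a 1D convolution, batch norm, ReLU and dropout, in that order. Below is a minimal single-channel sketch of that ordering, not Jasper's implementation. It assumes a hypothetical `conv1d_same` helper (cross-correlation with zero padding and an odd kernel length), BN with γ = 1 and β = 0 computed over the time steps, and dropout omitted as it would be at inference time.

```python
import math

def conv1d_same(xs, kernel):
    # Hypothetical helper: single-channel cross-correlation with zero "same" padding
    pad = len(kernel) // 2  # assumes an odd kernel length
    padded = [0.0] * pad + list(xs) + [0.0] * pad
    return [sum(k * padded[i + j] for j, k in enumerate(kernel)) for i in range(len(xs))]

def batch_norm(xs, eps=1e-5):
    # BN over the time axis of one channel, assuming gamma = 1 and beta = 0
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return [(x - mean) / math.sqrt(var + eps) for x in xs]

def relu(xs):
    return [max(0.0, x) for x in xs]

def jasper_style_subblock(xs, kernel):
    # conv -> BN -> ReLU; Jasper also applies dropout here, omitted in this sketch
    return relu(batch_norm(conv1d_same(xs, kernel)))

signal = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0]
out = jasper_style_subblock(signal, [0.25, 0.5, 0.25])
print(out)
```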

We have not found a definitive statement on whether BN should come before or after ReLU. However, the papers above all place BN before ReLU, so we recommend that order.