In PyTorch, we can use torch.save() to save a trained model. Here is a tutorial:

Save and Load Model in PyTorch: A Completed Guide – PyTorch Tutorial

There are two ways to save a PyTorch model:

Method 1: only save model weights

We can do this with torch.save(model.state_dict(), PATH).

This approach makes it easy to initialize a model from a pretrained model.

Initialize a PyTorch Model From a Pretrained Model – PyTorch Tutorial
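As a minimal sketch of Method 1, the snippet below saves only the state_dict and loads it back into a freshly constructed model. The TinyNet class and the in-memory buffer are illustrative assumptions and not part of the original tutorial; in practice PATH would usually be a file path.

```python
import io

import torch
import torch.nn as nn


class TinyNet(nn.Module):  # hypothetical model, used only for this sketch
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(4, 2)

    def forward(self, x):
        return self.fc1(x)


trained = TinyNet()

# Save only the weights; an in-memory buffer stands in for PATH here.
buffer = io.BytesIO()
torch.save(trained.state_dict(), buffer)
buffer.seek(0)

# To load, build the model from its class first, then copy the weights in.
restored = TinyNet()
restored.load_state_dict(torch.load(buffer, map_location="cpu"))

same_weights = torch.equal(trained.fc1.weight, restored.fc1.weight)
print(same_weights)
```

Because only tensors are stored, the model class has to be constructed in code before the weights can be loaded.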

Method 2: save a model object

Here is an example:

state = {'epoch': epoch,
         'epochs_since_improvement': epochs_since_improvement,
         'loss': loss,
         'model': model,
         'optimizer': optimizer}
filename = 'checkpoint.tar'
torch.save(state, filename)

Here model is a torch model object.

This approach makes it easy to resume training.

This raises a question: after we have saved a model object, can we change the model structure before we load it? In this tutorial, we will discuss this topic.

Preliminary

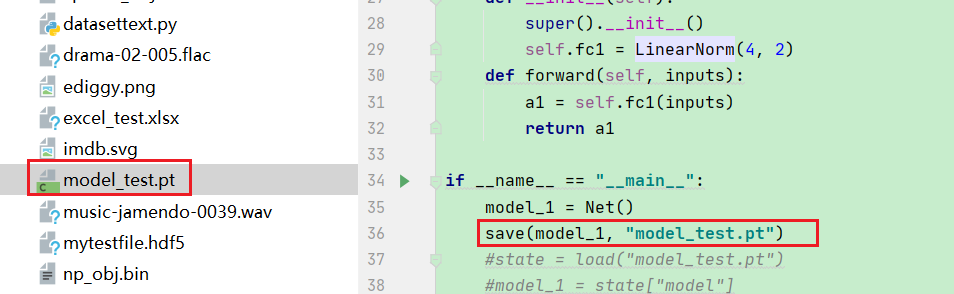

First, we will create a simple torch model and save this model object.

For example:

import numpy as np
import torch
import torch.nn.functional as F
import torch.nn as nn


def save(model, path):
    # Save the whole model object (not just its state_dict) inside a dict.
    state = {"model": model}
    torch.save(state, path, _use_new_zipfile_serialization=True)


def load(path):
    # On PyTorch 2.6 and later, torch.load() defaults to weights_only=True,
    # so loading a full model object also needs weights_only=False.
    return torch.load(path, map_location="cpu")


class LinearNorm(torch.nn.Module):
    def __init__(self, in_dim, out_dim, bias=True, w_init_gain='linear'):
        super(LinearNorm, self).__init__()
        self.linear_layer = torch.nn.Linear(in_dim, out_dim, bias=bias)
        torch.nn.init.xavier_uniform_(
            self.linear_layer.weight,
            gain=torch.nn.init.calculate_gain(w_init_gain))

    def forward(self, x):
        return self.linear_layer(x)


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = LinearNorm(4, 2)

    def forward(self, inputs):
        a1 = self.fc1(inputs)
        return a1


if __name__ == "__main__":
    model_1 = Net()
    save(model_1, "model_test.pt")

In this example, we define a simple torch model Net and create a model object model_1. Then, we save it to model_test.pt.

Load and print model

Then, we can load our saved model and print it.

import numpy as np
import torch
import torch.nn.functional as F
import torch.nn as nn


def save(model, path):
    state = {"model": model}
    torch.save(state, path, _use_new_zipfile_serialization=True)


def load(path):
    return torch.load(path, map_location="cpu")


class LinearNorm(torch.nn.Module):
    def __init__(self, in_dim, out_dim, bias=True, w_init_gain='linear'):
        super(LinearNorm, self).__init__()
        self.linear_layer = torch.nn.Linear(in_dim, out_dim, bias=bias)
        torch.nn.init.xavier_uniform_(
            self.linear_layer.weight,
            gain=torch.nn.init.calculate_gain(w_init_gain))

    def forward(self, x):
        return self.linear_layer(x)


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = LinearNorm(4, 2)

    def forward(self, inputs):
        a1 = self.fc1(inputs)
        return a1


if __name__ == "__main__":
    # load() returns the saved dict, so this prints {'model': Net(...)}.
    model_1 = load("model_test.pt")
    print(model_1)

Running this code prints:

{'model': Net(
  (fc1): LinearNorm(
    (linear_layer): Linear(in_features=4, out_features=2, bias=True)
  )
)}

The output is correct. However, there are several questions we should answer.

Question 1: can we remove the source code of Net when loading the model?

For example:

import numpy as np
import torch
import torch.nn.functional as F
import torch.nn as nn


def save(model, path):
    state = {"model": model}
    torch.save(state, path, _use_new_zipfile_serialization=True)


def load(path):
    return torch.load(path, map_location="cpu")


class LinearNorm(torch.nn.Module):
    def __init__(self, in_dim, out_dim, bias=True, w_init_gain='linear'):
        super(LinearNorm, self).__init__()
        self.linear_layer = torch.nn.Linear(in_dim, out_dim, bias=bias)
        torch.nn.init.xavier_uniform_(
            self.linear_layer.weight,
            gain=torch.nn.init.calculate_gain(w_init_gain))

    def forward(self, x):
        return self.linear_layer(x)


# The Net class is intentionally not defined in this script.
if __name__ == "__main__":
    model_1 = load("model_test.pt")
    print(model_1)

In this code, we have removed the definition of the Net model.

Running this code raises an AttributeError, because the Net class can no longer be found.

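This result means that the class definition of a model must be available when we load a saved model object. torch.save() relies on Python's pickle module, which stores a reference to the class (its module and name) rather than its source code.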
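The behavior in question 1 can be reproduced with plain pickle, which torch.save() uses by default. The sketch below is only an illustration under assumptions: the throwaway module demo_models and the simplified Net class are hypothetical stand-ins for the real script and model.

```python
import pickle
import sys
import types

# Hypothetical throwaway module standing in for the script that defines Net.
demo = types.ModuleType("demo_models")
sys.modules["demo_models"] = demo

SOURCE = """
class Net:
    def __init__(self):
        self.fc1 = [0.1, 0.2]
"""
exec(SOURCE, demo.__dict__)

payload = pickle.dumps(demo.Net())

# The byte stream names the class, but it contains none of the class code.
has_class_name = b"Net" in payload

# Remove the definition, as in question 1, and try to load again.
del demo.Net
try:
    pickle.loads(payload)
    error_name = None
except AttributeError as exc:
    error_name = type(exc).__name__

print(has_class_name, error_name)
```

The saved bytes only say where to find Net, so loading fails as soon as that name no longer resolves to a class.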

Question 2: can we change the parameters and operations of an existing method in the model?

First, we use our saved model as follows:

import numpy as np
import torch
import torch.nn.functional as F
import torch.nn as nn


def save(model, path):
    state = {"model": model}
    torch.save(state, path, _use_new_zipfile_serialization=True)


def load(path):
    return torch.load(path, map_location="cpu")


class LinearNorm(torch.nn.Module):
    def __init__(self, in_dim, out_dim, bias=True, w_init_gain='linear'):
        super(LinearNorm, self).__init__()
        self.linear_layer = torch.nn.Linear(in_dim, out_dim, bias=bias)
        torch.nn.init.xavier_uniform_(
            self.linear_layer.weight,
            gain=torch.nn.init.calculate_gain(w_init_gain))

    def forward(self, x):
        return self.linear_layer(x)


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = LinearNorm(4, 2)

    def forward(self, inputs):
        a1 = self.fc1(inputs)
        return a1


if __name__ == "__main__":
    model_1 = load("model_test.pt")
    # Take the model object out of the saved dict.
    model_1 = model_1["model"]
    x1 = torch.randn((6, 4))
    y = model_1(x1)
    print(y)

Running this code prints:

tensor([[ 1.4922, -0.9868],
        [-0.7774,  0.0537],
        [ 2.0040, -1.6092],
        [-0.0588,  1.5376],
        [-0.2348,  1.0429],
        [ 0.7259, -1.8557]], grad_fn=<AddmmBackward0>)

Then, we add a parameter to the forward() method of Net.

For example:

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = LinearNorm(4, 2)

    def forward(self, inputs, input_2):
        a1 = self.fc1(inputs) + 10.8 * input_2
        return a1

Here we have added an input_2 parameter and changed the operations in the forward() function.

if __name__ == "__main__":
    model_1 = load("model_test.pt")
    model_1 = model_1["model"]
    x1 = torch.randn((6, 4))
    x2 = torch.randn((6, 2))
    y = model_1(x1, x2)
    print(y)

Running this code prints:

tensor([[  5.2701,  21.3095],
        [ -7.3446,  18.9845],
        [ -6.0335, -19.8294],
        [ 16.0848,   7.6931],
        [-33.1544,  19.2937],
        [ -0.8184,   7.3136]], grad_fn=<AddBackward0>)

We can see that model_1 now runs the new forward() function. The saved object stores its weights, while its methods come from the class definition that is available at load time.

Question 3: can we add new methods to the model?

We can modify the Net model as follows:

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = LinearNorm(4, 2)

    def forward(self, inputs):
        a1 = self.fc1(inputs)
        return a1

    def add(self, x1, x2):
        x1 = self.fc1(x1)
        return x1 + 10 * x2

Here we have added a new method add() to the Net model.

We use it as follows:

if __name__ == "__main__":
    model_1 = load("model_test.pt")
    model_1 = model_1["model"]
    x1 = torch.randn((6, 4))
    x2 = torch.randn((6, 2))
    y = model_1.add(x1, x2)
    print(y)

Running this code prints:

tensor([[ -9.6926,   0.0335],
        [ -5.3403, -23.4950],
        [ -6.2144,   4.2127],
        [-14.0001, -23.4343],
        [  0.6301,  -0.8062],
        [ -4.2495,  -0.2431]], grad_fn=<AddBackward0>)

We can see that the new method add() runs successfully.
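Questions 2 and 3 share one mechanism: a loaded object looks up its methods on whatever class definition exists at load time. The sketch below shows this with plain pickle, which torch.save() uses by default. The demo_models module and the simplified, number-based Net class are hypothetical stand-ins chosen for illustration.

```python
import pickle
import sys
import types

# Hypothetical throwaway module standing in for the script that defines Net.
demo = types.ModuleType("demo_models")
sys.modules["demo_models"] = demo

OLD_SOURCE = """
class Net:
    def __init__(self):
        self.scale = 2

    def forward(self, x):
        return self.scale * x
"""

NEW_SOURCE = """
class Net:
    def __init__(self):
        self.scale = 2

    def forward(self, x, x2):
        return self.scale * x + 10 * x2

    def add(self, x, x2):
        return x + x2
"""

# Save an object created from the old class definition.
exec(OLD_SOURCE, demo.__dict__)
payload = pickle.dumps(demo.Net())

# Replace the class definition, then load the saved object.
exec(NEW_SOURCE, demo.__dict__)
loaded = pickle.loads(payload)

forward_result = loaded.forward(3, 1)  # new signature: 2 * 3 + 10 * 1
add_result = loaded.add(3, 1)          # method that did not exist at save time
print(forward_result, add_result)      # prints: 16 4
```

The saved attribute scale survives the round trip, while both the changed forward() and the new add() come from the new class.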

Question 4: can we add a new attribute or weight to the Net model?

From question 3, we know we can add a new method to a saved model. Here we test whether we can also add a new attribute or weight.

For example:

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = LinearNorm(4, 2)
        self.fc2 = LinearNorm(4, 2)

    def forward(self, inputs):
        a1 = self.fc1(inputs)
        return a1


if __name__ == "__main__":
    model_1 = load("model_test.pt")
    print(model_1)
    model_1 = model_1["model"]
    x1 = torch.randn((6, 4))
    y = model_1(x1)
    print(y)

Here we have added a new attribute self.fc2 to the Net model. Running this code prints:

{'model': Net(
  (fc1): LinearNorm(
    (linear_layer): Linear(in_features=4, out_features=2, bias=True)
  )
)}
tensor([[-1.0440,  2.6193],
        [ 1.9054, -1.3289],
        [-0.4548,  0.5769],
        [ 1.9590, -1.2509],
        [-0.0232,  0.6246],
        [ 2.3149, -2.1487]], grad_fn=<AddmmBackward0>)

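This means we can add a new attribute or weight to the class definition without breaking loading. However, the new attribute self.fc2 does not exist in model_1, because loading restores only the attributes that were saved and does not call __init__() again.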
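To see why fc2 is missing, the sketch below counts how often __init__() runs while an object is loaded. It uses plain pickle, which torch.save() uses by default. The demo_models module, the string-valued layers and the init_calls counter are hypothetical and exist only for this illustration.

```python
import pickle
import sys
import types

# Hypothetical throwaway module standing in for the script that defines Net.
demo = types.ModuleType("demo_models")
sys.modules["demo_models"] = demo

OLD_SOURCE = """
class Net:
    def __init__(self):
        self.fc1 = "old layer"
"""

NEW_SOURCE = """
class Net:
    init_calls = 0  # counts how often __init__ runs

    def __init__(self):
        Net.init_calls += 1
        self.fc1 = "old layer"
        self.fc2 = "new layer"
"""

# Save an object created from the old class definition.
exec(OLD_SOURCE, demo.__dict__)
payload = pickle.dumps(demo.Net())

# Load it while the new definition, which declares fc2, is in place.
exec(NEW_SOURCE, demo.__dict__)
loaded = pickle.loads(payload)

init_calls = demo.Net.init_calls
has_fc1 = hasattr(loaded, "fc1")
has_fc2 = hasattr(loaded, "fc2")
print(init_calls, has_fc1, has_fc2)  # prints: 0 True False
```

Loading recreates the object from its saved attributes only, so anything that the new __init__() would have created is absent.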

Question 5: can we use a new attribute or weight in an existing method?

Continuing from question 4, we modify the Net model further so that forward() uses fc2.

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = LinearNorm(4, 2)
        self.fc2 = LinearNorm(4, 2)

    def forward(self, inputs):
        a1 = self.fc2(inputs)
        return a1


if __name__ == "__main__":
    model_1 = load("model_test.pt")
    print(model_1)
    model_1 = model_1["model"]
    x1 = torch.randn((6, 4))
    y = model_1(x1)
    print(y)

Running this code raises:

AttributeError: 'Net' object has no attribute 'fc2'

This follows from question 4: fc2 does not exist in model_1.

Here is another example, in which the new add() method also uses fc2:

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = LinearNorm(4, 2)
        self.fc2 = LinearNorm(4, 2)

    def forward(self, inputs):
        a1 = self.fc2(inputs)
        return a1

    def add(self, x1, x2):
        x1 = self.fc2(x1)
        return x1 + 10.0 * x2


if __name__ == "__main__":
    model_1 = load("model_test.pt")
    print(model_1)
    model_1 = model_1["model"]
    x1 = torch.randn((6, 4))
    x2 = torch.randn((6, 2))
    y = model_1.add(x1, x2)
    print(y)

We will get:

{'model': Net(
  (fc1): LinearNorm(
    (linear_layer): Linear(in_features=4, out_features=2, bias=True)
  )
)}

AttributeError: 'Net' object has no attribute 'fc2'
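If the goal is to add a layer such as fc2 and still reuse the old weights, one possible alternative is to save the state_dict (Method 1) and load it into the new class with strict=False. This is a sketch under assumptions rather than the approach tested in this tutorial: OldNet and NewNet are hypothetical stand-ins for the two versions of Net, and an in-memory buffer replaces the checkpoint file.

```python
import io

import torch
import torch.nn as nn


class OldNet(nn.Module):  # hypothetical: the model as it was when saved
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(4, 2)

    def forward(self, x):
        return self.fc1(x)


class NewNet(nn.Module):  # hypothetical: the model after adding fc2
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(4, 2)
        self.fc2 = nn.Linear(4, 2)  # new layer, absent from the saved weights

    def forward(self, x):
        return self.fc1(x) + self.fc2(x)


old_model = OldNet()
buffer = io.BytesIO()
torch.save(old_model.state_dict(), buffer)
buffer.seek(0)

# __init__ runs here, so fc2 exists with freshly initialized weights.
new_model = NewNet()

# strict=False tolerates keys that are missing from the saved state_dict.
result = new_model.load_state_dict(
    torch.load(buffer, map_location="cpu"), strict=False)

missing = sorted(result.missing_keys)
y = new_model(torch.randn(6, 4))
print(missing)  # prints: ['fc2.bias', 'fc2.weight']
```

Here fc1 receives the saved weights and fc2 keeps its initial values, so both layers can be used in forward().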

To summarize, we reach these conclusions:

- We can use torch.save() to save a torch model object.
- Only the attributes and weights of the object are saved; the class definition must still be available when loading.
- We can add new attributes or weights to the class definition. However, they do not exist in the loaded object, so neither new nor existing methods can use them.
- We can add new methods to the model.
- We can change the parameters or operations of existing methods in the model.