In this tutorial, we introduce how the basic RNN is implemented in TensorFlow, so that you can understand how it works more clearly.

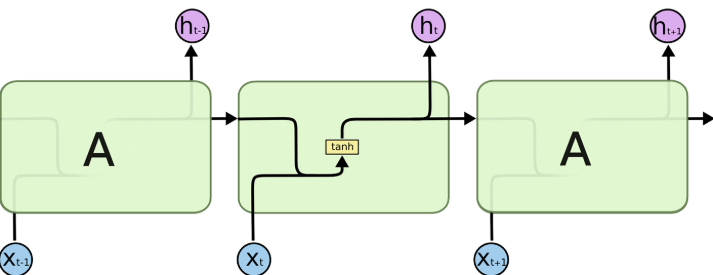

Structure of basic RNN

The basic rnn looks like:

It can be defined as:

\[h_t = \tanh(W x_t + U h_{t-1} + b)\]

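Here \(x_t \in \mathbb{R}^{m}\) is the input at time step \(t\), \(h_t \in \mathbb{R}^{n}\) is the hidden state, \(b \in \mathbb{R}^{n}\) is the bias, and \(W \in \mathbb{R}^{n \times m}\) and \(U \in \mathbb{R}^{n \times n}\) are the weight matrices, shared across all time steps. (TensorFlow multiplies row vectors by weights, so it stores the transpose of \(W\), an \(m \times n\) matrix.)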
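To make the formula concrete, here is a minimal NumPy sketch (not TensorFlow) of one RNN step and of unrolling it over a sequence. The function names `rnn_step` and `rnn_forward`, the sizes m = 3, n = 4, T = 5, and the random weights are all hypothetical choices for illustration; the shapes follow the column-vector convention of the equation above.

```python
import numpy as np

def rnn_step(x_t, h_prev, W, U, b):
    """One basic RNN step: h_t = tanh(W x_t + U h_{t-1} + b).

    Shapes: x_t (m,), h_prev (n,), W (n, m), U (n, n), b (n,).
    """
    return np.tanh(W @ x_t + U @ h_prev + b)

def rnn_forward(xs, h0, W, U, b):
    """Unroll the cell over xs of shape (T, m); return every h_t stacked as (T, n)."""
    h = h0
    hs = []
    for x_t in xs:
        # The same W, U and b are reused at every time step
        h = rnn_step(x_t, h, W, U, b)
        hs.append(h)
    return np.stack(hs)

rng = np.random.default_rng(0)
m, n, T = 3, 4, 5  # hypothetical input size, hidden size, sequence length
W = rng.normal(scale=0.5, size=(n, m))
U = rng.normal(scale=0.5, size=(n, n))
b = np.zeros(n)
xs = rng.normal(size=(T, m))

hs = rnn_forward(xs, np.zeros(n), W, U, b)
print(hs.shape)  # (5, 4)
```

Because \(\tanh\) squashes its input, every entry of every hidden state stays strictly between -1 and 1.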

How to implement basic RNN in TensorFlow?

The source code can be found here:

https://github.com/tensorflow/tensorflow/blob/r1.8/tensorflow/python/ops/rnn_cell_impl.py

The class BasicRNNCell implements the basic RNN.
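BasicRNNCell does not keep \(W\) and \(U\) as separate variables: its call() method concatenates the input and the previous state and multiplies the result by a single kernel of shape \((m + n) \times n\). The NumPy sketch below checks that this fused form gives the same result as the formula. The sizes, the random values, and stacking \(W^T\) on top of \(U^T\) to build the kernel are assumptions made for illustration; only the concat-then-matmul pattern comes from the source.

```python
import numpy as np

rng = np.random.default_rng(1)
batch, m, n = 2, 3, 4  # hypothetical batch size, input size, hidden size

# Row-vector convention used by TensorFlow: each row is one example
inputs = rng.normal(size=(batch, m))  # x_t
state = rng.normal(size=(batch, n))   # h_{t-1}

# Separate weights in the column-vector convention of the formula
W = rng.normal(size=(n, m))
U = rng.normal(size=(n, n))
b = rng.normal(size=n)

# Fused kernel of shape (m + n, n): W^T stacked on top of U^T
kernel = np.concatenate([W.T, U.T], axis=0)

# Fused form: tanh(concat([inputs, state], axis 1) @ kernel + bias)
fused = np.tanh(np.concatenate([inputs, state], axis=1) @ kernel + b)

# Formula applied row by row: tanh(x W^T + h U^T + b)
separate = np.tanh(inputs @ W.T + state @ U.T + b)

print(np.allclose(fused, separate))  # True
```

One matrix multiplication on the concatenated vector replaces two smaller ones, which is why the cell needs only one kernel variable.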

The core of BasicRNNCell is its call() method:

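```python
# Method of BasicRNNCell in rnn_cell_impl.py (TensorFlow r1.8)
from tensorflow.python.ops import array_ops, math_ops, nn_ops

def call(self, inputs, state):
  """Most basic RNN: output = new_state = act(W * input + U * state + B)."""
  # Concatenate x_t and h_{t-1} along the feature axis, then apply one fused kernel
  gate_inputs = math_ops.matmul(
      array_ops.concat([inputs, state], 1), self._kernel)
  # Add the bias b
  gate_inputs = nn_ops.bias_add(gate_inputs, self._bias)
  # Apply the activation (tanh by default)
  output = self._activation(gate_inputs)
  # The output and the new state are the same tensor
  return output, output
```

However, the basic RNN is rarely used on its own in practice, because it suffers from vanishing and exploding gradients, which make long-range dependencies hard to learn.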
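Why the gradients vanish or explode can be seen from backpropagation through time: since \(h_t = \tanh(W x_t + U h_{t-1} + b)\), each step contributes the Jacobian \(\partial h_t / \partial h_{t-1} = \mathrm{diag}(1 - h_t^2)\, U\), and the gradient reaching \(h_0\) is a product of \(T\) such factors. The NumPy sketch below measures the norm of that product. The function name `jacobian_product_norm`, the choice \(U = s \cdot Q\) with \(Q\) a random orthogonal matrix, and the all-zero inputs are hypothetical; zero inputs keep \(h_t = 0\), so \(\tanh'\) equals 1 and the norm is exactly \(s^T\).

```python
import numpy as np

def jacobian_product_norm(U, W, b, xs, h0):
    """Spectral norm of d h_T / d h_0 for h_t = tanh(W x_t + U h_{t-1} + b)."""
    h = h0
    J = np.eye(len(h0))
    for x_t in xs:
        h = np.tanh(W @ x_t + U @ h + b)
        # d h_t / d h_{t-1} = diag(1 - h_t**2) @ U; chain it onto the running product
        J = (np.diag(1.0 - h**2) @ U) @ J
    return np.linalg.norm(J, 2)

n, m, T = 4, 3, 50  # hypothetical hidden size, input size, sequence length
rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.normal(size=(n, n)))  # random orthogonal matrix
W = rng.normal(size=(n, m))
b = np.zeros(n)
xs = np.zeros((T, m))  # zero inputs keep h_t = 0, so tanh' = 1 at every step
h0 = np.zeros(n)

for s in (0.5, 1.0, 1.5):
    # Equals s**T here: tiny for s < 1, huge for s > 1
    print(s, jacobian_product_norm(s * Q, W, b, xs, h0))
```

With nonzero inputs, \(\tanh' < 1\) shrinks every factor further, so a recurrent matrix whose largest singular value is below 1 always leads to vanishing gradients, while one above 1 can (but need not) make them explode.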