Both Batch Normalization and Layer Normalization normalize the input \(x\). What is the difference between them? In this tutorial, we will explain it.

Batch Normalization Vs Layer Normalization

Both Batch Normalization and Layer Normalization normalize the input \(x\) using its mean and variance. Each is introduced in these tutorials:

Layer Normalization Explained for Beginners – Deep Learning Tutorial

Understand Batch Normalization: A Beginner Explain – Machine Learning Tutorial

The key difference between Batch Normalization and Layer Normalization is how the mean and variance of input \(x\) are computed, that is, along which axis, before they are used to normalize \(x\).
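
As a sketch of the normalization step the two methods share, write \(\mu\) for the mean, \(\sigma^2\) for the variance, and \(\epsilon\) for a small constant added for numerical stability (these symbols are assumptions of this sketch, not notation from the original tutorial):

\[
\hat{x} = \frac{x - \mu}{\sqrt{\sigma^2 + \epsilon}}
\]

The two methods differ only in which elements of \(x\) contribute to \(\mu\) and \(\sigma^2\). The learnable scale and shift usually applied after this step are left out here.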

In batch normalization, the mean and variance of input \(x\) are computed along the batch axis. This is explained in the following tutorial:

Understand the Mean and Variance Computed in Batch Normalization – Machine Learning Tutorial

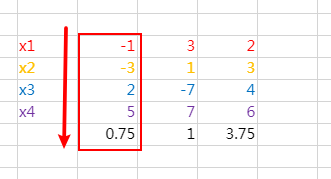

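Here the input \(x\) has shape 64*200, where the batch size is 64. The mean and variance are therefore computed for each of the 200 columns, over the 64 samples in the batch.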

Batch Normalization can normalize input \(x\) as follows:
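
A minimal NumPy sketch of this computation may help. It assumes a random 64*200 input and a small constant `eps`; both are illustrative choices, not values from the original tutorial. It also leaves out the learnable scale and shift and the running statistics that a real batch normalization layer keeps.

```python
import numpy as np

# Illustrative input: 64 samples (batch axis) with 200 features each.
rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(64, 200))

eps = 1e-5  # assumed small constant to avoid division by zero

# Batch normalization statistics: reduce over axis 0 (the batch axis),
# which gives one mean and one variance per column.
batch_mean = x.mean(axis=0, keepdims=True)  # shape (1, 200)
batch_var = x.var(axis=0, keepdims=True)    # shape (1, 200)

# Normalize every column with its own statistics.
x_bn = (x - batch_mean) / np.sqrt(batch_var + eps)
```

After this step each of the 200 columns of `x_bn` has a mean close to 0 and a variance close to 1 across the batch.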

However, layer normalization usually normalizes input \(x\) along the last axis, and it is often used to normalize recurrent neural networks. For example:

Normalize the Output of BiLSTM Using Layer Normalization

Layer Normalization can normalize input \(x\) as follows:

This means the mean and variance of input \(x\) are computed over each row, not over each column.
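
The row-wise computation can be sketched in NumPy as follows. As before, the random 64*200 input and the constant `eps` are assumptions made for illustration, and the learnable scale and shift of a real layer normalization layer are omitted.

```python
import numpy as np

# Illustrative input: 64 samples (rows) with 200 features each.
rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(64, 200))

eps = 1e-5  # assumed small constant to avoid division by zero

# Layer normalization statistics: reduce over the last axis,
# which gives one mean and one variance per row (per sample).
layer_mean = x.mean(axis=-1, keepdims=True)  # shape (64, 1)
layer_var = x.var(axis=-1, keepdims=True)    # shape (64, 1)

# Normalize every row with its own statistics.
x_ln = (x - layer_mean) / np.sqrt(layer_var + eps)
```

Here each of the 64 rows of `x_ln` has a mean close to 0 and a variance close to 1. Because the statistics depend only on a single sample, the result does not change with the batch size.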