In this tutorial, we will use TensorFlow to build our own LSTM model instead of using tf.nn.rnn_cell.BasicLSTMCell(). With this approach, you can create a customized LSTM.

LSTM Model

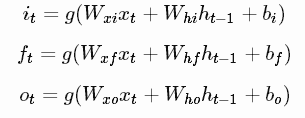

The structure of an LSTM is shown in the figure above.

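It contains three gates: the input gate, the forget gate and the output gate. In the code below, a fourth group of weights (named the "control gate") computes the candidate memory cell.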
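For reference, one LSTM step, written the way the unit(x, hidden_memory_tm1) function in the code below computes it, is shown here. $\sigma$ is the sigmoid, $\odot$ is element-wise multiplication, and $x_t$ and $h_{t-1}$ are row vectors (one row per batch item), so the weights multiply on the right. $W_o, U_o, b_o$ correspond to Wog, Uog, bog in the code, and $W_c, U_c, b_c$ to the control_gate_* weights.

$$
\begin{aligned}
i_t &= \sigma(x_t W_i + h_{t-1} U_i + b_i) \\
f_t &= \sigma(x_t W_f + h_{t-1} U_f + b_f) \\
o_t &= \sigma(x_t W_o + h_{t-1} U_o + b_o) \\
\tilde{c}_t &= \tanh(x_t W_c + h_{t-1} U_c + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$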

To learn more about LSTM, you can read:

Understand Long Short-Term Memory Network(LSTM) – LSTM Tutorial

How to build our own LSTM Model

We will create an LSTM class with TensorFlow. Here is the full example code. It is written against the TensorFlow 1.x graph API (tf.variable_scope, tf.random_normal).

```python
# file name: lstm.py
import tensorflow as tf
import numpy as np


class LSTM():
    '''
    emb_dim: word embedding dim, such as 200
    hidden_dim: size of the hidden output and the cell state
    sequence_length: number of time steps
    inputs: batch_size * time_step * emb_dim
    '''

    def __init__(self, inputs, emb_dim, hidden_dim, sequence_length):
        self.emb_dim = emb_dim
        self.hidden_dim = hidden_dim
        self.sequence_length = sequence_length
        self.batch_size = tf.shape(inputs)[0]
        # time-major layout: time_step * batch_size * emb_dim
        self.inputs = tf.transpose(inputs, perm=[1, 0, 2])

        with tf.variable_scope('lstm_init'):
            self.g_recurrent_unit = self.create_recurrent_unit()  # maps h_tm1 to h_t
            self.g_output_unit = self.create_output_unit()  # maps h_t to o_t (output of each step)

        # input of the first time step: batch_size * emb_dim
        x0 = tf.nn.embedding_lookup(self.inputs, 0)

        # Initial output and state, such as 100 * 200, stacked to 2 * batch_size * hidden_dim
        self.h0 = tf.zeros([self.batch_size, self.hidden_dim])
        self.h0 = tf.stack([self.h0, self.h0])

        gen_o = tf.TensorArray(dtype=tf.float32, size=self.sequence_length,
                               dynamic_size=False, infer_shape=True)

        # x_t is the current input, h_tm1 is the (output, state) pair of the last cell
        def _g_recurrence(i, x_t, h_tm1, gen_o):
            h_t = self.g_recurrent_unit(x_t, h_tm1)
            o_t = self.g_output_unit(h_t)  # batch_size * hidden_dim
            gen_o = gen_o.write(i, o_t)  # save the output of each step
            # clamp the index so the last iteration does not read past the end
            i_next = tf.where(tf.less(i, self.sequence_length - 1), i + 1, self.sequence_length - 1)
            x_t_next = tf.nn.embedding_lookup(self.inputs, i_next)  # batch_size * emb_dim
            return i + 1, x_t_next, h_t, gen_o

        # loop over all time steps
        i_l, _, h_l_t, self.gen_o = tf.while_loop(
            cond=lambda i, _1, _2, _3: i < self.sequence_length,  # stop condition
            body=_g_recurrence,
            loop_vars=(tf.constant(0, dtype=tf.int32),  # start index
                       x0,
                       self.h0, gen_o))

        self.gen_o = self.gen_o.stack()  # seq_length * batch_size * hidden_dim
        self.gen_o = tf.transpose(self.gen_o, perm=[1, 0, 2])  # batch_size * seq_length * hidden_dim

    # initialize matrix
    def init_matrix(self, shape):
        return tf.random_normal(shape, stddev=0.1)

    # initialize vector
    def init_vector(self, shape):
        return tf.zeros(shape)

    def create_recurrent_unit(self):
        # Weights and bias for the input and hidden tensors
        # input gate
        self.Wi = tf.Variable(self.init_matrix([self.emb_dim, self.hidden_dim]), name='input_gate_wi')
        self.Ui = tf.Variable(self.init_matrix([self.hidden_dim, self.hidden_dim]), name='input_gate_ui')
        self.bi = tf.Variable(self.init_vector([self.hidden_dim]), name='input_gate_bias')
        # forget gate
        self.Wf = tf.Variable(self.init_matrix([self.emb_dim, self.hidden_dim]), name='forget_gate_wf')
        self.Uf = tf.Variable(self.init_matrix([self.hidden_dim, self.hidden_dim]), name='forget_gate_uf')
        self.bf = tf.Variable(self.init_vector([self.hidden_dim]), name='forget_gate_bias')
        # output gate
        self.Wog = tf.Variable(self.init_matrix([self.emb_dim, self.hidden_dim]), name='output_gate_wo')
        self.Uog = tf.Variable(self.init_matrix([self.hidden_dim, self.hidden_dim]), name='output_gate_uo')
        self.bog = tf.Variable(self.init_vector([self.hidden_dim]), name='output_gate_bias')
        # control gate (candidate memory cell)
        self.Wc = tf.Variable(self.init_matrix([self.emb_dim, self.hidden_dim]), name='control_gate_wc')
        self.Uc = tf.Variable(self.init_matrix([self.hidden_dim, self.hidden_dim]), name='control_gate_uc')
        self.bc = tf.Variable(self.init_vector([self.hidden_dim]), name='control_gate_bias')

        # an lstm unit: x is the input, hidden_memory_tm1 is the output of the last cell,
        # which contains two parts (output, state)
        def unit(x, hidden_memory_tm1):
            previous_hidden_state, c_prev = tf.unstack(hidden_memory_tm1)  # output and state of last cell
            # Input Gate
            i = tf.sigmoid(
                tf.matmul(x, self.Wi) +
                tf.matmul(previous_hidden_state, self.Ui) + self.bi
            )
            # Forget Gate
            f = tf.sigmoid(
                tf.matmul(x, self.Wf) +
                tf.matmul(previous_hidden_state, self.Uf) + self.bf
            )
            # Output Gate
            o = tf.sigmoid(
                tf.matmul(x, self.Wog) +
                tf.matmul(previous_hidden_state, self.Uog) + self.bog
            )
            # New Memory Cell
            c_ = tf.nn.tanh(
                tf.matmul(x, self.Wc) +
                tf.matmul(previous_hidden_state, self.Uc) + self.bc
            )
            # Final Memory Cell
            c = f * c_prev + i * c_
            # Current Hidden State
            current_hidden_state = o * tf.nn.tanh(c)
            # output: 2 * batch_size * hidden_dim
            return tf.stack([current_hidden_state, c])

        return unit

    # output of each lstm unit, you can customize it
    def create_output_unit(self):
        def unit(hidden_memory_tuple):
            hidden_state, c_prev = tf.unstack(hidden_memory_tuple)
            return hidden_state  # logits
        return unit
```
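To make the gate arithmetic in unit(x, hidden_memory_tm1) easier to check, here is a minimal NumPy sketch of a single step. It is not part of lstm.py: the lstm_unit and init_params helpers and the params dict are hypothetical, and NumPy's @, np.tanh and np.stack stand in for tf.matmul, tf.nn.tanh and tf.stack. The weight names and the 2 * batch_size * hidden_dim (output, state) layout follow the TensorFlow class.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_unit(x, hidden_memory_tm1, p):
    """One LSTM step, mirroring unit(x, hidden_memory_tm1) in lstm.py.

    x: batch_size x emb_dim
    hidden_memory_tm1: 2 x batch_size x hidden_dim, stacked (h_prev, c_prev)
    p: hypothetical dict holding weights named like the TF variables
    """
    h_prev, c_prev = hidden_memory_tm1                          # same role as tf.unstack
    i = sigmoid(x @ p["Wi"] + h_prev @ p["Ui"] + p["bi"])       # input gate
    f = sigmoid(x @ p["Wf"] + h_prev @ p["Uf"] + p["bf"])       # forget gate
    o = sigmoid(x @ p["Wog"] + h_prev @ p["Uog"] + p["bog"])    # output gate
    c_ = np.tanh(x @ p["Wc"] + h_prev @ p["Uc"] + p["bc"])      # candidate memory
    c = f * c_prev + i * c_                                     # final memory cell
    h = o * np.tanh(c)                                          # current hidden state
    return np.stack([h, c])                                     # same role as tf.stack


def init_params(emb_dim, hidden_dim, rng):
    """Random N(0, 0.1) matrices and zero biases, like init_matrix / init_vector."""
    p = {}
    for gate in ("i", "f", "og", "c"):
        p["W" + gate] = rng.normal(0.0, 0.1, (emb_dim, hidden_dim))
        p["U" + gate] = rng.normal(0.0, 0.1, (hidden_dim, hidden_dim))
        p["b" + gate] = np.zeros(hidden_dim)
    return p


rng = np.random.default_rng(0)
batch_size, emb_dim, hidden_dim = 4, 5, 3
params = init_params(emb_dim, hidden_dim, rng)
x = rng.normal(size=(batch_size, emb_dim))
h0 = np.zeros((2, batch_size, hidden_dim))   # zero initial output and state
h1 = lstm_unit(x, h0, params)
print(h1.shape)  # (2, 4, 3)
```

Because the state is carried as one stacked array, the same value can be passed straight back in as hidden_memory_tm1 on the next step, which is what the while loop in the class does.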

There are some points you should notice:

1. Set the initial output and state

We set both of them to 0; you can customize them.

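```python
# Initial output and state, such as 100 * 200
self.h0 = tf.zeros([self.batch_size, self.hidden_dim])
self.h0 = tf.stack([self.h0, self.h0])  # 2 * batch_size * hidden_dim: (output, state)
```

2. tf.while_loop() is the core of the LSTM; it runs the recurrent unit once per time step.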

To learn more about tf.while_loop(), you can read:

Understand TensorFlow tf.while_loop(): Repeat a Function – TensorFlow Tutorial
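The control flow of the tf.while_loop() call and _g_recurrence can be modeled in plain Python. This is a sketch, not TensorFlow code: run_recurrence is a hypothetical helper, and a toy running-sum step stands in for g_recurrent_unit so the order of reads is easy to follow. It shows why i_next is clamped: the body always prefetches the next input, so on the last iteration it would otherwise read index sequence_length.

```python
def run_recurrence(inputs, h0, step, sequence_length):
    """Plain-Python model of the tf.while_loop in LSTM.__init__.

    inputs: time-major sequence (inputs[t] is the batch at step t)
    step: any function (x_t, h_tm1) -> h_t, standing in for g_recurrent_unit
    Returns the per-step outputs and the list of input indices that were read.
    """
    read_log = [0]
    i, x_t, h_tm1 = 0, inputs[0], h0        # loop_vars: start index, first input, initial state
    outputs = [None] * sequence_length      # fixed-size buffer, like the TensorArray
    while i < sequence_length:              # cond
        h_t = step(x_t, h_tm1)              # body: one recurrent step
        outputs[i] = h_t                    # like gen_o.write(i, o_t)
        # clamp the prefetch index, as tf.where(...) does in the class
        i_next = i + 1 if i < sequence_length - 1 else sequence_length - 1
        x_t = inputs[i_next]
        read_log.append(i_next)
        i, h_tm1 = i + 1, h_t
    return outputs, read_log


# toy recurrence: the "state" is a running sum of the inputs
outputs, read_log = run_recurrence([1, 2, 3, 4], 0, lambda x, h: h + x, 4)
print(outputs)   # [1, 3, 6, 10]
print(read_log)  # [0, 1, 2, 3, 3]
```

The final read of index 3 happens after the last step has already been computed, so its value is carried out of the loop and discarded (it becomes the unused `_` in the class).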

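3. We use a TensorArray to save the output of each LSTM step. Notice how it is created:

```python
gen_o = tf.TensorArray(dtype=tf.float32, size=self.sequence_length,
                       dynamic_size=False, infer_shape=True)
```

dynamic_size=False means gen_o has a fixed size (sequence_length) and cannot grow. infer_shape=True means every element written to it must have the same shape. The read-once behavior comes from a separate argument, clear_after_read, which defaults to True: each element can only be read once.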

You can read more about TensorArray here:

Understand TensorFlow TensorArray: A Beginner Tutorial – TensorFlow Tutorial
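The fixed size and the read-once rule are controlled by two different TensorArray arguments (dynamic_size and clear_after_read). The sketch below is a hypothetical toy class, not tf.TensorArray, that models just those two behaviors, plus the stack-then-transpose step that turns seq_length * batch_size * hidden_dim into batch_size * seq_length * hidden_dim.

```python
import numpy as np


class ToyTensorArray:
    """Hypothetical toy model of two TensorArray options (not the TF class).

    dynamic_size=False: the size is fixed at construction; out-of-range writes fail.
    clear_after_read=True: an element can be read only once.
    """

    def __init__(self, size, dynamic_size=False, clear_after_read=True):
        self.values = [None] * size
        self.dynamic_size = dynamic_size
        self.clear_after_read = clear_after_read

    def write(self, index, value):
        if index >= len(self.values):
            if not self.dynamic_size:
                raise IndexError("fixed-size array: index %d out of range" % index)
            self.values.extend([None] * (index + 1 - len(self.values)))
        self.values[index] = np.asarray(value)
        return self

    def read(self, index):
        value = self.values[index]
        if value is None:
            raise ValueError("element %d was never written or was already read" % index)
        if self.clear_after_read:
            self.values[index] = None   # a second read of this index now fails
        return value

    def stack(self):
        # toy version: simply stacks every slot along a new leading axis
        return np.stack(self.values)


ta = ToyTensorArray(size=3)
for t in range(3):
    ta.write(t, np.full((2, 4), t))     # batch_size=2, hidden_dim=4
stacked = ta.stack()                    # seq_length x batch_size x hidden_dim
out = stacked.transpose(1, 0, 2)        # like tf.transpose(..., perm=[1, 0, 2])
print(out.shape)  # (2, 3, 4)
first = ta.read(0)                      # allowed once; reading index 0 again raises ValueError
```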

4. If you want to add more gates or modify the existing LSTM gates, you can do it in the unit(x, hidden_memory_tm1) function.
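As one example of modifying the gates, the NumPy sketch below implements a known LSTM variant with a coupled input and forget gate, where the forget gate is replaced by f = 1 - i. It is illustrative only: coupled_unit and the params dict are hypothetical names, and the NumPy operations stand in for their TensorFlow counterparts. In lstm.py, the equivalent change would be replacing the forget-gate expression in unit() with `f = 1.0 - i` and dropping Wf, Uf and bf.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def coupled_unit(x, hidden_memory_tm1, p):
    """Variant of unit(x, hidden_memory_tm1) with a coupled input/forget gate."""
    h_prev, c_prev = hidden_memory_tm1
    i = sigmoid(x @ p["Wi"] + h_prev @ p["Ui"] + p["bi"])       # input gate
    f = 1.0 - i                                                 # coupled forget gate, no own weights
    o = sigmoid(x @ p["Wog"] + h_prev @ p["Uog"] + p["bog"])    # output gate
    c_ = np.tanh(x @ p["Wc"] + h_prev @ p["Uc"] + p["bc"])      # candidate memory
    c = f * c_prev + i * c_          # convex mix of old state and candidate
    h = o * np.tanh(c)
    return np.stack([h, c])


rng = np.random.default_rng(1)
batch_size, emb_dim, hidden_dim = 4, 5, 3
p = {}
for gate in ("i", "og", "c"):        # no "f" weights are needed any more
    p["W" + gate] = rng.normal(0.0, 0.1, (emb_dim, hidden_dim))
    p["U" + gate] = rng.normal(0.0, 0.1, (hidden_dim, hidden_dim))
    p["b" + gate] = np.zeros(hidden_dim)

x = rng.normal(size=(batch_size, emb_dim))
prev = np.stack([np.zeros((batch_size, hidden_dim)),
                 np.tanh(rng.normal(size=(batch_size, hidden_dim)))])
hc = coupled_unit(x, prev, p)
print(hc.shape)  # (2, 4, 3)
```

Since c is a weighted average of c_prev and c_ in this variant, the cell state stays within (-1, 1) whenever the previous state does.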

5. If you plan to modify the output of each LSTM unit, you can edit the create_output_unit(self) function.
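A common customization is projecting each hidden state to class logits instead of returning it unchanged. The NumPy sketch below assumes hypothetical W_out (hidden_dim x num_classes) and b_out (num_classes) parameters; in the TensorFlow class these would be tf.Variable objects created inside create_output_unit(self), for example with self.init_matrix and self.init_vector. Each element written to gen_o would then have shape batch_size * num_classes, which still satisfies infer_shape=True because every step has the same shape.

```python
import numpy as np


def create_output_unit(W_out, b_out):
    """Hypothetical customized output unit: map h_t to num_classes logits.

    W_out: hidden_dim x num_classes, b_out: num_classes (illustrative names).
    """
    def unit(hidden_memory_tuple):
        hidden_state, _ = hidden_memory_tuple    # ignore the cell state, as in lstm.py
        return hidden_state @ W_out + b_out      # batch_size x num_classes
    return unit


rng = np.random.default_rng(2)
batch_size, hidden_dim, num_classes = 4, 3, 5
hidden_memory = rng.normal(size=(2, batch_size, hidden_dim))   # stacked (output, state)
output_unit = create_output_unit(rng.normal(0.0, 0.1, (hidden_dim, num_classes)),
                                 np.zeros(num_classes))
logits = output_unit(hidden_memory)
print(logits.shape)  # (4, 5)
```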

How to use this model?

Here is an example:

```python
import lstm

# inputs: a float32 tensor of shape batch_size * time_step * input_size
custom_lstm = lstm.LSTM(inputs, emb_dim=input_size, hidden_dim=hidden_dim, sequence_length=time_step)
output = custom_lstm.gen_o  # batch_size * time_step * hidden_dim
```