If you are using an LSTM or BiLSTM in TensorFlow, you have to handle variable-length sequences, especially if you have added an attention mechanism to your model. In this tutorial, we will show you how to calculate attention over variable-length sequences in TensorFlow.

Preliminaries

```python
# This tutorial uses the TensorFlow 1.x graph API (tf.Session)
import tensorflow as tf
import numpy as np
```

Prepare data

```python
# a holds attention values already produced by softmax,
# shape [batch_size, time_step, 1] = [2, 4, 1]
a = np.array([[[0.2], [0.3], [0.4], [0.1]],
              [[0.3], [0.1], [0.2], [0.4]]])
# l holds the valid length of each sequence, shape [batch_size]:
# the first sequence has 2 valid steps and the second has 3,
# so the valid parts are [[0.2], [0.3]] and [[0.3], [0.1], [0.2]]
l = np.array([2, 3])
```

Convert the NumPy arrays to tensors

```python
aa = tf.convert_to_tensor(a, dtype=tf.float32)
# Sequence lengths are integers; tf.sequence_mask expects an integer tensor
ll = tf.convert_to_tensor(l, dtype=tf.int32)
```

Define a function to calculate the real attention values

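```python
def realAttention(attention, length):
    # attention: [batch_size, max_length, 1]; length: [batch_size] integer tensor
    max_length = tf.shape(attention)[1]
    # 1.0 for valid time steps (t < length), 0.0 for padding: [batch_size, max_length]
    mask = tf.sequence_mask(length, max_length, tf.float32)
    # Add a trailing axis so the mask broadcasts against attention
    mask = tf.reshape(mask, [-1, max_length, 1])
    # Zero out the attention on padded time steps
    a = attention * mask
    # Renormalize so the valid steps of each sequence sum to 1
    a = a / tf.reduce_sum(a, axis=1, keepdims=True)
    return a
```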
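To see what `tf.sequence_mask` and the reshape produce, here is a minimal NumPy sketch that mirrors `realAttention()` step by step. It assumes NumPy only; `sequence_mask_np` and `real_attention_np` are hypothetical helper names for illustration, not TensorFlow APIs.

```python
import numpy as np

def sequence_mask_np(lengths, max_length):
    # Hypothetical NumPy stand-in for tf.sequence_mask: 1.0 where t < length, else 0.0
    t = np.arange(max_length)[None, :]  # [1, max_length]
    return (t < np.asarray(lengths)[:, None]).astype(np.float32)  # [batch_size, max_length]

def real_attention_np(attention, lengths):
    max_length = attention.shape[1]
    # [batch_size, max_length] -> [batch_size, max_length, 1], like tf.reshape in realAttention()
    mask = sequence_mask_np(lengths, max_length)[:, :, None]
    a = attention * mask                     # zero out padded time steps
    return a / a.sum(axis=1, keepdims=True)  # renormalize over the valid steps

a = np.array([[[0.2], [0.3], [0.4], [0.1]],
              [[0.3], [0.1], [0.2], [0.4]]], dtype=np.float32)
l = np.array([2, 3])
print(sequence_mask_np(l, 4))
# [[1. 1. 0. 0.]
#  [1. 1. 1. 0.]]
print(real_attention_np(a, l)[:, :, 0])
```

One consequence of this design: if a sequence has length 0, its denominator is 0 and the result for that row is NaN (0/0), in this sketch as well as in the TensorFlow version.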

Calculate the real attention values

```python
real_attention = realAttention(aa, ll)
```

Print the real attention values

```python
init = tf.global_variables_initializer()
init_local = tf.local_variables_initializer()

with tf.Session() as sess:
    # This graph has no variables, so the initializers are not strictly needed
    sess.run([init, init_local])
    print(sess.run(real_attention))
```

The output is:

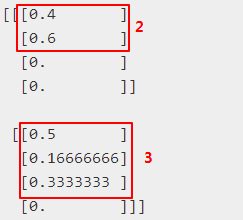

```
[[[0.4       ]
  [0.6       ]
  [0.        ]
  [0.        ]]

 [[0.5       ]
  [0.16666666]
  [0.3333333 ]
  [0.        ]]]
```
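These values can be checked by hand. Writing $a_{b,t}$ for the attention of sequence $b$ at step $t$ (counting from 0), $l_b$ for its valid length and $T$ for the maximum length, the function computes the following, which for the example data gives exactly the printed numbers:

$$
\hat{a}_{b,t} = \frac{m_{b,t}\, a_{b,t}}{\sum_{t'=0}^{T-1} m_{b,t'}\, a_{b,t'}}, \qquad
m_{b,t} = \begin{cases} 1 & t < l_b \\ 0 & t \ge l_b \end{cases}
$$

$$
\begin{aligned}
\text{sequence 1 } (l_1 = 2):&\quad \tfrac{0.2}{0.2+0.3} = 0.4,\ \ \tfrac{0.3}{0.5} = 0.6 \\
\text{sequence 2 } (l_2 = 3):&\quad \tfrac{0.3}{0.3+0.1+0.2} = 0.5,\ \ \tfrac{0.1}{0.6} \approx 0.1667,\ \ \tfrac{0.2}{0.6} \approx 0.3333
\end{aligned}
$$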

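From the realAttention() function, you can see that the key to calculating the real attention values of a variable-length sequence is the mask: it zeroes out the padded time steps, and the remaining weights are renormalized so that the valid steps of each sequence sum to 1.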
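To make the role of the mask concrete, the NumPy sketch below measures how much attention falls on padding when no mask is used, and what that does to a context vector. The hidden states `h` are toy values invented for illustration (padded steps are deliberately set to 100.0 so that leakage is easy to spot); they are not part of the original example.

```python
import numpy as np

# Attention from the example with the trailing size-1 axis dropped: [batch_size, max_length]
a = np.array([[0.2, 0.3, 0.4, 0.1],
              [0.3, 0.1, 0.2, 0.4]])
l = np.array([2, 3])
# Boolean mask, True where t < length (the same rule tf.sequence_mask uses)
mask = np.arange(a.shape[1])[None, :] < l[:, None]

# Share of the attention that falls on padding when no mask is applied
padding_mass = (a * ~mask).sum(axis=1)
print(padding_mass)  # [0.5 0.4]

# Toy hidden states h[b, t, d]: valid steps are 1.0, padded steps are set to 100.0
h = np.ones((2, 4, 3))
h[~mask] = 100.0

# Context vector = attention-weighted sum of hidden states over time
context_unmasked = (a[:, :, None] * h).sum(axis=1)
masked = a * mask
weights = masked / masked.sum(axis=1, keepdims=True)
context_masked = (weights[:, :, None] * h).sum(axis=1)
print(context_unmasked[:, 0])  # padding leaks in: about 50.5 and 40.6
print(context_masked[:, 0])    # only valid steps contribute: about 1.0 and 1.0
```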

Hi, I think the sequence mask should be applied BEFORE the softmax. Correct?

Yes, we should apply the sequence mask before the softmax. This article is only an example of how to process variable-length attention; you should modify it accordingly in your own model.
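A minimal NumPy sketch of masking before the softmax, assuming `scores` are hypothetical raw attention logits of shape [batch_size, max_length] (the values below are made up). `masked_softmax` and `softmax_then_mask` are illustrative helper names. In exact arithmetic both orders give the same weights, because the softmax normalizer cancels when the masked probabilities are renormalized. Masking the logits first is still the safer choice: padded logits never enter the computation, whereas with softmax-then-mask a very large padded logit can underflow every valid probability to 0 and turn the renormalization into 0/0. In TensorFlow, a common way to do the same is to replace the padded logits with a large negative number before calling `tf.nn.softmax`.

```python
import numpy as np

def masked_softmax(scores, lengths):
    # scores: [batch_size, max_length] raw logits; mask padded steps BEFORE softmax
    mask = np.arange(scores.shape[1])[None, :] < np.asarray(lengths)[:, None]
    # Padded logits become -inf, so exp() gives exactly 0 for them
    masked_scores = np.where(mask, scores, -np.inf)
    # Subtract the row max (taken over valid steps only) for numerical stability
    masked_scores = masked_scores - masked_scores.max(axis=1, keepdims=True)
    e = np.exp(masked_scores)
    return e / e.sum(axis=1, keepdims=True)

def softmax_then_mask(scores, lengths):
    # The article's order: softmax over all steps, then mask and renormalize
    e = np.exp(scores - scores.max(axis=1, keepdims=True))
    p = e / e.sum(axis=1, keepdims=True)
    mask = np.arange(scores.shape[1])[None, :] < np.asarray(lengths)[:, None]
    p = p * mask
    return p / p.sum(axis=1, keepdims=True)

scores = np.array([[1.0, 2.0, 0.5, -1.0],
                   [0.3, -0.2, 1.5, 3.0]])
l = np.array([2, 3])
print(np.allclose(masked_softmax(scores, l), softmax_then_mask(scores, l)))  # True
```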