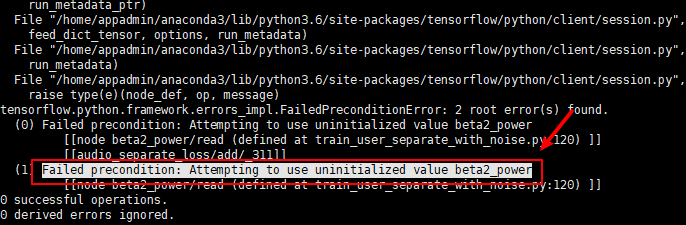

When training a TensorFlow model, you may get this error: Failed precondition: Attempting to use uninitialized value. In this tutorial, we will show you how to fix it.

Look at our example code:

    sess.run(tf.global_variables_initializer())

    ......

    update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
    with tf.control_dependencies(update_ops):
        normal_optimizer = tf.train.AdamOptimizer(FLAGS.lr, name='audio_sep_adam')
        normal_grads_and_vars = normal_optimizer.compute_gradients(sep_model.loss)
        train_normal_op = normal_optimizer.apply_gradients(normal_grads_and_vars, global_step=global_step)
        train_op = train_normal_op

If we run this code, we will get this error.

Why does this uninitialized value error occur?

To understand the reason, we should know what sess.run(tf.global_variables_initializer()) does.

sess.run(tf.global_variables_initializer()) initializes all global variables that exist in the TensorFlow graph at the moment it is called. Variables created later are not covered. Here is a detailed introduction:

An Explain to sess.run(tf.global_variables_initializer()) for Beginners – TensorFlow Tutorial

What about normal_optimizer = tf.train.AdamOptimizer(FLAGS.lr, name = 'audio_sep_adam')?

The Adam optimizer also creates some variables of its own (they are added to the graph when apply_gradients() is called). We can view these variables by following this tutorial:

List All Trainable and Untrainable Variables in TensorFlow – TensorFlow Tutorial

In the example code above, sess.run(tf.global_variables_initializer()) is called before tf.train.AdamOptimizer(), so the variables created by Adam are left uninitialized.
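To see which variables are missed, here is a minimal sketch. It is not the original training script: the variable `w`, the toy loss, the optimizer name `demo_adam` and the helper `adam_variable_report()` are made up for illustration, and it assumes the TF1-style API is reached through `tensorflow.compat.v1` so that it can also run on TensorFlow 2.x. It creates the initializer before the optimizer, then asks `tf.report_uninitialized_variables()` what is still uninitialized.

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()  # assumption: use TF1-style graphs and sessions


def adam_variable_report():
    """Return (variables added by Adam, variables missed by the early initializer)."""
    graph = tf.Graph()
    with graph.as_default():
        # Hypothetical toy model: one variable and a quadratic loss.
        w = tf.get_variable("w", shape=[2], initializer=tf.zeros_initializer())
        loss = tf.reduce_sum(tf.square(w - 1.0))

        # Initializer created BEFORE the optimizer: it only covers "w".
        early_init = tf.global_variables_initializer()
        before = {v.op.name for v in tf.global_variables()}

        # minimize() calls apply_gradients(), which creates Adam's own variables.
        optimizer = tf.train.AdamOptimizer(0.01, name="demo_adam")
        optimizer.minimize(loss)
        after = {v.op.name for v in tf.global_variables()}

        with tf.Session(graph=graph) as sess:
            sess.run(early_init)
            names = sess.run(tf.report_uninitialized_variables())
            uninitialized = [n.decode() for n in names]

    return sorted(after - before), sorted(uninitialized)


if __name__ == "__main__":
    created, uninitialized = adam_variable_report()
    print("Created by Adam:", created)
    print("Still uninitialized:", uninitialized)
```

In this sketch the two lists should match: everything Adam added after the initializer was built is still uninitialized, while `w` is not in the list.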

How to fix this error?

This error is easy to fix: call sess.run(tf.global_variables_initializer()) after tf.train.AdamOptimizer() and apply_gradients(), so that all global variables, including the ones created by Adam, are initialized.

Here is an example:

    update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
    with tf.control_dependencies(update_ops):
        normal_optimizer = tf.train.AdamOptimizer(FLAGS.lr, name='audio_sep_adam')
        normal_grads_and_vars = normal_optimizer.compute_gradients(sep_model.loss)
        train_normal_op = normal_optimizer.apply_gradients(normal_grads_and_vars, global_step=global_step)
        train_op = train_normal_op

    ......

    sess.run(tf.global_variables_initializer())

If we run this code, we will find that the error is fixed.
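The effect of the ordering can be checked with a small self-contained sketch. The toy variable `w`, the loss, the optimizer name `demo_adam` and the helper `train_step_succeeds()` are hypothetical and only stand in for the real model; the code assumes the TF1-style API is available as `tensorflow.compat.v1`. It runs one training step after either an initializer created before the optimizer or one created after it.

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()  # assumption: use TF1-style graphs and sessions


def train_step_succeeds(init_after_optimizer):
    """Run one Adam step; return False if TensorFlow reports uninitialized values."""
    graph = tf.Graph()
    with graph.as_default():
        # Hypothetical toy model standing in for the real one.
        w = tf.get_variable("w", shape=[2], initializer=tf.zeros_initializer())
        loss = tf.reduce_sum(tf.square(w - 1.0))

        # Created before the optimizer: does not cover Adam's variables.
        early_init = tf.global_variables_initializer()
        train_op = tf.train.AdamOptimizer(0.01, name="demo_adam").minimize(loss)
        # Created after the optimizer: covers every global variable in the graph.
        late_init = tf.global_variables_initializer()

        with tf.Session(graph=graph) as sess:
            sess.run(late_init if init_after_optimizer else early_init)
            try:
                sess.run(train_op)
            except tf.errors.FailedPreconditionError:
                return False
    return True


if __name__ == "__main__":
    print("init before optimizer:", train_step_succeeds(False))
    print("init after optimizer:", train_step_succeeds(True))
```

Only the initializer created after the optimizer lets the training step run, which is the same ordering used in the fixed example.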