When using PyTorch's torch.optim.lr_scheduler.CyclicLR(), you may get this error: ValueError: optimizer must support momentum with `cycle_momentum` option enabled. In this tutorial, we will show you how to fix it.

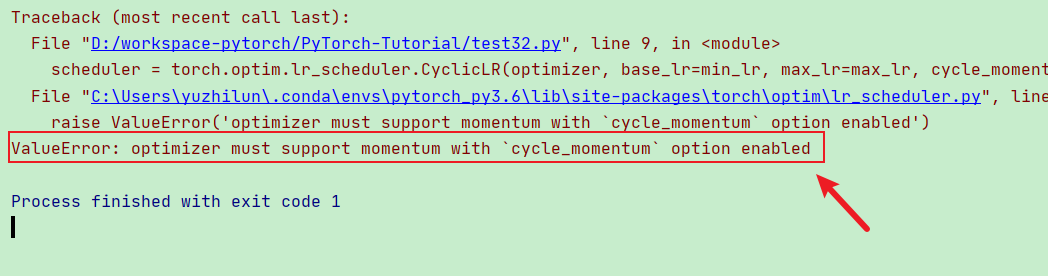

This error looks like:

How to fix this error?

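This error occurs because we combine torch.optim.lr_scheduler.CyclicLR() with torch.optim.AdamW(). By default, CyclicLR() uses cycle_momentum = True and expects the optimizer to expose a momentum setting. However, the AdamW optimizer has no momentum argument (it uses betas instead). To fix the error, we should set cycle_momentum = False in torch.optim.lr_scheduler.CyclicLR().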
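To see the difference between the two kinds of optimizers, we can inspect their default hyperparameters. This is only a minimal sketch: the toy `nn.Linear` model and the learning rates are made up for illustration, and the exact check that CyclicLR() performs internally may differ between PyTorch versions.

```python
import torch
from torch import nn

# Hypothetical toy model, used only so the optimizers have parameters.
model = nn.Linear(4, 2)

adamw = torch.optim.AdamW(model.parameters(), lr=1e-3)
sgd = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)

# optimizer.defaults holds the hyperparameters the optimizer was built with.
adamw_has_momentum = "momentum" in adamw.defaults
adamw_has_betas = "betas" in adamw.defaults
sgd_has_momentum = "momentum" in sgd.defaults

print("AdamW has momentum:", adamw_has_momentum)
print("AdamW has betas:", adamw_has_betas)
print("SGD has momentum:", sgd_has_momentum)
```

AdamW reports no momentum entry, while SGD does, which is consistent with the error message above.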

For example:

    # model (assumed to be an nn.Module) and LR are defined earlier
    optimizer = torch.optim.AdamW(model.parameters(), lr=LR, weight_decay=2e-5, amsgrad=True)
    max_lr = 1e-3
    min_lr = max_lr / 4
    # cycle_momentum=False because AdamW has no momentum setting
    scheduler = torch.optim.lr_scheduler.CyclicLR(optimizer, base_lr=min_lr, max_lr=max_lr, cycle_momentum=False, mode='triangular2', step_size_up=17000*6)

After setting cycle_momentum = False, this error is fixed.
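If you want to try the fix without a full training setup, the following self-contained sketch may help. It is not the original training code: the toy `nn.Linear` model, the learning rate passed to AdamW, and the small `step_size_up=4` are assumptions chosen so that one full cycle finishes in 8 steps.

```python
import torch
from torch import nn

# Hypothetical toy model standing in for the real one.
model = nn.Linear(4, 2)
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3, weight_decay=2e-5, amsgrad=True)

max_lr = 1e-3
min_lr = max_lr / 4

# cycle_momentum=False avoids the ValueError with AdamW.
scheduler = torch.optim.lr_scheduler.CyclicLR(
    optimizer,
    base_lr=min_lr,
    max_lr=max_lr,
    cycle_momentum=False,
    mode="triangular2",
    step_size_up=4,  # small value for illustration only
)

lrs = []
for _ in range(8):
    # In real training, the forward and backward passes go here.
    optimizer.step()
    scheduler.step()
    lrs.append(scheduler.get_last_lr()[0])

print(lrs)
```

The learning rate should climb from min_lr to max_lr over the first 4 steps and return to min_lr after 8 steps, without raising the error.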