In this tutorial, we will introduce how to use TensorFlow to implement the convolution module in Conformer.

The structure of the convolution module in Conformer

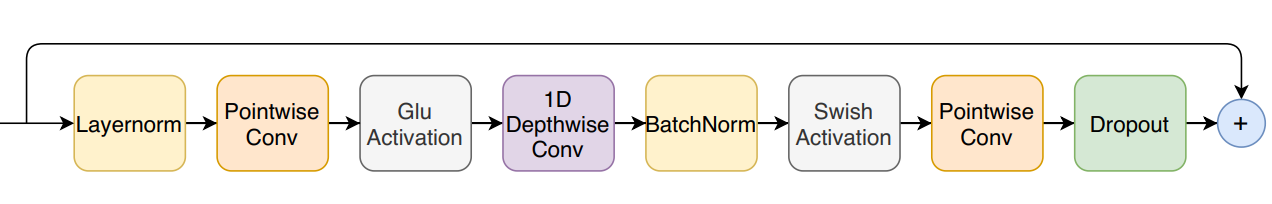

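The structure of this convolution module is shown above. There are 9 steps in this module:

1. Layer normalization
2. Pointwise convolution
3. GLU (gated linear unit) activation
4. 1D depthwise convolution
5. Batch normalization
6. Swish activation
7. Pointwise convolution
8. Dropout
9. Residual add (the module input is added to the output)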
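To make the data flow of these 9 steps concrete, here is a minimal NumPy sketch of one forward pass. It is not the TensorFlow implementation: the function name `conformer_conv_module` is hypothetical, the weights are random, biases and the learned scale/shift of both normalizations are omitted, batch norm uses the current batch statistics, and dropout is treated as the identity (as at inference time).

```python
import numpy as np


# Hypothetical NumPy sketch of the 9 steps (random weights, no biases,
# no learned scale/shift, dropout treated as the identity).
def conformer_conv_module(x, expansion_factor=2, kernel_size=3, seed=0):
    rng = np.random.default_rng(seed)
    batch, time, dim = x.shape
    hidden = dim * expansion_factor // 2  # channels left after the GLU

    # 1. layer norm over the feature axis (small epsilon for stability)
    h = (x - x.mean(-1, keepdims=True)) / np.sqrt(x.var(-1, keepdims=True) + 1e-6)
    # 2. pointwise conv (kernel_size=1) is a matrix multiply on the last axis
    h = h @ rng.standard_normal((dim, dim * expansion_factor))
    # 3. GLU: first half of the channels gated by sigmoid of the second half
    a, b = np.split(h, 2, axis=-1)
    h = a / (1.0 + np.exp(-b))
    # 4. 1D depthwise conv with 'same' padding: one kernel per channel
    kernel = rng.standard_normal((kernel_size, hidden))
    left = (kernel_size - 1) // 2
    padded = np.pad(h, ((0, 0), (left, kernel_size - 1 - left), (0, 0)))
    h = sum(padded[:, i:i + time, :] * kernel[i] for i in range(kernel_size))
    # 5. batch norm using the statistics of the current batch
    h = (h - h.mean((0, 1), keepdims=True)) / np.sqrt(h.var((0, 1), keepdims=True) + 1e-3)
    # 6. swish: x * sigmoid(x)
    h = h / (1.0 + np.exp(-h))
    # 7. pointwise conv back to the input dimension
    h = h @ rng.standard_normal((hidden, dim))
    # 8. dropout: identity here (inference behaviour)
    # 9. residual connection
    return h + x


x = np.random.default_rng(1).uniform(-10, 10, size=(3, 12, 80))
print(conformer_conv_module(x).shape)  # (3, 12, 80)
```

Only the two pointwise convolutions mix channels; the depthwise convolution in step 4 mixes information along the time axis within each channel.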

How to implement it using TensorFlow?

Here is example code for it using TensorFlow 1.x.

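```python
import tensorflow as tf  # TensorFlow 1.x (uses tf.contrib, tf.layers, tf.Session)


class ConvolutionLayer():

    def __init__(self, expansion_factor=2, kernel_size=3):
        self.expansion_factor = expansion_factor
        self.kernel_size = kernel_size

    def __call__(self, *args, **kwargs):
        return self.forward(*args, **kwargs)

    def glu(self, inputs):
        # Gated linear unit: split the channels in half and gate the
        # first half with the sigmoid of the second half.
        mat1, mat2 = tf.split(inputs, 2, axis=-1)
        mat2 = tf.nn.sigmoid(mat2)
        return tf.math.multiply(mat1, mat2)

    def depthwise_conv1d(self, inputs):
        # 1D depthwise conv: each channel is convolved with its own kernel.
        # tf.layers has no depthwise conv1d, so add a width axis of size 1
        # and use tf.nn.depthwise_conv2d.
        channels = inputs.get_shape().as_list()[-1]
        with tf.variable_scope(None, default_name="depthwise_conv1d"):
            kernel = tf.get_variable("kernel", [self.kernel_size, 1, channels, 1])
        outputs = tf.expand_dims(inputs, axis=2)  # (batch, time, 1, channels)
        outputs = tf.nn.depthwise_conv2d(outputs, kernel, strides=[1, 1, 1, 1], padding='SAME')
        return tf.squeeze(outputs, axis=2)  # (batch, time, channels)

    def forward(self, inputs, filters=512, training=True, dropout_p=0.1):
        """
        Inputs: inputs
            inputs (batch, time, dim): Tensor containing input sequences
        Outputs: outputs
            outputs (batch, time, dim): Tensor produced by the conformer convolution module
        """
        dim = inputs.get_shape().as_list()[-1]
        # 1. LayerNormalization
        outputs = tf.contrib.layers.layer_norm(inputs=inputs, begin_norm_axis=-1, begin_params_axis=-1)
        # 2. pointwise conv
        outputs = tf.layers.conv1d(outputs, filters * self.expansion_factor, kernel_size=1, strides=1)
        # 3. glu (halves the number of channels)
        outputs = self.glu(outputs)
        # 4. 1D depthwise conv
        outputs = self.depthwise_conv1d(outputs)
        # 5. batch norm (when training, run the ops in tf.GraphKeys.UPDATE_OPS
        #    so the moving mean and variance are updated)
        outputs = tf.layers.batch_normalization(outputs, axis=-1, training=training)
        # 6. swish
        outputs = tf.nn.swish(outputs)
        # 7. pointwise conv back to the input dim, so the residual add works
        outputs = tf.layers.conv1d(outputs, dim, kernel_size=1, strides=1)
        # 8. dropout (tf.layers.dropout does nothing unless training=True)
        outputs = tf.layers.dropout(outputs, rate=dropout_p, training=training)
        # 9. add (residual connection)
        return outputs + inputs
```

In this code, each of the 9 steps is marked with a numbered comment.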
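Step 4 is a depthwise convolution: each channel is filtered by its own kernel, and channels are only mixed by the pointwise convolutions in steps 2 and 7. A regular `tf.layers.conv1d` mixes every input channel into every output channel, which is a different (and much larger) operation. The small sketch below counts the weights (biases ignored) for the kernel size 3 and the 80 channels used in this tutorial; the helper names are hypothetical.

```python
# Hypothetical helpers: weight counts (biases ignored) of 1D convolutions.
def standard_conv1d_weights(kernel_size, in_channels, out_channels):
    # every output channel sees every input channel
    return kernel_size * in_channels * out_channels


def depthwise_conv1d_weights(kernel_size, channels, depth_multiplier=1):
    # each input channel gets its own kernel(s); channels are never mixed
    return kernel_size * channels * depth_multiplier


print(standard_conv1d_weights(3, 80, 80))  # 19200
print(depthwise_conv1d_weights(3, 80))     # 240
```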

Then, we can evaluate it.

```python
if __name__ == "__main__":
    import numpy as np

    batch_size, seq_len, dim = 3, 12, 80
    inputs = tf.random.uniform((batch_size, seq_len, dim), minval=-10, maxval=10)

    conv_layer = ConvolutionLayer()
    outputs = conv_layer(inputs, filters=80)

    init = tf.global_variables_initializer()
    init_local = tf.local_variables_initializer()

    with tf.Session() as sess:
        sess.run([init, init_local])
        np.set_printoptions(precision=4, suppress=True)
        a = sess.run(outputs)
        print(a.shape)
        print(a)
```

In this code, the input has shape 3 × 12 × 80, which means batch_size = 3, time = 12 and dim = 80.

Running this code, we get an output tensor with the same shape, 3 × 12 × 80 (the values change on every run because the input is random):

```
(3, 12, 80)
[[[ 3.8787 -6.4512 14.3416 ... -7.5793 -5.3391 2.4872]
  [-13.6301 -5.2775 2.6721 ... -10.4354 -4.342 -3.813 ]
  [-19.9332 5.1199 22.6567 ... 4.5053 11.7114 -4.19 ]
  ...
  ...
  [ -4.1416 -8.6751 11.1841 ... -4.1469 -6.7145 -5.4404]
  [ -7.6805 7.1907 7.1883 ... -5.4993 8.4704 19.3019]
  [ -4.9997 3.0497 -12.2531 ... 2.2781 -5.6913 -6.9605]]]
```
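The intermediate shapes explain why the output has the same shape as the input. The pure-Python sketch below (the helper `trace_shapes` is hypothetical) lists the shape after each step for the 3 × 12 × 80 input, assuming the last pointwise convolution projects back to `dim`, which is what makes the residual add in step 9 possible.

```python
# Hypothetical helper: shape after each step for an input of (batch, time, dim),
# assuming the step-7 pointwise conv projects back to dim.
def trace_shapes(batch, time, dim, expansion_factor=2):
    hidden = dim * expansion_factor // 2  # channels after the GLU
    return [
        ("1. layer norm", (batch, time, dim)),
        ("2. pointwise conv", (batch, time, dim * expansion_factor)),
        ("3. GLU", (batch, time, hidden)),
        ("4. 1D depthwise conv", (batch, time, hidden)),
        ("5. batch norm", (batch, time, hidden)),
        ("6. swish", (batch, time, hidden)),
        ("7. pointwise conv", (batch, time, dim)),
        ("8. dropout", (batch, time, dim)),
        ("9. residual add", (batch, time, dim)),
    ]


for step, shape in trace_shapes(3, 12, 80):
    print(f"{step:<22}{shape}")
```

With `expansion_factor = 2`, the pointwise convolution in step 2 doubles the channels to 160 and the GLU halves them back to 80.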