Pre-net is an important part of Tacotron. In this tutorial, we will show how to create it using TensorFlow.

Pre-net in Tacotron

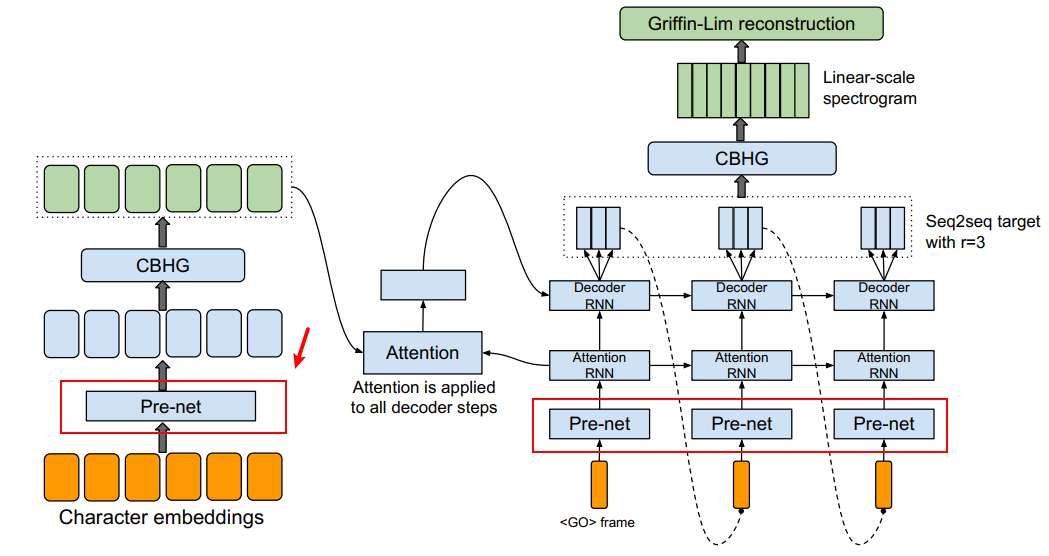

The structure of Tacotron looks like:

We can see that the Pre-net is the first layer that processes the inputs.

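From the paper Tacotron: Towards End-to-End Speech Synthesis, we can find the Pre-net configuration:

Pre-net is: FC-256-ReLU -> Dropout(0.5) -> FC-128-ReLU -> Dropout(0.5)

That is, a fully connected layer with 256 units and ReLU activation, dropout with rate 0.5, a fully connected layer with 128 units and ReLU activation, and dropout with rate 0.5 again.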
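To make this notation concrete, here is a minimal NumPy sketch of the forward pass it describes. It is only an illustration, not the TensorFlow implementation used in this tutorial: the function name `prenet_forward`, the random weight initialization, and the input width of 80 are all assumptions made for the example.

```python
import numpy as np

def prenet_forward(x, weights, biases, drop_rate=0.5, training=True, rng=None):
    """Apply FC -> ReLU -> Dropout once per (W, b) pair.

    Hypothetical helper for illustration; not taken from any Tacotron code base.
    """
    if rng is None:
        rng = np.random.default_rng(0)
    for w, b in zip(weights, biases):
        x = np.maximum(x @ w + b, 0.0)               # fully connected layer + ReLU
        if training and drop_rate > 0.0:
            keep = rng.random(x.shape) >= drop_rate  # keep each unit with prob. 1 - drop_rate
            x = x * keep / (1.0 - drop_rate)         # scale the kept units
    return x

rng = np.random.default_rng(42)
in_dim, sizes = 80, [256, 128]   # in_dim is an assumed input width
dims = [in_dim] + sizes
weights = [rng.normal(0.0, 0.1, size=(dims[i], dims[i + 1])) for i in range(len(sizes))]
biases = [np.zeros(n) for n in sizes]

batch = rng.normal(size=(32, in_dim))   # 32 input vectors
out = prenet_forward(batch, weights, biases, training=True, rng=rng)
print(out.shape)  # (32, 128)
```

Whatever the input width, the last layer size (128) determines the width of the Pre-net output.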

How to create Pre-net using TensorFlow?

We will base our implementation on this code:

https://github.com/zuoxiang95/tacotron-1/blob/master/models/modules.py

Here is the Pre-net source code:

    import tensorflow as tf  # TensorFlow 1.x API

    def prenet(inputs, drop_rate=0.5, layer_sizes=[256, 128], scope=None):
        x = inputs
        with tf.variable_scope(scope or 'prenet'):
            for i, size in enumerate(layer_sizes):
                # Fully connected layer with ReLU activation
                dense = tf.layers.dense(x, units=size, activation=tf.nn.relu, name='dense_%d' % (i+1))
                # tf.layers.dropout() only drops units when training=True (default: False)
                x = tf.layers.dropout(dense, rate=drop_rate, name='dropout_%d' % (i+1))
        return x

Now we will evaluate it:

    # Random input: a batch of 32 vectors with 128 features each
    w = tf.Variable(tf.glorot_uniform_initializer()([32, 128]), name="w")
    v = prenet(w, scope='prenet')

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        x = sess.run(v)
        print(x.shape)
        print(x)

Running this code, we will see:

    (32, 128)
    [[0.10063383 0.00535887 0. ... 0. 0. 0.07058308]
     [0. 0. 0. ... 0. 0. 0. ]
     [0. 0.08158886 0. ... 0.04584407 0. 0.04442355]
     ...
     [0.0752174 0. 0. ... 0.02113288 0. 0.02786144]
     [0. 0. 0. ... 0.01715038 0. 0.04262433]
     [0. 0.03768676 0. ... 0.13155384 0. 0.09612481]]

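We should notice: when we test our model, dropout must be disabled, for example by setting drop_rate = 0.0. In training mode, tf.layers.dropout() scales the kept activations (not the weights) by 1 / (1 - drop_rate), so no extra rescaling is needed at test time.

Also note that tf.layers.dropout() only drops units when it is called with training=True. Its default is training=False, so the prenet() above returns the dense outputs unchanged, and the zeros in the printed result come from ReLU. To apply dropout during training, pass training=True to tf.layers.dropout().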
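The scaling by 1 / (1 - drop_rate) can be checked with a small pure-Python sketch. The helper `inverted_dropout` below is a hypothetical stand-in that mimics the documented behavior of tf.layers.dropout() (drop each unit with probability rate and scale the rest when training, pass the inputs through otherwise); it is not TensorFlow's implementation.

```python
import random

def inverted_dropout(values, rate, training, rng):
    """Hypothetical stand-in for dropout on a flat list of activations."""
    if not training or rate == 0.0:
        return list(values)              # inference: inputs pass through unchanged
    scale = 1.0 / (1.0 - rate)
    # Keep each value with probability 1 - rate and scale it; otherwise zero it.
    return [v * scale if rng.random() >= rate else 0.0 for v in values]

rng = random.Random(0)
activations = [1.0] * 100000

train_out = inverted_dropout(activations, rate=0.5, training=True, rng=rng)
test_out = inverted_dropout(activations, rate=0.5, training=False, rng=rng)

train_mean = sum(train_out) / len(train_out)
test_mean = sum(test_out) / len(test_out)

print(sorted(set(train_out)))   # kept units become 2.0, dropped units become 0.0
print(train_mean, test_mean)    # the training mean stays close to the test mean
```

Because the kept units are scaled up during training, the average activation stays roughly the same in both modes, which is why nothing has to be rescaled at test time.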