In this tutorial, we will introduce how to use the Ranger optimizer in PyTorch. There are two ways to do it, and we will introduce them one by one.

Method 1: use the pytorch_ranger package

First, install it:

```bash
pip install pytorch_ranger
```

Its source code is available here:

https://github.com/mpariente/Ranger-Deep-Learning-Optimizer

We can use the Ranger optimizer as follows:

```python
import torch
from pytorch_ranger import Ranger

# A single 2x2 trainable parameter stands in for a model's parameters
params = [torch.nn.Parameter(torch.randn(2, 2))]
LR = 0.01

optimizer = Ranger(params, lr=LR)
print(optimizer)

# Multiply the learning rate by 0.97 after every epoch
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=1, gamma=0.97)

for epoch in range(200):
    data_size = 400
    for i in range(data_size):
        optimizer.zero_grad()
        # Toy loss on the optimized parameter, so step() has gradients to use
        loss = params[0].pow(2).mean()
        loss.backward()
        optimizer.step()
    scheduler.step()
```

Run this code, you will see:

```
Ranger (
Parameter Group 0
    N_sma_threshhold: 5
    alpha: 0.5
    betas: (0.95, 0.999)
    eps: 1e-05
    k: 6
    lr: 0.01
    step_counter: 0
    weight_decay: 0
)
```
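The StepLR scheduler in the loop above multiplies the learning rate by gamma once every step_size epochs. The small pure-Python sketch below computes that closed form for LR = 0.01 and gamma = 0.97, so you can see which learning rate each epoch uses. The helper step_lr is hypothetical and not part of PyTorch.

```python
def step_lr(base_lr: float, gamma: float, step_size: int, epoch: int) -> float:
    """Closed-form StepLR value: decay base_lr by gamma once every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


# Learning rate used during each of the 200 epochs of the training loop
lrs = [step_lr(0.01, 0.97, 1, epoch) for epoch in range(200)]
print(lrs[0], lrs[1], lrs[-1])
```

With these settings the learning rate shrinks by 3% per epoch and is down to about 2.3e-5 in the last epoch.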

Method 2: use pytorch-forecasting

The pytorch-forecasting package also provides a Ranger optimizer, with this signature:

```python
pytorch_forecasting.optim.Ranger(params: Union[Iterable[torch.Tensor], Iterable[dict]], lr: float = 0.001, alpha: float = 0.5, k: int = 6, N_sma_threshhold: int = 5, betas: Tuple[float, float] = (0.95, 0.999), eps: float = 1e-05, weight_decay: float = 0)
```
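Ranger combines RAdam with Lookahead, and alpha and k in this signature are the Lookahead parameters: every k steps, the slow weights move a fraction alpha toward the fast weights, and the fast weights restart from the slow ones. The scalar sketch below illustrates only that Lookahead rule, assuming its standard formulation; the function lookahead and the constant-step inner optimizer are hypothetical and are not the library's code.

```python
def lookahead(inner_step, w0: float, alpha: float = 0.5, k: int = 6, n_steps: int = 12):
    """Scalar Lookahead: return (fast, slow) weights after n_steps inner updates."""
    slow = fast = w0
    for t in range(1, n_steps + 1):
        fast = inner_step(fast)  # fast weights: one step of the inner optimizer
        if t % k == 0:  # every k steps:
            slow = slow + alpha * (fast - slow)  # move slow weights toward fast ones
            fast = slow  # restart fast weights from the slow ones
    return fast, slow


# Hypothetical inner optimizer that always moves the weight by -1.0
print(lookahead(lambda w: w - 1.0, 0.0))  # (-6.0, -6.0)
```

With alpha = 0.5 the weight ends at -6.0 after 12 inner steps instead of -12.0, because only half of each k-step excursion is accepted.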

We can use it as follows:

```python
import torch
from pytorch_forecasting.optim import Ranger

# A single 2x2 trainable parameter stands in for a model's parameters
params = [torch.nn.Parameter(torch.randn(2, 2))]
LR = 0.01

optimizer = Ranger(params, lr=LR)
print(optimizer)

# Multiply the learning rate by 0.97 after every epoch
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=1, gamma=0.97)

for epoch in range(200):
    data_size = 400
    for i in range(data_size):
        optimizer.zero_grad()
        loss = params[0].pow(2).mean()
        loss.backward()
        # No closure is passed to step() here
        optimizer.step()
    scheduler.step()
```

Run this code, we will see:

```
Ranger (
Parameter Group 0
    N_sma_threshhold: 5
    alpha: 0.5
    betas: (0.95, 0.999)
    eps: 1e-05
    k: 6
    lr: 0.01
    step_counter: 0
    weight_decay: 0
)
```

We then get an error:

```
TypeError: 'NoneType' object is not callable
```

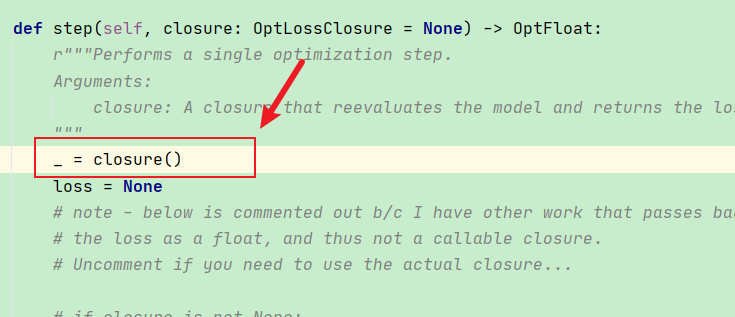

How to fix this NoneType error?

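From its source code, we can see that step() calls closure() to compute the loss. We did not pass a closure, so closure is None, and calling None raises this TypeError. To fix it, we can define a closure() function that computes the loss and pass it to optimizer.step().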
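The failure can be reproduced without PyTorch. In the hypothetical sketch below, step_without_check calls its closure argument unconditionally, as the failing step() appears to do in the pytorch-forecasting version used above, while step_with_check only calls it when a closure is given. Neither function is library code.

```python
def step_without_check(closure=None):
    # Calls closure unconditionally, so it fails when no closure is passed
    loss = closure()
    return loss


def step_with_check(closure=None):
    # Only evaluates the closure when one was actually provided
    loss = None
    if closure is not None:
        loss = closure()
    return loss


try:
    step_without_check()
except TypeError as err:
    print(err)  # 'NoneType' object is not callable

print(step_with_check())  # None
print(step_with_check(lambda: 0.25))  # 0.25
```

Built-in optimizers such as torch.optim.Adam use the guarded pattern, which is why passing a closure is optional for them.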

The tutorial Understand PyTorch optimizer.step() with Examples – PyTorch Tutorial shows how to do this:

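```python
import torch
from pytorch_forecasting.optim import Ranger

# Hypothetical toy setup: a linear model, MSE loss and random (input, target) batches
model = torch.nn.Linear(4, 1)
loss_fn = torch.nn.MSELoss()
dataset = [(torch.randn(8, 4), torch.randn(8, 1)) for _ in range(10)]
optimizer = Ranger(model.parameters(), lr=0.01)

for inputs, target in dataset:
    # The closure recomputes the loss and its gradients; step() calls it
    def closure():
        optimizer.zero_grad()
        output = model(inputs)
        loss = loss_fn(output, target)
        loss.backward()
        return loss

    optimizer.step(closure)
```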