The softmax function is widely used in deep learning classification problems. Because it exponentiates its inputs, a naive implementation can overflow or underflow. In this tutorial, we will use an example to implement a softmax function that avoids both problems.

What is the softmax function?

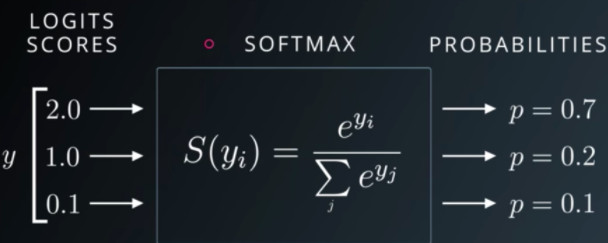

Softmax is defined as:

softmax(x)_i = exp(x_i) / sum_j exp(x_j)

A useful property of the softmax function is that shifting every input by the same constant does not change the output:

softmax(x) = softmax(x - a)

where a is a scalar.
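
To see why this holds, the short derivation below writes out component i of softmax(x - a) using the standard definition of softmax. The index names i and j are chosen here for illustration. The common factor e^{-a} cancels between the numerator and the denominator:

$$
\mathrm{softmax}(x - a)_i
= \frac{e^{x_i - a}}{\sum_j e^{x_j - a}}
= \frac{e^{-a}\, e^{x_i}}{e^{-a} \sum_j e^{x_j}}
= \frac{e^{x_i}}{\sum_j e^{x_j}}
= \mathrm{softmax}(x)_i
$$

Choosing a = max(x) makes the largest exponent equal to zero, so no term can exceed exp(0) = 1.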

How to implement softmax without underflow and overflow?
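
As a starting point, the sketch below shows what can go wrong when the definition is applied directly. `naive_softmax` is a hypothetical name used only for this illustration, and `np.errstate` is there just to silence NumPy's floating-point warnings so the results are easy to inspect.

```python
import numpy as np

def naive_softmax(z):
    """Direct translation of the definition, with no stability shift (illustration only)."""
    exps = np.exp(z)
    return exps / np.sum(exps)

# Silence the overflow / invalid-value warnings so only the results are shown.
with np.errstate(over="ignore", invalid="ignore"):
    # exp(1000) overflows to inf in float64, so the division becomes inf / inf.
    overflowed = naive_softmax(np.array([1000.0, 1001.0]))
    # exp(-1000) underflows to 0, so the division becomes 0 / 0.
    underflowed = naive_softmax(np.array([-1000.0, -1001.0]))

print(np.isnan(overflowed).all())   # True
print(np.isnan(underflowed).all())  # True
```

In both cases every output is NaN, even though the true softmax values are ordinary probabilities.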

We will use NumPy to implement a softmax function. The example code is:

    import numpy as np

    def softmax(z):
        """Computes softmax function.

        z: array of input values.

        Returns an array of outputs with the same shape as z."""
        # For numerical stability: shift z so that its maximum is 0.
        shiftz = z - np.max(z)
        exps = np.exp(shiftz)
        return exps / np.sum(exps)

This softmax code will not overflow, because after the shift the largest exponent is exp(0) = 1. Very negative shifted values may still underflow to 0, but that is harmless: the denominator is always at least 1, so the division never becomes 0 / 0. You can also implement the same approach in other programming languages, such as C, C++ or Java.
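
The `softmax` function above normalizes over the whole array, which suits a single vector of scores. For a 2-D batch in which each row is one sample, a row-wise variant is one possible extension. The sketch below is an assumption about how that might look, not part of the original code; `softmax_rows` is a hypothetical name.

```python
import numpy as np

def softmax_rows(z):
    """Row-wise stable softmax (hypothetical helper): normalizes along the last axis."""
    z = np.asarray(z, dtype=float)
    # Subtract each row's maximum so the largest exponent in every row is exp(0) = 1.
    shiftz = z - np.max(z, axis=-1, keepdims=True)
    exps = np.exp(shiftz)
    # keepdims=True keeps the row sums broadcastable against exps.
    return exps / np.sum(exps, axis=-1, keepdims=True)

# Inputs that would overflow or underflow without the shift.
batch = np.array([[1000.0, 1001.0, 1002.0],
                  [-1000.0, -1001.0, -1002.0]])
probs = softmax_rows(batch)
print(probs)
print(probs.sum(axis=-1))  # each row sums to 1, up to rounding
```

Here `keepdims=True` keeps the per-row maximum and sum as column vectors, so NumPy broadcasting applies them to each row separately.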