The Swish activation function is used in the Conformer model. In this tutorial, we will introduce how to implement it in PyTorch.

Implement Swish (SiLU) Activation in PyTorch

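Swish is defined as:

$$\mathrm{Swish}(x) = x \cdot \sigma(x) = \frac{x}{1 + e^{-x}}$$

where $\sigma$ is the sigmoid function.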
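To connect the formula Swish(x) = x · sigmoid(x) = x / (1 + e^(-x)) to code, here is a minimal sketch that evaluates the right-hand form directly with `torch.exp` and checks it against the `x * sigmoid(x)` form. The function name `swish_from_formula` is hypothetical and only used for illustration.

```python
import torch


def swish_from_formula(x: torch.Tensor) -> torch.Tensor:
    # Hypothetical helper: Swish(x) = x / (1 + exp(-x)), written directly from the definition
    return x / (1.0 + torch.exp(-x))


x = torch.linspace(-6.0, 6.0, steps=13)

# Both forms of the definition should agree up to floating-point error
print(torch.allclose(swish_from_formula(x), x * torch.sigmoid(x)))
```

In practice the `x * sigmoid(x)` form is the more common way to write it, since `torch.sigmoid` is a single, well-tested built-in.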

We can create a custom module to implement it.

For example:

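```python
import torch.nn as nn
from torch import Tensor


class Swish(nn.Module):
    """Swish (SiLU) activation: x * sigmoid(x)."""

    def __init__(self):
        super(Swish, self).__init__()

    def forward(self, inputs: Tensor) -> Tensor:
        # Multiply the input element-wise by its sigmoid
        return inputs * inputs.sigmoid()
```

Moreover, we can also use the built-in torch.nn.SiLU() in PyTorch 2.0.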
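As a quick sanity check, the sketch below compares the custom `Swish` module with the built-in `torch.nn.SiLU` module and the functional `torch.nn.functional.silu`, then drops SiLU into a small model. The layer sizes and the random input are arbitrary choices for illustration only.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch import Tensor


class Swish(nn.Module):
    # Same custom module as above, repeated so this snippet runs on its own
    def forward(self, inputs: Tensor) -> Tensor:
        return inputs * inputs.sigmoid()


torch.manual_seed(0)
x = torch.randn(4, 8)

custom = Swish()
builtin = nn.SiLU()

# The custom module, nn.SiLU and F.silu should agree element-wise
print(torch.allclose(custom(x), builtin(x)))
print(torch.allclose(builtin(x), F.silu(x)))

# SiLU can be used like any other activation layer inside a model
model = nn.Sequential(nn.Linear(8, 16), nn.SiLU(), nn.Linear(16, 2))
print(model(x).shape)  # torch.Size([4, 2])
```

Since the outputs match, using the built-in `nn.SiLU` avoids maintaining a custom class while keeping the same behavior.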

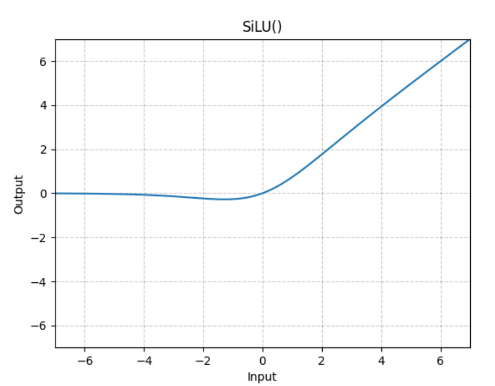

The effect of Swish is:
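A small numerical sketch of a few well-known properties of Swish: it is smooth, non-monotonic (it dips slightly below zero for negative inputs before approaching 0), bounded below, and close to the identity for large positive inputs. The grid range and resolution are arbitrary choices made for this illustration.

```python
import torch

# Dense grid that covers the negative region where Swish dips below zero
xs = torch.linspace(-5.0, 5.0, steps=10001, dtype=torch.float64)
ys = xs * torch.sigmoid(xs)

# 1) Non-monotonic and bounded below: the minimum is about -0.278 near x = -1.28
min_val, min_idx = ys.min(dim=0)
x_at_min = xs[min_idx]
print(f"minimum {min_val.item():.4f} at x = {x_at_min.item():.4f}")

# 2) Close to x for large positive inputs, close to 0 for large negative inputs
print(ys[-1].item(), ys[0].item())

# 3) Smooth at 0: the slope there is sigmoid(0) = 0.5 (ReLU has a kink at 0)
x0 = torch.tensor(0.0, dtype=torch.float64, requires_grad=True)
(x0 * torch.sigmoid(x0)).backward()
print(x0.grad.item())  # 0.5
```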

If you want to implement it in TensorFlow, you can read:

Swish (Silu) Activation Function in TensorFlow: An Introduction – TensorFlow Tutorial