L2 regularization can hurt training error and improve your model performance. However, l2 is related to batch size when training. To address this issue, we will discuss this topic in this tutorial.

L2 Regularization

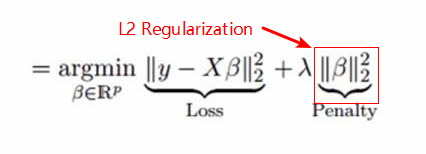

The formula of l2 regularization is defined as:

From the formula we can find the value of l2 regularization is related to all weights in model and is not related to the number of training sample or batch size.

How to implement l2 regularization?

There exsits to kinds of l2 regularization:

Form 1:

l2 = deta * tf.reduce_sum([ tf.nn.l2_loss(n) for n in tf.trainable_variables() if 'bias' not in n.name]) loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=self.scores, labels = self.input_y)) + l2

Form 2:

l2 = deta * tf.reduce_sum([ tf.nn.l2_loss(n) for n in tf.trainable_variables() if 'bias' not in n.name]) loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=self.scores, labels = self.input_y) + l2)

Which one is correct?

Both of them are correct, which are not related to batch size.

However, we have foud some examples in some tensorflow project. form 2 are common used ,while form 1 is not.

For examaple:

So we also recommend to you to form 2.