When we use an LLM to generate text, we often see a temperature parameter. In this tutorial, we will discuss what it does.

Look at the code below:

The effect of temperature in LLMs

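When an LLM generates output, it typically uses a softmax function to get the probability of the next token. With \(z_i\) as the raw score (logit) of token \(i\), it can be defined as:

\(p_i = \frac{e^{z_i / T}}{\sum_j e^{z_j / T}}\)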

Here T is the temperature, which must be positive:

\(T\gt 0\)
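To make the role of T concrete, here is a minimal sketch of a softmax with temperature, using only the Python standard library. The function name `softmax_with_temperature` and the example logits are our own assumptions for illustration; real LLM libraries implement this step in their own way.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores (logits) into probabilities, scaled by a temperature T > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be greater than 0")
    # Divide every logit by T before applying softmax.
    scaled = [z / temperature for z in logits]
    # Subtract the maximum before exponentiating for numerical stability.
    largest = max(scaled)
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.1]
probs = softmax_with_temperature(logits, temperature=1.0)
print(probs)
```

The returned values sum to 1 and keep the same ordering as the logits. A non-positive temperature is rejected, because dividing by zero or by a negative value would not give a meaningful distribution.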

To understand the effect of T, we can read this tutorial:

An Explanation of Softmax Function with Hyperparameter – Machine Learning Tutorial

Here, S denotes the hyperparameter used in that tutorial, and:

\(T = \frac{1}{S}\)
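The relation can be checked numerically. The sketch below assumes that S is a scale factor that multiplies the logits, so that dividing by T and multiplying by S = 1/T give the same probabilities. The helper `softmax` and the sample values are hypothetical.

```python
import math

def softmax(xs):
    """Plain softmax over a list of scores."""
    largest = max(xs)
    exps = [math.exp(x - largest) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # hypothetical scores
T = 0.5
S = 1.0 / T  # assumed relation: S multiplies the logits, T divides them

by_division = softmax([z / T for z in logits])
by_multiplication = softmax([S * z for z in logits])

# Both forms should produce the same distribution.
same = all(math.isclose(a, b) for a, b in zip(by_division, by_multiplication))
print(same)
```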

The effect of T is as follows:

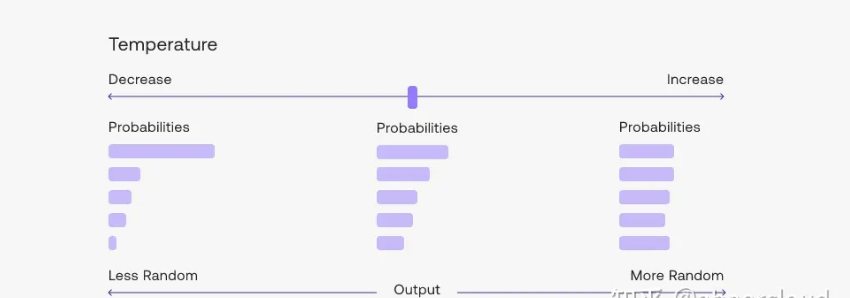

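0<T<=1: Lower values sharpen the distribution in favor of tokens with high probability, which reduces the diversity of the LLM output. At T=1 the softmax is unchanged.

T>1: The distribution is flattened, which gives tokens with low probability a better chance and makes the LLM generate more varied, creative outputs.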
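We can see both cases in a small experiment. The sketch below compares three temperatures on the same logits and reports the top probability and the entropy of the distribution (higher entropy means a flatter distribution). The token names, the logits, and the helper functions are hypothetical and chosen only for illustration.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Softmax over logits divided by a temperature T > 0."""
    scaled = [z / temperature for z in logits]
    largest = max(scaled)
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; larger means a flatter distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

tokens = ["cat", "dog", "bird", "fish"]  # hypothetical vocabulary
logits = [3.0, 2.0, 1.0, 0.5]            # hypothetical scores
rng = random.Random(0)                   # fixed seed for repeatable samples

results = {}
for T in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, T)
    # Draw ten tokens according to the temperature-scaled probabilities.
    samples = rng.choices(tokens, weights=probs, k=10)
    results[T] = (max(probs), entropy(probs))
    print(f"T={T}: top prob={max(probs):.3f}, "
          f"entropy={entropy(probs):.3f}, samples={samples}")
```

As T grows, the top probability drops and the entropy rises, so the sampled tokens become more varied.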

Here is an illustration.