In this tutorial, we will introduce how to use Python to generate hash-based audio fingerprints for audio retrieval and detection. The tutorial contains three parts, which we will introduce one by one.

Part 1: Generate hash audio fingerprinting

This is the core of this tutorial. The hash feature of an audio file is generated as follows:

- read a wav file and compute its STFT magnitude spectrogram

- detect the spectral peaks (the constellation map) on the log spectrogram

- generate hash features from pairs of peaks
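To make the third step concrete, here is a minimal, stdlib-only sketch of the pairing scheme that generate_target_hash uses, applied to a hand-made list of (time, frequency) peaks instead of real spectrogram peaks. The function name pair_hashes and the toy peaks are hypothetical. Because each hash stores time differences relative to the anchor peak, shifting the whole recording in time leaves the hashes unchanged, which is what lets a snippet match its source file.

```python
import hashlib


def pair_hashes(peaks, anchor_distance=1, fanout=3):
    """Hypothetical helper: hash pairs of target peaks relative to each anchor.

    peaks: list of (time, frequency) tuples sorted by time, then frequency.
    For each anchor, the target zone is the peaks anchor_distance ..
    anchor_distance + fanout - 1 positions later; each pair of consecutive
    targets is hashed together with the anchor frequency and the targets'
    time offsets from the anchor. (The tutorial also drops duplicates per file.)
    """
    hashes = []
    for i, (t_a, f_a) in enumerate(peaks):
        targets = peaks[i + anchor_distance: i + anchor_distance + fanout]
        for (t1, f1), (t2, f2) in zip(targets, targets[1:]):
            key = f"{(f_a, f1, t1 - t_a)}_{(f_a, f2, t2 - t_a)}"
            hashes.append(hashlib.sha256(key.encode()).hexdigest())
    return hashes


peaks = [(0, 10), (2, 40), (3, 25), (5, 10), (7, 40)]   # toy (time, frequency) peaks
h = pair_hashes(peaks)
shifted = pair_hashes([(t + 100, f) for t, f in peaks])  # same peaks, 100 frames later
print(len(h), h == shifted)  # 5 True
```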

This is the full code. Save it as file_util.py, because the scripts in Part 2 and Part 3 import it with import file_util.

import os

import numpy as np

import librosa

import librosa.display

from matplotlib import pyplot as plt

import hashlib

import pickle

from skimage.feature import peak_local_max

def traverseDir(dir, filetype = ".wav"):

files = []

for entry in os.scandir(dir):

if entry.is_dir():

files_temp = traverseDir(entry.path, filetype)

if files_temp:

files.extend(files_temp)

elif entry.is_file():

if entry.path.endswith(filetype):

files.append(str(entry.path))

return files

def getFilePathInfo(absolute):

dirname = os.path.dirname(absolute)

basename = os.path.basename(absolute)

info = os.path.splitext(basename)

filename = info[0]

extend = info[1]

return dirname, filename, extend

def save_to_file(label_bo, file = 'label.bin'):

with open(file, "wb") as f:

pickle.dump(label_bo, f)

def load_from_file(file = 'label.bin'):

with open(file,"rb") as f:

obj = pickle.load(f)

return obj

def normalise(wave):

wave = (wave - np.min(wave)) / (np.max(wave) - np.min(wave))

return wave

def calculate_stft(file, sr = 8000, n_fft = 512, win_length = 200, hop_length = 80, plot=True):

# read wav file data

if isinstance(file, str):

y, sr = librosa.load(file, sr = sr, mono = True)

else:

y = file # file is wavdata

#y = librosa.effects.trim(y)[0]

# normalise

signal = normalise(y)

# compute and plot STFT spectrogram

D = np.abs(librosa.stft(signal, n_fft=n_fft, window='hann', win_length=win_length, hop_length=hop_length)) + 1e-9

if plot:

plt.figure(figsize=(10, 5))

librosa.display.specshow(librosa.amplitude_to_db(D, ref=np.max), y_axis='linear',

x_axis='time', cmap='gray_r', sr=sr)

plt.show()

return D

# create peak and return fasle,true matrix

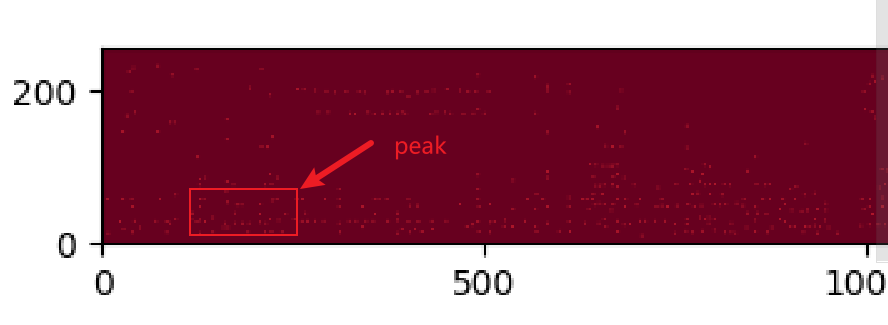

def calculate_contellation_map(D, min_distance=10, threshold_rel=0.05, plot=True):

# detect peaks from STFT and plot contellation map

coordinates = peak_local_max(np.log(D), min_distance=min_distance, threshold_rel=threshold_rel, indices=False)

# feature coordinates

print(type(coordinates))

print(coordinates.shape)

if plot:

plt.figure(figsize=(10, 5))

plt.imshow(coordinates, cmap="RdBu", origin='lower')

plt.show()

return coordinates

# create wav hash

def generate_target_hash(file, params):

# 1.get

min_distance = params['min_distance']

threshold_rel = params["threshold_rel"]

fanout = params["fanout"]

anchor_distance = params["anchor_distance"]

sr = params["sr"]

n_fft = params["n_fft"]

win_length = params["win_length"]

hop_length = params["hop_length"]

plot = params["plot"]

stft = calculate_stft(file, sr, n_fft, win_length, hop_length, plot = plot)

coordinates = calculate_contellation_map(stft, min_distance=min_distance, threshold_rel=threshold_rel, plot = plot)

print(f'Generating Hash {file}...')

# 2. get file name

dirname, filename, extend = getFilePathInfo(file)

target_address = {}

# 3.iter coordinate

contellation = np.where(coordinates.T == True) # a tuple

if len(contellation[0]) < (anchor_distance + 2): # at least two features

return target_address

x = len(contellation[0])

for i in range(x):

anchor_time = contellation[0][i] # time

anchor_frequency = contellation[1][i] # frequency

hash_info = []

for j in range(fanout): #

id = i + j + anchor_distance

if id >= x:

break

time = contellation[0][i + j + anchor_distance] # 5-33

frequency = contellation[1][i + j + anchor_distance]

hash_1 = (frequency, time)

hash_info.append(hash_1)

hash_len = len(hash_info)

for m in range(hash_len-1):

hash_1 = hash_info[m]

frequency = hash_1[0]

time = hash_1[1]

hash_1 = str((anchor_frequency, frequency, time - anchor_time))

hash_2 = hash_info[m+1]

frequency = hash_2[0]

time = hash_2[1]

hash_2 = str((anchor_frequency, frequency, time - anchor_time))

hash_str = hash_1+"_"+hash_2

hash_ = hashlib.sha256(hash_str.encode()).hexdigest()

if filename in target_address:

if hash_ in target_address[filename]:

continue

target_address[filename].append(hash_)

else:

target_address[filename] = [hash_]

return target_address

#target_address:{filename:[]}

def save_target_hash_feature(feature_file, target_address_list):

feature = {}

for target_address in target_address_list:

for filename in target_address:

for h in target_address[filename]:

if h in feature:

feature[h].append(filename)

else:

feature[h] = [filename]

#if not os.path.exists(feature_file):

save_to_file(feature, feature_file)

def count_list_frequency_proportion(list_data):

stat_frequency = {}

stat_proportion = {}

total = len(list_data)

for e in list_data:

if str(e) in stat_frequency:

stat_frequency[str(e)] += 1

else:

stat_frequency[str(e)] = 1

for key, value in stat_frequency.items():

stat_proportion[key] = value / total

return stat_frequency, stat_proportion

def search(query_hash_list, target_hash_dict, top = 3):

#results

results = []

for _hash in query_hash_list:

if _hash in target_hash_dict:

files = target_hash_dict[_hash]

results.extend(files)

# list

freq, proportion = count_list_frequency_proportion(results)

#{'1': 3, '2': 3, '4': 2, '5': 1, '6': 1, '7': 1, '3': 1}

# sort

results = sorted(freq.items(), key=lambda x: x[1], reverse=True)

print(results)Part 2: Generate hash audio fingerprinting and save

Next, we can create the hash fingerprints of a set of wav files and save them:

```python
import file_util

# targetDir: directory holding the wav files that will be searched
targetDir = 'database_recordings'

params = {}
params["plot"] = True
params["min_distance"] = 4
params["threshold_rel"] = 0.05
params["anchor_distance"] = 5
params["fanout"] = 28
params["sr"] = 8000
params["n_fft"] = 512
params["win_length"] = int(0.025 * params["sr"])  # 25 ms window
params["hop_length"] = int(0.01 * params["sr"])   # 10 ms hop
# --------------------------------------------------

targets = file_util.traverseDir(targetDir)
target_address_list = []
for f in targets:
    hash_feature = file_util.generate_target_hash(f, params)
    target_address_list.append(hash_feature)

# save hash feature
feature_file = "hash_feature.bin"
file_util.save_target_hash_feature(feature_file, target_address_list)
```

In this example, all wav files are stored in the database_recordings directory. The script generates the hash features of every file and saves them to hash_feature.bin as a dictionary that maps each hash to the list of file names containing it.
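This dictionary is an inverted index: a query hash is looked up with one dictionary access instead of scanning every file. Below is a minimal sketch of the same structure built by save_target_hash_feature, using made-up string hashes (stand-ins for the SHA-256 digests) and a hypothetical build_index helper. It pickles to an in-memory io.BytesIO buffer instead of hash_feature.bin so that it runs on its own.

```python
import io
import pickle


def build_index(per_file_hashes):
    """Hypothetical helper: invert [{filename: [hash, ...]}, ...] into {hash: [filename, ...]}."""
    index = {}
    for target_address in per_file_hashes:
        for filename, hashes in target_address.items():
            for h in hashes:
                index.setdefault(h, []).append(filename)
    return index


per_file = [{"song_a": ["h1", "h2", "h3"]}, {"song_b": ["h2", "h4"]}]
index = build_index(per_file)
print(index)  # {'h1': ['song_a'], 'h2': ['song_a', 'song_b'], 'h3': ['song_a'], 'h4': ['song_b']}

# round-trip through pickle, as save_to_file / load_from_file do with a real file
buffer = io.BytesIO()
pickle.dump(index, buffer)
buffer.seek(0)
restored = pickle.load(buffer)
```

A hash shared by several recordings (h2 here) simply lists all of them, so a single lookup returns every candidate file.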

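Because params["plot"] is True, running this code displays the STFT spectrogram and the constellation map of each file while its hashes are generated.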

Part 3: Search audio based on hash audio fingerprinting

Here is the example code:

```python
import file_util

queryDir = 'query_recordings'

# these parameters must match the ones used to build hash_feature.bin
params = {}
params["plot"] = False
params["min_distance"] = 4
params["threshold_rel"] = 0.05
params["anchor_distance"] = 5
params["fanout"] = 28
params["sr"] = 8000
params["n_fft"] = 512
params["win_length"] = int(0.025 * params["sr"])
params["hop_length"] = int(0.01 * params["sr"])
# --------------------------------------------------

queries = file_util.traverseDir(queryDir)
feature_file = "hash_feature.bin"
target_hash_dict = file_util.load_from_file(feature_file)

for f in queries[0:1]:  # only the first query in this example
    dirname, filename, extend = file_util.getFilePathInfo(f)
    hash_feature = file_util.generate_target_hash(f, params)
    # empty list if too few peaks were found to build any hash
    query_hash_list = hash_feature.get(filename, [])
    print(type(query_hash_list))
    results = file_util.search(query_hash_list, target_hash_dict, top=3)
```

Running this code, we may see:

```
<class 'numpy.ndarray'>
(257, 1004)
Generating Hash query_recordings\pop.00085-snippet-10-20.wav...
<class 'list'>
[('pop.00085', 11), ('classical.00005', 2), ('classical.00003', 1), ('classical.00007', 1), ('pop.00086', 1), ('classical.00004', 1), ('classical.00001', 1), ('classical.00002', 1)]
```
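The shape (257, 1004) printed above follows from the STFT parameters. The arithmetic below is a small sketch that assumes librosa.stft's default center=True padding and an even n_fft, under which a signal of N samples yields n_fft // 2 + 1 frequency bins and 1 + N // hop_length frames; stft_shape is a hypothetical helper, not part of librosa.

```python
def stft_shape(num_samples, n_fft=512, hop_length=80):
    """Hypothetical helper: (frequency bins, frames) of a centered STFT, even n_fft assumed."""
    n_bins = n_fft // 2 + 1                    # one-sided spectrum, 0 Hz .. sr / 2
    n_frames = 1 + num_samples // hop_length   # center=True pads n_fft // 2 on each side
    return n_bins, n_frames


sr = 8000
win_length = int(0.025 * sr)  # 25 ms -> 200 samples
hop_length = int(0.01 * sr)   # 10 ms -> 80 samples

print(win_length, hop_length)                      # 200 80
print(sr / 512)                                    # 15.625 (Hz between frequency bins)
print(stft_shape(10 * sr, hop_length=hop_length))  # (257, 1001)
print(stft_shape(80240, hop_length=hop_length))    # (257, 1004)
```

So one step in a hash's frequency value is 15.625 Hz, one step in its time offset is 10 ms, and the 1004 frames of the query correspond to 80240 to 80319 samples, slightly over 10 s of audio.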

The best match is pop.00085.wav, the recording that the snippet pop.00085-snippet-10-20.wav comes from: 11 hash features match, while every other candidate matches at most 2.
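The ranking printed by search is a simple vote: each query hash found in the index adds one vote to every file stored under it, and files are sorted by their vote count. A minimal sketch of the same idea with collections.Counter on a made-up index follows; rank_matches and the toy hashes are hypothetical. Like the tutorial's search, it does not check whether the matched hashes line up at a consistent time offset, so unrelated files can still collect a few votes from chance collisions.

```python
from collections import Counter


def rank_matches(query_hashes, index, top=3):
    """Hypothetical helper: count how many query hashes each stored file shares."""
    votes = Counter()
    for h in query_hashes:
        votes.update(index.get(h, []))  # unknown hashes contribute nothing
    return votes.most_common(top)


index = {"h1": ["pop"], "h2": ["pop", "classical"], "h3": ["pop"], "h9": ["classical"]}
print(rank_matches(["h1", "h2", "h3", "h5"], index))  # [('pop', 3), ('classical', 1)]
```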

Moreover, we can also use VAD (voice activity detection) to remove silence from a wav file. Here is the tutorial:

Simple Guide to Use Python webrtcvad to Remove Silence and Noise in an Audio – Python Tutorial