Both model.named_parameters() and model.parameters() let us inspect all the parameters in a PyTorch model. What is the difference between them? In this tutorial, we will discuss this topic.

model.named_parameters() vs model.parameters()

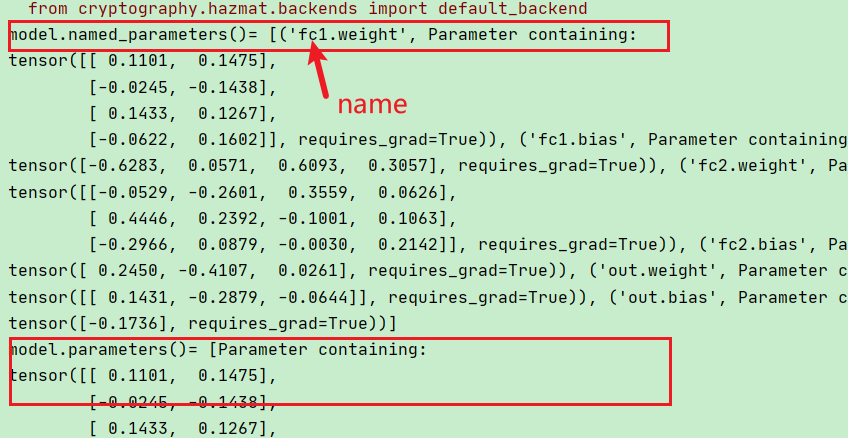

model.named_parameters(): it returns a generator that yields the name and value of every parameter, whether requires_grad is False or True.
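To make the (name, parameter) pairing concrete, here is a minimal sketch. The `demo` model is a hypothetical stand-in built with nn.Sequential, not the model used later in this tutorial, so its parameter names are the layer indices assigned by nn.Sequential.

```python
import torch.nn as nn

# Hypothetical two-layer model, used only for illustration.
demo = nn.Sequential(nn.Linear(2, 4), nn.Linear(4, 1))

# Each item yielded by named_parameters() is a (name, parameter) tuple.
name_to_shape = {name: tuple(p.shape) for name, p in demo.named_parameters()}

for name, shape in name_to_shape.items():
    print(name, shape)
```

The names combine the submodule name with the parameter name, for example `0.weight` and `0.bias` for the first layer here.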


model.parameters(): it also returns a generator, but it yields only the parameter values, without names (again, whether requires_grad is False or True).
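Since model.parameters() yields only the tensors, it suits tasks that do not need names, such as counting parameters. The sketch below assumes a hypothetical `demo` model built with nn.Sequential purely for illustration.

```python
import torch.nn as nn

# Hypothetical two-layer model, used only for illustration.
demo = nn.Sequential(nn.Linear(2, 4), nn.Linear(4, 1))

# parameters() yields nn.Parameter objects only, with no names attached.
total = sum(p.numel() for p in demo.parameters())
trainable = sum(p.numel() for p in demo.parameters() if p.requires_grad)

print("total:", total, "trainable:", trainable)
```

The same generator is what we usually pass to an optimizer when every parameter should be updated.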

For example:

    import torch.nn as nn


    class Net(nn.Module):
        def __init__(self):
            super().__init__()
            # Three fully connected layers: 2 -> 4 -> 3 -> 1
            self.fc1 = nn.Linear(2, 4)
            self.fc2 = nn.Linear(4, 3)
            self.out = nn.Linear(3, 1)
            self.out_act = nn.Sigmoid()

        def forward(self, inputs):
            a1 = self.fc1(inputs)
            a2 = self.fc2(a1)
            a3 = self.out(a2)
            y = self.out_act(a3)
            return y


    model = Net()

    # named_parameters() yields (name, parameter) tuples
    params = list(model.named_parameters())
    print("model.named_parameters()=", params)

    # parameters() yields only the parameters
    print("model.parameters()=", list(model.parameters()))

Running this code shows that model.named_parameters() yields (name, parameter) pairs such as fc1.weight and fc1.bias, while model.parameters() yields only the parameter tensors.

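It means we cannot filter parameters by name with model.parameters(), because it does not return the names. When we need to select specific parameters, we should use model.named_parameters().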
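As a rough sketch of filtering by name, the example below freezes the first layer of a hypothetical `demo` model by matching the name prefix. The model and the prefix `"0."` are assumptions made for illustration; with a custom module the prefix would be the attribute name, such as `fc1.`.

```python
import torch.nn as nn

# Hypothetical two-layer model, used only for illustration.
demo = nn.Sequential(nn.Linear(2, 4), nn.Linear(4, 1))

# Freeze every parameter whose name starts with "0." (the first layer).
for name, param in demo.named_parameters():
    if name.startswith("0."):
        param.requires_grad = False

# Collect the names of the parameters that are still trainable.
trainable_names = [n for n, p in demo.named_parameters() if p.requires_grad]
print(trainable_names)
```

This kind of selection is not possible with model.parameters() alone, because the tensors it yields carry no names.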
