Cosine similarity softmax is an improvement on the traditional softmax function. In this tutorial, we will introduce it for machine learning beginners.

Softmax function

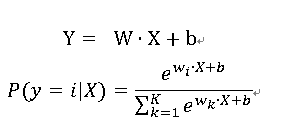

The softmax function is widely used in text classification. For a text classification problem, the softmax function can be defined as:

$$P(y = i \mid X) = \frac{e^{w_i \cdot X + b_i}}{\sum_{k=1}^{K} e^{w_k \cdot X + b_k}}$$

where K is the number of classes.

From the softmax equation for P(y = i|X), we can see that:

The value of P is determined by the dot product of the vector $w_i$ and X (plus the bias $b_i$).
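The following minimal sketch computes P(y = i|X) from a list of class weight vectors $w_i$, biases $b_i$ and an input X. It uses only the Python standard library. The helper names `softmax` and `softmax_classifier` and the toy values are hypothetical and exist only for illustration. Subtracting the largest logit before exponentiating is a common numerical-stability trick and does not change the result.

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def softmax_classifier(W, b, X):
    """Hypothetical helper: P(y = i | X) for each class i.

    W is a list of K weight vectors w_i, b is a list of K biases b_i,
    and X is the input feature vector.
    """
    logits = [dot(w_i, X) + b_i for w_i, b_i in zip(W, b)]
    return softmax(logits)

# Hypothetical toy example: K = 3 classes, 2-dimensional features.
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
b = [0.0, 0.0, 0.0]
X = [2.0, 1.0]
probs = softmax_classifier(W, b, X)
print(probs)
```

Here the dot products are 2, 1 and -3, so class 0 receives the largest probability. This matches the observation that P is driven by $w_i \cdot X$.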

Cosine Similarity Softmax

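Suppose the lengths of W and X are both 1 (||W|| = ||X|| = 1) and b = 0. Then:

$$Y = W \cdot X = \|W\| \, \|X\| \cos\theta = \cos\theta$$

where θ is the angle between W and X.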
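To check the identity numerically, here is a small sketch. It normalizes two arbitrary, hypothetical vectors to length 1 and confirms that their dot product equals the cosine of the angle between the original vectors.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Euclidean (L2) length of a vector."""
    return math.sqrt(dot(u, u))

def normalize(u):
    """Scale a vector to unit length."""
    n = norm(u)
    return [a / n for a in u]

# Two arbitrary (hypothetical) vectors.
w = [3.0, 4.0]
x = [1.0, 2.0]

# cos(theta) from the general formula W.X / (||W|| * ||X||)
cos_theta = dot(w, x) / (norm(w) * norm(x))

# Dot product of the unit-length versions: Y = W.X with ||W|| = ||X|| = 1
y = dot(normalize(w), normalize(x))
print(cos_theta, y)  # the two values agree up to floating-point rounding
```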

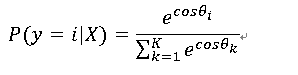

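Cosine similarity softmax can therefore be written as:

$$P(y = i \mid X) = \frac{e^{\cos\theta_i}}{\sum_{k=1}^{K} e^{\cos\theta_k}}$$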

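If we only suppose b = 0, we can still define P(y = i|X) as:

$$P(y = i \mid X) = \frac{e^{\cos\theta_i}}{\sum_{k=1}^{K} e^{\cos\theta_k}}$$

where

$$\cos\theta_i = \frac{w_i \cdot X}{\|w_i\| \, \|X\|}$$

In other words, $w_i$ and X do not need to have length 1 beforehand, because the normalization happens inside the formula.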
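Below is a minimal sketch of cosine similarity softmax with b = 0, written in plain Python. The helper names (`cosine`, `cosine_softmax`) and the toy vectors are hypothetical. The sketch also shows one consequence of the formula: rescaling a weight vector $w_i$ leaves its cosine unchanged, so the output probabilities do not change.

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cosine(u, v):
    """cos(theta) = u.v / (||u|| * ||v||)."""
    d = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return d / (nu * nv)

def cosine_softmax(W, X):
    """Hypothetical helper: cosine similarity softmax with b = 0.

    The logit for class i is cos(theta_i) between w_i and X.
    """
    return softmax([cosine(w_i, X) for w_i in W])

# Hypothetical toy example: K = 3 classes, 2-dimensional features.
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
X = [2.0, 1.0]
probs = cosine_softmax(W, X)

# Multiplying w_0 by 10 does not change its cosine with X,
# so the probabilities stay the same.
W_scaled = [[10.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
probs_scaled = cosine_softmax(W_scaled, X)
print(probs, probs_scaled)
```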

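However, cosθ ∈ [-1, 1], so the inputs to the softmax fall in a narrow range and the output probabilities cannot get close to 1. Consider the best case: cosθ = 1 for the true class and cosθ = -1 for the other K - 1 classes. Even then, P(y = i|X) = e^2 / (e^2 + K - 1), which is only about 0.45 when K = 10. To improve the performance of cosine similarity softmax, we can scale the cosine by a factor S:

$$P(y = i \mid X) = \frac{e^{S \cos\theta_i}}{\sum_{k=1}^{K} e^{S \cos\theta_k}}$$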
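The sketch below makes the limitation concrete. It evaluates the best case described above with K = 10, first without scaling (S = 1) and then with S = 4. The helper name `scaled_cosine_softmax` is hypothetical, and the cosines are idealized values rather than outputs of a trained model.

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_cosine_softmax(cosines, s):
    """Hypothetical helper: P(y = i | X) = exp(s * cos_i) / sum_k exp(s * cos_k)."""
    return softmax([s * c for c in cosines])

# Best case for class 0: cos = 1 for the true class, cos = -1 for the other K - 1 classes.
K = 10
best_case = [1.0] + [-1.0] * (K - 1)

p_unscaled = scaled_cosine_softmax(best_case, 1.0)[0]  # equals e^2 / (e^2 + K - 1)
p_scaled = scaled_cosine_softmax(best_case, 4.0)[0]    # equals e^8 / (e^8 + K - 1)
print(p_unscaled, p_scaled)
```

Without scaling, the true class stays below 0.5 even in this ideal situation. With S = 4, it rises above 0.99.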

S is a hyperparameter, and you can set its value according to your own task.

For example, S can be 2, 4, 6, 32 or 64.
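To see how the choice of S affects the output, the sketch below sweeps S over 1 and the values listed above. It uses one fixed, hypothetical set of cosines for K = 3 classes. For the closest class, P(y = 0|X) = 1 / (1 + Σ e^{-S(cos θ_0 - cos θ_k)}), so it increases with S. Very large values push the output toward a one-hot distribution.

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical cosines between X and K = 3 class vectors; class 0 is the closest.
cosines = [0.8, 0.3, -0.2]

top_prob = {}
for s in [1, 2, 4, 6, 32, 64]:
    probs = softmax([s * c for c in cosines])
    top_prob[s] = probs[0]
    print(f"S = {s:2d}: P(y = 0 | X) = {probs[0]:.6f}")
```

The sweep only illustrates the trend. A suitable S for a real task still has to be chosen empirically.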