Gated End-to-End Memory Networks (GMemN2N) were proposed by Fei Liu and Julien Perez at EACL 2017 (https://www.aclweb.org/anthology/E17-1001) as an extension of End-to-End Memory Networks (MemN2N).

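In a standard End-to-End Memory Network, each hop reads from memory to produce an output $o^k$. The controller state for the next hop, $u^{k+1}$, is simply the sum of the current state $u^k$ and that output:

$$
u^{k+1} = u^k + o^k
$$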

This raises a question: should $u^k$ and $o^k$ really contribute equally to $u^{k+1}$?

If not, how should their relative contributions be weighted?
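To make the fixed weighting concrete, here is a minimal NumPy sketch of one standard MemN2N hop. It assumes the input memory embeddings $m_i$ and output memory embeddings $c_i$ have already been computed, and uses random values in place of trained weights; the function names are illustrative, not taken from the paper's code.

```python
import numpy as np


def softmax(x):
    # Numerically stable softmax over a 1-D array.
    e = np.exp(x - np.max(x))
    return e / e.sum()


def memn2n_hop(u, memory_in, memory_out):
    """One hop of a standard End-to-End Memory Network (sketch).

    u          : controller state u^k, shape (d,)
    memory_in  : input memory embeddings m_i, shape (n, d)
    memory_out : output memory embeddings c_i, shape (n, d)
    """
    p = softmax(memory_in @ u)  # attention p_i = softmax(u^T m_i), shape (n,)
    o = p @ memory_out          # read-out o^k = sum_i p_i c_i, shape (d,)
    return u + o                # u^{k+1} = u^k + o^k: both terms get weight 1


rng = np.random.default_rng(0)
u = rng.normal(size=4)
m = rng.normal(size=(3, 4))
c = rng.normal(size=(3, 4))
u_next = memn2n_hop(u, m, c)
```

Whatever the attention weights turn out to be, the last line always adds $u^k$ and $o^k$ with the same weight, which is exactly what the question above is about.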

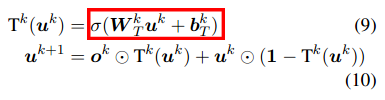

In this paper, the authors use a sigmoid function to build a gate that controls this weighting, similar to the gates in an LSTM.

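The gate and the gated update are:

$$
T^k(u^k) = \sigma\left(W_T^k u^k + b_T^k\right)
$$

$$
u^{k+1} = o^k \odot T^k(u^k) + u^k \odot \left(1 - T^k(u^k)\right)
$$

where $\sigma$ is the sigmoid function and $\odot$ denotes element-wise multiplication.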

So the key idea of this paper is to compute a sigmoid gate from $u^k$ and use it to weight $o^k$ against $u^k$, dimension by dimension, instead of simply adding them.
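A minimal NumPy sketch of one gated hop follows, assuming the same attention read-out as a standard MemN2N hop and random values for the gate parameters `W_T` and `b_T` (names chosen here for illustration). It also shows that a strongly negative bias closes the gate so the state passes through unchanged.

```python
import numpy as np


def softmax(x):
    # Numerically stable softmax over a 1-D array.
    e = np.exp(x - np.max(x))
    return e / e.sum()


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gated_hop(u, memory_in, memory_out, W_T, b_T):
    """One gated hop (sketch of the GMemN2N update).

    Returns the next state u^{k+1} and the gate T^k(u^k).
    """
    p = softmax(memory_in @ u)      # attention over memory slots
    o = p @ memory_out              # read-out o^k
    T = sigmoid(W_T @ u + b_T)      # gate computed from u^k only
    u_next = o * T + u * (1.0 - T)  # element-wise interpolation of o^k and u^k
    return u_next, T


rng = np.random.default_rng(0)
d, n = 4, 3
u = rng.normal(size=d)
m = rng.normal(size=(n, d))
c = rng.normal(size=(n, d))
W_T = rng.normal(scale=0.1, size=(d, d))

u_next, T = gated_hop(u, m, c, W_T, np.zeros(d))

# A strongly negative bias drives T toward 0, so u^{k+1} is (almost) exactly u^k.
u_closed, _ = gated_hop(u, m, c, W_T, np.full(d, -50.0))
```

Because every entry of $T$ lies in $(0, 1)$, each dimension of $u^{k+1}$ is a convex combination of the matching dimensions of $o^k$ and $u^k$, so the network can learn per dimension how much to take from memory.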

This raises a further question: why not compute the gate from both $u^k$ and $o^k$ instead?

I think this is feasible.
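One concrete form of this idea, assumed here rather than taken from the paper, is to feed the concatenation $[u^k; o^k]$ into the sigmoid. The NumPy sketch below (hypothetical function names, random weights) checks that this variant contains the paper's gate as a special case: if the half of $W$ that multiplies $o^k$ is zero, the two gates coincide.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gate_from_u(u, W_u, b):
    # Gate as in the paper: depends on u^k only. W_u has shape (d, d).
    return sigmoid(W_u @ u + b)


def gate_from_u_and_o(u, o, W, b):
    # Hypothetical variant: gate depends on [u^k; o^k]. W has shape (d, 2d).
    return sigmoid(W @ np.concatenate([u, o]) + b)


rng = np.random.default_rng(1)
d = 4
u = rng.normal(size=d)
o = rng.normal(size=d)
W_u = rng.normal(size=(d, d))
b = np.zeros(d)

# Zeroing the o-half of W reduces the variant to the paper's gate.
W = np.hstack([W_u, np.zeros((d, d))])
T_paper = gate_from_u(u, W_u, b)
T_variant = gate_from_u_and_o(u, o, W, b)
```

The cost is a larger gate: for state size $d$, the paper's gate has $d(d+1)$ parameters per hop, while this variant has $d(2d+1)$.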

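The paper also compares two ways of tying the gate parameters $W$ and $b$ across hops: global, where one $W$ and $b$ are shared by all hops, and hop-specific, where each hop $k$ has its own $W^k$ and $b^k$.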
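The difference between the two schemes is only which parameters each hop's gate reads. The following NumPy sketch builds both variants and counts their gate parameters; the helper names (`make_gate_params`, `gate`, `count_params`) are hypothetical, and random values stand in for trained weights.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def make_gate_params(d, num_hops, tying, rng):
    """Return a list of (W, b) pairs, one entry per hop."""
    if tying == "global":
        # One shared pair: every hop refers to the same arrays.
        W, b = rng.normal(scale=0.1, size=(d, d)), np.zeros(d)
        return [(W, b)] * num_hops
    if tying == "hop-specific":
        # An independent pair for each hop k.
        return [(rng.normal(scale=0.1, size=(d, d)), np.zeros(d))
                for _ in range(num_hops)]
    raise ValueError(f"unknown tying scheme: {tying!r}")


def gate(u, params, k):
    # Gate T^k(u^k) at hop k, using whatever parameters hop k is tied to.
    W, b = params[k]
    return sigmoid(W @ u + b)


def count_params(params):
    # Count each distinct weight array once, so shared parameters are not double-counted.
    unique = {id(W): W.size + b.size for W, b in params}
    return sum(unique.values())


rng = np.random.default_rng(0)
d, hops = 4, 3
global_params = make_gate_params(d, hops, "global", rng)
hop_params = make_gate_params(d, hops, "hop-specific", rng)
u = rng.normal(size=d)
```

With $d = 4$ and three hops, global tying has $4 \cdot 4 + 4 = 20$ gate parameters and hop-specific tying has $3 \cdot 20 = 60$. Under global tying the same input gives the same gate at every hop, while hop-specific tying lets each hop gate differently.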

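The experimental results show that hop-specific tying performs better than global tying. This is not surprising: with global tying, a single $W$ and $b$ must serve every hop, so updates that help the gate at one hop can easily disturb it at another, whereas hop-specific parameters let each hop learn its own gating behavior.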