L2 regularization is often used to avoid over-fitting in deep learning. In this tutorial, we will discuss some of its basic features for deep learning beginners.

Formula

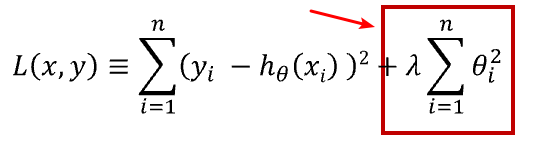

For a deep learning model, the loss function is often defined as:

final_loss = loss + l2

such as:

Notice:

1. n is the dimension of yi and xi. λ is the regularization parameter (the penalty coefficient); it is often set to a value between 0.001 and 0.01.

2. In this formula, λ should be λ/2
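
To see what the λ/2 in note 2 does, here is a minimal sketch in plain Python. The function names `l2_penalty` and `l2_penalty_grad` and the sample weights are made up for illustration. With the factor 1/2, the derivative of the penalty with respect to each weight is simply λ·w.

```python
def l2_penalty(weights, lam):
    """Return (lam / 2) * sum of squared weights."""
    return 0.5 * lam * sum(w * w for w in weights)


def l2_penalty_grad(weights, lam):
    """Return the derivative of the penalty for each weight: lam * w."""
    return [lam * w for w in weights]


# Hypothetical example values, chosen only for illustration.
weights = [0.5, -1.0, 2.0]
lam = 0.01

penalty = l2_penalty(weights, lam)
grad = l2_penalty_grad(weights, lam)
print(penalty, grad)
```

Without the 1/2, each derivative would be 2·λ·w, so the factor only rescales λ and keeps the gradient tidy.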

How to calculate l2 regularization?

We usually train a deep learning model on batches of samples. For the loss function, we usually average the loss between y and ypred over the batch. The L2 regularization term, however, depends only on the weights and not on the samples, so we do not need to average it over batch_size.
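
The point about averaging can be sketched in plain Python. This is only an illustration: mean squared error is assumed as the data loss, and the names `mean_squared_error`, `l2_term` and `final_loss` are hypothetical helpers, not part of any library.

```python
def mean_squared_error(y_true, y_pred):
    """Data loss: averaged over the samples in the batch."""
    n = len(y_true)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n


def l2_term(weights):
    """L2 term: sum of squared weights / 2, not divided by batch size."""
    return sum(w * w for w in weights) / 2


def final_loss(y_true, y_pred, weights, lam):
    """final_loss = loss + lam * l2."""
    return mean_squared_error(y_true, y_pred) + lam * l2_term(weights)


# Hypothetical values: the same weights, evaluated on two batch sizes.
weights = [1.0, -2.0]
lam = 0.01

loss_batch_4 = final_loss([1.0, 2.0, 3.0, 4.0], [1.5, 2.5, 2.5, 3.5], weights, lam)
loss_batch_2 = final_loss([1.0, 2.0], [1.5, 2.5], weights, lam)
print(loss_batch_4, loss_batch_2)
```

Every sample here has the same squared error, so both batches give the same data loss. The regularization term is also the same for both, because it depends only on the weights and not on how many samples are in the batch.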

In TensorFlow, we can use the tf.nn.l2_loss() function to calculate the L2 regularization term, which is:

l2 = sum(all_weights_in_model^2)/2
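
The formula above can be reproduced in plain Python. The sketch below is a stand-in for the formula and does not call TensorFlow. The `l2_loss` helper and the layer names `w1` and `w2` are assumptions made for this example.

```python
def l2_loss(tensor):
    """Return sum(t ** 2) / 2 over all numbers in a nested list."""
    if isinstance(tensor, (int, float)):
        return tensor * tensor / 2
    return sum(l2_loss(item) for item in tensor)


# Hypothetical weight matrices of a two-layer model.
layers = {
    "w1": [[1.0, 2.0], [3.0, 4.0]],
    "w2": [[0.5], [-0.5]],
}

# Add up the L2 term of every weight matrix in the model.
l2 = sum(l2_loss(w) for w in layers.values())
print(l2)
```

Here `w1` contributes (1 + 4 + 9 + 16) / 2 = 15 and `w2` contributes (0.25 + 0.25) / 2 = 0.25, so l2 is 15.25. This value is then multiplied by λ and added to the loss.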

If you are using TensorFlow, you can read this tutorial to learn how to calculate L2 regularization:

Multi-layer Neural Network Implements L2 Regularization in TensorFlow – TensorFLow Tutorial