The Long Short-Term Memory (LSTM) network is a popular deep learning model used in many fields, such as sentiment analysis, data mining, and machine translation.

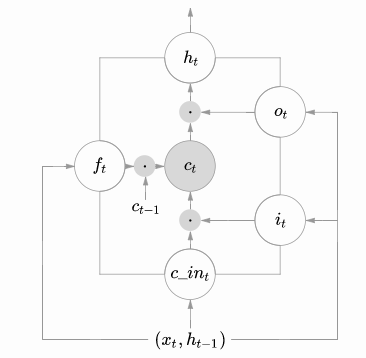

LSTM was first proposed by Hochreiter and Schmidhuber in 1997. It is a variant of the recurrent neural network (RNN) designed to alleviate the vanishing and exploding gradient problems that make plain RNNs hard to train. A standard LSTM cell contains three gates: the forget gate, the input gate, and the output gate. Together they decide which information enters the cell, which is kept, and which is passed out at the current time step.
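To make the gating concrete, here is a minimal pure-Python sketch of one LSTM time step. It assumes the commonly used formulation with a forget, input, and output gate, which may not match the exact notation in the figures. The names `lstm_step`, `affine`, and `scalar_params`, and the per-gate parameter layout `(W, U, b)`, are hypothetical choices made for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
# Hypothetical layout: (W, U, b) = input weights, recurrent weights, bias
GateParams = Tuple[Matrix, Matrix, Vector]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function; maps z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(params: GateParams, x: Sequence[float], h: Sequence[float]) -> Vector:
    """Compute W x + U h + b row by row for list-based matrices."""
    W, U, b = params
    return [
        sum(w * xk for w, xk in zip(w_row, x))
        + sum(u * hk for u, hk in zip(u_row, h))
        + b_j
        for w_row, u_row, b_j in zip(W, U, b)
    ]


def lstm_step(
    x: Sequence[float],
    h_prev: Sequence[float],
    c_prev: Sequence[float],
    params: Dict[str, GateParams],
) -> Tuple[Vector, Vector]:
    """One LSTM time step under the assumed standard formulation."""
    f = [sigmoid(z) for z in affine(params["f"], x, h_prev)]    # forget gate: how much of c_prev to keep
    i = [sigmoid(z) for z in affine(params["i"], x, h_prev)]    # input gate: how much new content to write
    o = [sigmoid(z) for z in affine(params["o"], x, h_prev)]    # output gate: how much of the cell to expose
    g = [math.tanh(z) for z in affine(params["c"], x, h_prev)]  # candidate values (input transform)
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]  # new cell state
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]                     # new hidden state
    return h, c


def scalar_params(bias: float) -> GateParams:
    """Hypothetical helper: a 1-unit gate with zero weights and the given bias."""
    return ([[0.0]], [[0.0]], [bias])


if __name__ == "__main__":
    # Forget gate pushed near 1, input gate pushed near 0:
    # the cell state should pass through almost unchanged.
    params = {
        "f": scalar_params(20.0),
        "i": scalar_params(-20.0),
        "o": scalar_params(0.0),
        "c": scalar_params(0.0),
    }
    h, c = lstm_step([1.0], [0.0], [0.5], params)
    print(h, c)
```

The usage in `__main__` illustrates one common intuition for why LSTMs train better than plain RNNs: when the forget gate stays near 1 and the input gate near 0, the cell state is carried across the time step almost untouched.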

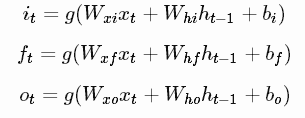

The cell is defined by the following equations:

Gates:

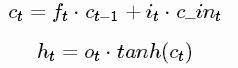
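For readers without the figure, the gates in the commonly used formulation can be written as below. The symbols are an assumption and may differ from those in the image: $x_t$ is the input at step $t$, $h_{t-1}$ the previous hidden state, $\sigma$ the logistic sigmoid, and $W_\ast$, $U_\ast$, $b_\ast$ learned weights and biases.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o)
\end{aligned}
$$

Because $\sigma$ maps into $(0, 1)$, each gate acts as an element-wise soft switch between blocking and passing information.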

Input transform:

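In the commonly used notation (assumed here, since the original figure is missing), the input transform produces candidate cell values $\tilde{c}_t$ from the current input $x_t$ and the previous hidden state $h_{t-1}$, with learned weights $W_c$, $U_c$ and bias $b_c$:

$$
\tilde{c}_t = \tanh(W_c x_t + U_c h_{t-1} + b_c)
$$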

State update:
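Assuming the standard formulation, the cell state $c_t$ and the hidden state $h_t$ are updated from the forget, input, and output gates $f_t$, $i_t$, $o_t$ and the candidate values $\tilde{c}_t$, where $\odot$ denotes element-wise multiplication:

$$
\begin{aligned}
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The additive form of the $c_t$ update is the usual explanation for why information and gradients can survive many time steps when $f_t$ stays close to 1.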

The structure of the LSTM is: