The softmax function is widely used in deep learning, but what does its gradient look like? This tutorial for deep learning beginners covers the softmax formula, its gradient, and why the gradient can vanish.

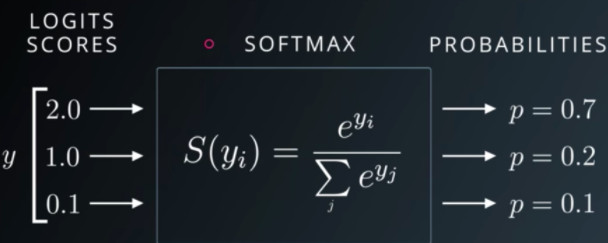

The equation of the softmax function

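The formula of the softmax function, for an input vector $z = (z_1, \dots, z_n)$, is:

$$a_i = \frac{e^{z_i}}{\sum_{j=1}^{n} e^{z_j}}, \quad i = 1, \dots, n$$

where $a_i$ is the $i$-th output and $a_1 + a_2 + \dots + a_n = 1$.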
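To make the definition concrete, here is a minimal sketch in plain Python. It assumes the standard softmax definition (each output is the exponential of an input divided by the sum of all exponentials); the function name `softmax` and the input values are only illustrative. Subtracting the maximum before exponentiating is a common numerical-stability trick and does not change the result.

```python
import math

def softmax(z):
    """Return the softmax of a list of floats (illustrative helper)."""
    m = max(z)                           # shift by the max to avoid overflow in exp
    exps = [math.exp(v - m) for v in z]  # unnormalized positive scores
    total = sum(exps)
    return [e / total for e in exps]     # normalize so the outputs sum to 1

a = softmax([1.0, 2.0, 3.0])
print(a)       # larger inputs receive larger outputs
print(sum(a))  # should be 1 up to floating-point rounding
```

Every output is positive and the outputs sum to 1, which is why softmax is often read as a probability distribution over classes.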

The gradient of the softmax function

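The gradient of the softmax function, taking the derivative of each output $a_i$ with respect to each input $z_j$, is:

$$\frac{\partial a_i}{\partial z_j} = \begin{cases} a_i (1 - a_i), & i = j \\ -a_i a_j, & i \neq j \end{cases}$$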
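As a sanity check, the sketch below builds the full matrix of partial derivatives (the Jacobian) in plain Python. It assumes the standard result that the derivative of output `a[i]` with respect to input `z[j]` is `a[i] * (1 - a[i])` when `i == j` and `-a[i] * a[j]` otherwise; the names `softmax` and `softmax_jacobian` are hypothetical helpers, not part of any library.

```python
import math

def softmax(z):
    """Return the softmax of a list of floats (illustrative helper)."""
    m = max(z)                           # shift by the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_jacobian(z):
    """Return J where J[i][j] is the derivative of a[i] with respect to z[j]."""
    a = softmax(z)
    n = len(a)
    # a[i] * (delta_ij - a[j]) covers both the i == j and i != j cases
    return [[a[i] * ((1.0 if i == j else 0.0) - a[j]) for j in range(n)]
            for i in range(n)]

J = softmax_jacobian([1.0, 2.0, 3.0])
for row in J:
    print(row)  # each row should sum to roughly 0
```

Each row sums to zero because the outputs always sum to 1: raising one output must lower the others by the same total amount.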

From the above, we can see that softmax may cause the vanishing gradient problem.

For example, if $a_i \approx 1$ or $a_i \approx 0$, the gradient of softmax will be close to 0, so the weights feeding into the softmax function will barely be updated. Keep this in mind if you plan to use the softmax function.
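The saturation effect can be seen numerically with a small sketch. It assumes the diagonal term of the softmax gradient is `a[i] * (1 - a[i])`; the helper names and the two input vectors are made up for illustration.

```python
import math

def softmax(z):
    """Return the softmax of a list of floats (illustrative helper)."""
    m = max(z)                           # shift by the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def diagonal_gradient(z):
    """Return a[i] * (1 - a[i]) for every output, the i == j gradient term."""
    return [ai * (1.0 - ai) for ai in softmax(z)]

# Balanced inputs: every output is 1/3, so each term is (1/3) * (2/3) = 2/9.
balanced = diagonal_gradient([0.0, 0.0, 0.0])

# Saturated inputs: one output is close to 1 and the others are close to 0,
# so every term shrinks toward 0.
saturated = diagonal_gradient([20.0, 0.0, 0.0])

print(balanced)
print(saturated)
```

With balanced inputs the gradient terms are comfortably away from zero, while in the saturated case all of them become tiny, which is the situation in which learning stalls.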