TensorFlow tf.nn.sigmoid_cross_entropy_with_logits() is one of the functions that calculate cross entropy. In this tutorial, we will introduce some tips on using this function. If you are a TensorFlow beginner, you should pay attention to these tips.

Syntax

tf.nn.sigmoid_cross_entropy_with_logits(
    _sentinel=None,
    labels=None,
    logits=None,
    name=None
)

Computes sigmoid cross entropy given logits.

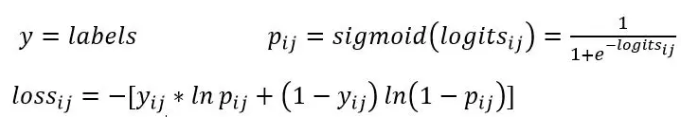

How to compute cross entropy by this function

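For example, if labels = y and logits = p, this function first computes the sigmoid value of the logits, sigmoid(p) = 1 / (1 + exp(-p)), and then calculates the cross entropy with the labels:

loss = -y * log(sigmoid(p)) - (1 - y) * log(1 - sigmoid(p))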
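According to the TensorFlow API documentation, the function does not evaluate this formula literally; it uses an algebraically equivalent form that avoids overflow in exp() for large negative logits. A short derivation in the notation above (y = labels, p = logits, σ = sigmoid):

```latex
\begin{aligned}
\ell &= -y\log\sigma(p) - (1-y)\log\bigl(1-\sigma(p)\bigr),
       \qquad \sigma(p) = \frac{1}{1+e^{-p}} \\
     &= y\log\bigl(1+e^{-p}\bigr) + (1-y)\Bigl(p + \log\bigl(1+e^{-p}\bigr)\Bigr) \\
     &= p - p\,y + \log\bigl(1+e^{-p}\bigr) \\
     &= \max(p, 0) - p\,y + \log\bigl(1+e^{-|p|}\bigr)
\end{aligned}
```

The last line agrees with the first for every p, but it only ever exponentiates a non-positive number, so it stays finite for large |p|.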

Tips:

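If you want to calculate sigmoid cross entropy between labels and logits, remember that this function applies the sigmoid function to logits internally. Pass the raw, unscaled logits; do not apply tf.sigmoid() to them yourself, or the sigmoid will be applied twice.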
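To see why this tip matters, here is a minimal pure-Python sketch (no TensorFlow needed). The helper sigmoid_ce_with_logits is hypothetical; it assumes the numerically stable per-element formula given in the TensorFlow documentation, max(x, 0) - x * z + log(1 + exp(-|x|)), with x = logit and z = label. It compares passing the raw logit with passing a logit that was already put through the sigmoid function.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_ce_with_logits(label: float, logit: float) -> float:
    # Hypothetical helper mirroring the documented stable formula:
    # max(x, 0) - x * z + log(1 + exp(-|x|)), x = logit, z = label
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


label, logit = 1.0, 1.0
correct = sigmoid_ce_with_logits(label, logit)         # raw logit, as intended
wrong = sigmoid_ce_with_logits(label, sigmoid(logit))  # sigmoid applied twice

print(f"raw logit:      {correct:.7f}")
print(f"double sigmoid: {wrong:.7f}")
```

With the raw logit the loss equals log(1 + e^-1); squashing the logit first silently changes the value, and TensorFlow raises no error in that case.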

Here is an example showing how to use this function.

Create labels and logits

import tensorflow as tf
import numpy as np

logits = tf.Variable(np.array([[1, 2, 3], [4, 5, 6]]), dtype=tf.float32)
labels = tf.Variable(np.array([[1, 0, 0], [0, 1, 0]]), dtype=tf.float32)

This code creates two tensors, each with shape 2 * 3.

Compute the sigmoid cross entropy

# TensorFlow 1.x graph-mode API (tf.Session)
sigmoid_loss = tf.nn.sigmoid_cross_entropy_with_logits(labels=labels, logits=logits)
loss = tf.reduce_mean(sigmoid_loss)

init = tf.global_variables_initializer()
init_local = tf.local_variables_initializer()

with tf.Session() as sess:
    sess.run([init, init_local])
    print(sess.run([sigmoid_loss]))  # element-wise loss, shape 2 * 3
    print(sess.run([loss]))          # mean of all elements

The result is:

[array([[0.31326172, 2.126928  , 3.0485873 ],
       [4.01815   , 0.00671535, 6.0024757 ]], dtype=float32)]

[2.5860198]

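The result shows that the function returns an element-wise loss with the same shape as logits (2 * 3), not a single value, so we need to average it with tf.reduce_mean() to get the final loss.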
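As a cross-check, the following pure-Python sketch (an illustration, not TensorFlow's implementation) recomputes this example with the stable formula from the TensorFlow documentation and then takes the plain mean over all six elements, which is what tf.reduce_mean() does when no axis is given. The helper name sigmoid_ce_with_logits is hypothetical.

```python
import math


def sigmoid_ce_with_logits(label: float, logit: float) -> float:
    # Hypothetical helper: stable per-element sigmoid cross entropy,
    # max(x, 0) - x * z + log(1 + exp(-|x|)) with x = logit, z = label
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


logits = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
labels = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

# Element-wise loss with the same 2 * 3 shape as logits
elementwise = [
    [sigmoid_ce_with_logits(z, x) for z, x in zip(z_row, x_row)]
    for z_row, x_row in zip(labels, logits)
]

# Plain mean over all elements, like tf.reduce_mean() with no axis argument
flat = [v for row in elementwise for v in row]
mean_loss = sum(flat) / len(flat)

print(elementwise)
print(mean_loss)
```

The printed values agree with the TensorFlow output above up to float32 rounding.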

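Meanwhile, if you plan to calculate the sigmoid cross entropy between two distributions given as logits, logits_1 and logits_2, you should apply the sigmoid function to one of them, because labels are expected to be values between 0 and 1. Here is an example: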

logits_1 = tf.Variable(np.array([[1, 2, 3], [4, 5, 6]]), dtype=tf.float32)
logits_2 = tf.Variable(np.array([[-1, 2, 0], [3, 1, -4]]), dtype=tf.float32)
logits_2 = tf.sigmoid(logits_2)  # map to (0, 1) so it can be used as labels

Here we apply the sigmoid function to logits_2, so its values lie in (0, 1) and it can be used as labels.

The sigmoid cross entropy between logits_1 and logits_2 is:

sigmoid_loss = tf.nn.sigmoid_cross_entropy_with_logits(labels=logits_2, logits=logits_1)
loss = tf.reduce_mean(sigmoid_loss)

The result is:

[array([[1.0443203 , 0.36533394, 1.5485873 ],
       [0.20785339, 1.3514223 , 5.8945584 ]], dtype=float32)]

[1.7353458]
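The same pure-Python cross-check works with soft labels. In this sketch the helper names are hypothetical and the per-element formula is the stable form from the TensorFlow documentation; logits_2 is turned into labels with the sigmoid function, and the element-wise loss is averaged.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_ce_with_logits(label: float, logit: float) -> float:
    # Hypothetical helper: max(x, 0) - x * z + log(1 + exp(-|x|))
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


logits_1 = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
logits_2 = [[-1.0, 2.0, 0.0], [3.0, 1.0, -4.0]]

# Soft labels: sigmoid maps every entry of logits_2 into (0, 1)
labels = [[sigmoid(v) for v in row] for row in logits_2]

elementwise = [
    [sigmoid_ce_with_logits(z, x) for z, x in zip(z_row, x_row)]
    for z_row, x_row in zip(labels, logits_1)
]
flat = [v for row in elementwise for v in row]
mean_loss = sum(flat) / len(flat)
print(mean_loss)

# With a soft label z = 0.5 the smallest possible loss is log(2), reached at logit 0
floor = sigmoid_ce_with_logits(0.5, 0.0)
print(floor)
```

Unlike the hard-label case, these losses can never reach 0: for a soft label z strictly between 0 and 1, the loss is smallest when the logit equals log(z / (1 - z)), and that minimum is the binary entropy of z. The last two lines check this for z = 0.5.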

Moreover, if some elements in logits are invalid (for example, padding), you may need to use a mask to exclude them from the loss. To learn how to calculate masked sigmoid cross-entropy in TensorFlow, you can read this tutorial:

Implement Sigmoid Cross-entropy Loss with Masking in TensorFlow – TensorFlow Tutorial
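For the masking case, one common approach (an assumption here; the linked tutorial may do it differently) is to zero out the invalid positions and divide by the number of valid ones, which in TensorFlow is typically written as tf.reduce_sum(sigmoid_loss * mask) / tf.reduce_sum(mask). The pure-Python sketch below shows only the arithmetic; mask is a hypothetical 0/1 matrix with the same shape as logits, and masked_mean_loss is a hypothetical helper.

```python
import math


def sigmoid_ce_with_logits(label: float, logit: float) -> float:
    # Hypothetical helper: max(x, 0) - x * z + log(1 + exp(-|x|))
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def masked_mean_loss(labels, logits, mask):
    """Average the element-wise loss over positions where mask == 1 only."""
    total, count = 0.0, 0.0
    for z_row, x_row, m_row in zip(labels, logits, mask):
        for z, x, m in zip(z_row, x_row, m_row):
            total += m * sigmoid_ce_with_logits(z, x)  # invalid entries add 0
            count += m                                 # count valid entries only
    return total / count if count > 0 else 0.0        # guard against an all-zero mask


logits = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
labels = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
mask = [[1, 1, 0], [1, 1, 0]]  # hypothetical: last column is padding

masked_loss = masked_mean_loss(labels, logits, mask)
print(masked_loss)
```

Dividing by the number of valid entries, rather than by the full tensor size, keeps padded positions from pulling the average toward 0.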