In this tutorial, we will use some examples to show you how to understand and use the torch.nn.Conv1d() function.

torch.nn.Conv1d()

It is defined as:

torch.nn.Conv1d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

It applies a 1D convolution over an input signal composed of several input channels.
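To make "1D convolution" concrete, here is a minimal sketch that recomputes the output of a small nn.Conv1d layer by hand. Like most deep learning libraries, PyTorch actually computes a cross-correlation: the kernel slides over the input without being flipped. The helper manual_conv1d is a hypothetical name, the sizes are arbitrary, and the sketch assumes stride=1, padding=0, dilation=1 and groups=1 to keep the loop simple.

```python
import torch

torch.manual_seed(0)

# A small layer: 2 input channels, 3 output channels, kernel of length 4.
conv = torch.nn.Conv1d(in_channels=2, out_channels=3, kernel_size=4)
x = torch.rand(1, 2, 10)  # (N, C_in, L_in)


def manual_conv1d(x, weight, bias):
    """Naive cross-correlation, assuming stride=1, padding=0, dilation=1, groups=1."""
    n, c_in, l_in = x.shape
    c_out, _, k = weight.shape
    l_out = l_in - k + 1
    out = torch.empty(n, c_out, l_out)
    for t in range(l_out):
        window = x[:, :, t:t + k]  # (N, C_in, k) slice under the kernel
        # Multiply and sum over input channels and kernel positions,
        # once per output channel, then add that channel's bias.
        out[:, :, t] = torch.einsum("nik,oik->no", window, weight) + bias
    return out


with torch.no_grad():
    expected = conv(x)
    manual = manual_conv1d(x, conv.weight, conv.bias)

print(expected.shape)                               # torch.Size([1, 3, 7])
print(torch.allclose(expected, manual, atol=1e-6))  # True
```

Each output value is a weighted sum over all input channels inside one window of length kernel_size, which is why the layer mixes channels while sliding along the length dimension only.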

Input and output

The input of torch.nn.Conv1d()

The input shape should be (N, Cin, Lin) or (Cin, Lin); the batched form (N, Cin, Lin) is the most commonly used.

Here:

N = batch size, for example 32 or 64

Cin = the number of input channels

Lin = the length of the signal sequence
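A quick way to see both accepted input layouts is to feed the same layer a batched and an unbatched tensor. This is a sketch with arbitrary sizes; it assumes a PyTorch version recent enough to accept unbatched (Cin, Lin) input, since older releases only accept the 3D form.

```python
import torch

conv = torch.nn.Conv1d(in_channels=4, out_channels=8, kernel_size=3)

batched = torch.rand(32, 4, 50)  # (N, C_in, L_in): 32 sequences, 4 channels, length 50
unbatched = torch.rand(4, 50)    # (C_in, L_in): one sequence without a batch dimension

print(conv(batched).shape)    # torch.Size([32, 8, 48])
print(conv(unbatched).shape)  # torch.Size([8, 48])
```

The channel dimension must come before the length dimension, so data stored as (N, Lin, Cin) needs to be transposed first, for example with x.transpose(1, 2).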

The output of torch.nn.Conv1d().

The output shape of torch.nn.Conv1d() is (N, Cout, Lout), or (Cout, Lout) for an unbatched input.

Note that:

Cout is set by the out_channels parameter of torch.nn.Conv1d(), so Cout == out_channels.
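One way to see why Cout always equals out_channels is to inspect the layer's learnable parameters: the weight stores one kernel per output channel, and the bias stores one value per output channel. The sizes below are arbitrary.

```python
import torch

conv = torch.nn.Conv1d(in_channels=40, out_channels=10, kernel_size=3)

# weight: (out_channels, in_channels // groups, kernel_size)
print(conv.weight.shape)  # torch.Size([10, 40, 3])
# bias: one learnable value per output channel
print(conv.bias.shape)    # torch.Size([10])

y = conv(torch.rand(2, 40, 100))
print(y.shape)                          # torch.Size([2, 10, 98])
print(y.shape[1] == conv.out_channels)  # True
```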

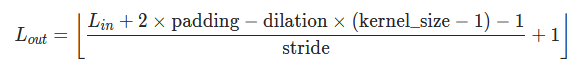

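Lout is computed from Lin, kernel_size, stride, padding and dilation: Lout = floor((Lin + 2 * padding - dilation * (kernel_size - 1) - 1) / stride + 1).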
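The output-length formula from the torch.nn.Conv1d documentation is easy to check by hand with a small helper. This is a plain-Python sketch: conv1d_output_length is a hypothetical name, and it assumes integer padding applied to both sides rather than the string options.

```python
def conv1d_output_length(l_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output length of a 1D convolution with integer padding on both sides."""
    # Number of input positions spanned by one (possibly dilated) kernel.
    effective_kernel = dilation * (kernel_size - 1) + 1
    # Floor division implements floor(... / stride); "+ 1" counts the first position.
    return (l_in + 2 * padding - effective_kernel) // stride + 1


# Values from the example later in this tutorial: L_in=100, kernel_size=3, stride=2, padding=3.
print(conv1d_output_length(100, kernel_size=3, stride=2, padding=3))  # 52
# Default settings: no padding, stride 1, dilation 1.
print(conv1d_output_length(100, kernel_size=3))  # 98
# dilation=2 makes a length-3 kernel span 5 positions.
print(conv1d_output_length(10, kernel_size=3, dilation=2))  # 6
```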

Important parameters

The important parameters of torch.nn.Conv1d() are:

- in_channels (int) – Number of channels in the input signal, equal to Cin

- out_channels (int) – Number of channels produced by the convolution, equal to Cout

- kernel_size (int or tuple) – Size of the convolving kernel

- stride (int or tuple, optional) – Stride of the convolution. Default: 1

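- padding (int, tuple or str, optional) – Padding added to both sides of the input. The strings ‘valid’ (no padding) and ‘same’ (output length equals input length; only supported with stride=1) are also accepted. Default: 0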

- padding_mode (string, optional) – ‘zeros’, ‘reflect’, ‘replicate’ or ‘circular’. Default: ‘zeros’

- dilation (int or tuple, optional) – Spacing between kernel elements. Default: 1

- groups (int, optional) – Number of blocked connections from input channels to output channels. Default: 1

- bias (bool, optional) – If True, adds a learnable bias to the output. Default: True
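The sketch below shows how several of these parameters change shapes. The sizes are arbitrary. It assumes a PyTorch version that accepts string padding; padding='same' only works with stride=1, and groups must divide both in_channels and out_channels.

```python
import torch

x = torch.rand(8, 16, 100)  # (N, C_in, L_in)

# dilation=2 spreads a length-5 kernel over 9 positions; padding='same' keeps L_out == L_in.
same = torch.nn.Conv1d(16, 32, kernel_size=5, dilation=2, padding="same")
print(same(x).shape)  # torch.Size([8, 32, 100])

# stride=2 roughly halves the length: (100 + 2*1 - 2 - 1) // 2 + 1 = 50
strided = torch.nn.Conv1d(16, 32, kernel_size=3, stride=2, padding=1)
print(strided(x).shape)  # torch.Size([8, 32, 50])

# groups=4 splits the 16 input channels into 4 groups of 4,
# so each kernel only sees in_channels // groups = 4 channels.
grouped = torch.nn.Conv1d(16, 32, kernel_size=3, groups=4)
print(grouped.weight.shape)  # torch.Size([32, 4, 3])
print(grouped(x).shape)      # torch.Size([8, 32, 98])

# padding_mode='circular' wraps the sequence around instead of padding with zeros;
# the output length is the same as with zero padding of the same size.
circular = torch.nn.Conv1d(16, 32, kernel_size=3, padding=1, padding_mode="circular")
print(circular(x).shape)  # torch.Size([8, 32, 100])

# bias=False removes the learnable bias entirely.
no_bias = torch.nn.Conv1d(16, 32, kernel_size=3, bias=False)
print(no_bias.bias)  # None
```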

How to use torch.nn.Conv1d()?

We will use an example to show you how to use it.

For example:

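import torch

N = 40      # batch size
C_in = 40   # number of input channels
L_in = 100  # length of each input sequence
inputs = torch.rand([N, C_in, L_in])

padding = 3
kernel_size = 3
stride = 2
C_out = 10  # number of output channels

# Build the 1D convolution layer and apply it to the inputs
conv = torch.nn.Conv1d(C_in, C_out, kernel_size, stride=stride, padding=padding)
y = conv(inputs)

print(y)
print(y.shape)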

Running this code prints something like the following (the inputs and weights are random, so your values will differ):

tensor([[[-0.0850, 0.3896, 0.7539, ..., 0.4054, 0.3753, 0.2802],
         [ 0.0181, -0.0184, -0.0605, ..., 0.0114, -0.0016, -0.0268],
         [-0.0570, -0.4591, -0.3195, ..., -0.2958, -0.1871, 0.0635],
         ...,
         [ 0.0554, 0.1234, -0.0150, ..., 0.0763, -0.3085, -0.2996],
         [-0.0516, 0.2781, 0.3457, ..., 0.2195, 0.1143, -0.0742],
         [ 0.0281, -0.0804, -0.3606, ..., -0.3509, -0.2694, -0.0084]]],
       grad_fn=<SqueezeBackward1>)

torch.Size([40, 10, 52])

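y is the output, and its shape is 40 * 10 * 52.

Here 40 is the batch size N, 10 is Cout (the out_channels value we passed to torch.nn.Conv1d()), and 52 is Lout, which is computed from Lin = 100, kernel_size = 3, stride = 2, padding = 3 and the default dilation of 1: floor((100 + 2*3 - 1*(3 - 1) - 1) / 2 + 1) = floor(52.5) = 52.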