In this tutorial, we will introduce how to use torch.nn.Conv2d() with some examples. This layer is widely used in convolutional neural networks (CNNs).

torch.nn.Conv2d()

It is defined as:

class torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

It applies a 2D convolution over an input signal composed of several input planes.
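To make "applies a 2D convolution" concrete, the sketch below recomputes one output element by hand. Each output value is the sum, over all input channels, of the kernel multiplied element-wise with a window of the input, plus the bias of that output channel (strictly speaking, PyTorch computes a cross-correlation, so the kernel is not flipped). The layer sizes and the chosen position (c, i, j) are arbitrary illustration values.

```python
import torch

torch.manual_seed(0)

# Arbitrary small sizes: 3 input channels, 2 output channels, 3x3 kernel, no padding
conv = torch.nn.Conv2d(in_channels=3, out_channels=2, kernel_size=3)
x = torch.rand(1, 3, 5, 5)  # (N, C_in, H, W)

with torch.no_grad():
    y = conv(x)  # (N, C_out, H_out, W_out) = (1, 2, 3, 3)

    # Recompute y[0, c, i, j] by hand: multiply the 3x3 window of every
    # input channel with output channel c's kernel, sum, then add its bias
    c, i, j = 1, 0, 2
    window = x[0, :, i:i + 3, j:j + 3]  # shape (C_in, 3, 3)
    manual = (window * conv.weight[c]).sum() + conv.bias[c]

print(y.shape)  # torch.Size([1, 2, 3, 3])
print(torch.allclose(y[0, c, i, j], manual, atol=1e-5))  # True
```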

Note the input and output shapes:

The input of torch.nn.Conv2d() has shape (N, C_in, H, W), where N is the batch size and C_in must equal in_channels.

Its output has shape (N, C_out, H_out, W_out), where C_out = out_channels.

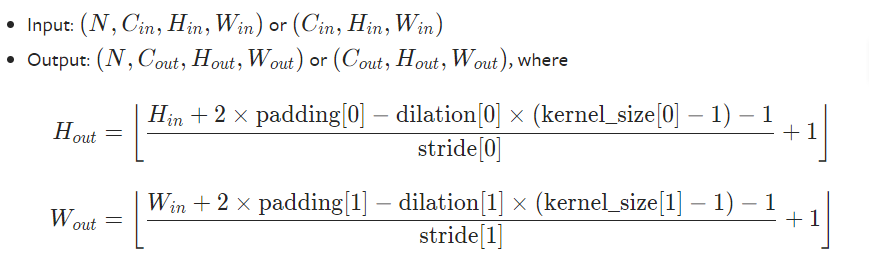
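A quick way to check these shapes is to pass a random batch through a layer. The sizes here (8 images, 3 channels, 28×28, 16 output channels) are hypothetical; kernel_size=3 with padding=1 is chosen so that H and W stay unchanged.

```python
import torch

N, C_in, H, W = 8, 3, 28, 28   # hypothetical batch: 8 RGB images of 28x28
C_out = 16

conv = torch.nn.Conv2d(in_channels=C_in, out_channels=C_out, kernel_size=3, padding=1)
x = torch.rand(N, C_in, H, W)  # input must be (N, C_in, H, W)
y = conv(x)

print(y.shape)            # torch.Size([8, 16, 28, 28])
print(conv.weight.shape)  # torch.Size([16, 3, 3, 3]) = (C_out, C_in, kH, kW)

# The channel dimension of the input must match in_channels,
# otherwise PyTorch raises a RuntimeError
try:
    conv(torch.rand(N, 4, H, W))
except RuntimeError as err:
    print("RuntimeError:", err)
```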

What about H_out and W_out?

They are determined by the input height and width together with kernel_size, stride, padding and dilation.

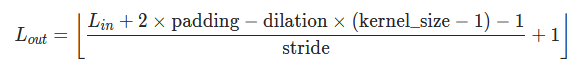
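For reference, the PyTorch documentation for Conv2d gives the output size as follows, where index [0] refers to the height dimension and [1] to the width dimension (an int argument applies to both):

$$
H_{out} = \left\lfloor \frac{H_{in} + 2 \times \text{padding}[0] - \text{dilation}[0] \times (\text{kernel\_size}[0] - 1) - 1}{\text{stride}[0]} + 1 \right\rfloor
$$

$$
W_{out} = \left\lfloor \frac{W_{in} + 2 \times \text{padding}[1] - \text{dilation}[1] \times (\text{kernel\_size}[1] - 1) - 1}{\text{stride}[1]} + 1 \right\rfloor
$$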

This is similar to torch.nn.Conv1d(), where the output length L_out is computed in the same way along a single dimension. You can learn more in:

Understand torch.nn.Conv1d() with Examples – PyTorch Tutorial

Parameters in torch.nn.Conv2d()

The important parameters are:

- in_channels (int) – Number of channels in the input image, in_channels = C_in

- out_channels (int) – Number of channels produced by the convolution, out_channels = C_out

- kernel_size (int or tuple) – Size of the convolving kernel. If kernel_size is an int k, the same value is used for both height and width, i.e. (k, k)

- stride (int or tuple, optional) – Stride of the convolution. Default: 1. As with kernel_size, an int is used for both height and width.

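- padding (int, tuple or str, optional) – Padding added to all four sides of the input. It can also be the string ‘valid’ (no padding) or ‘same’ (the output keeps the input's height and width; only supported when stride = 1). Default: 0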

- padding_mode (string, optional) – ‘zeros’, ‘reflect’, ‘replicate’ or ‘circular’. Default: ‘zeros’

- dilation (int or tuple, optional) – Spacing between kernel elements. Default: 1

- groups (int, optional) – Number of blocked connections from input channels to output channels. Default: 1

- bias (bool, optional) – If True, adds a learnable bias to the output. Default: True
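The sketch below tries several of these parameters on a random input. The channel counts and sizes are arbitrary, the expected shapes follow from the H_out/W_out formula, and string values for padding assume PyTorch 1.9 or newer.

```python
import torch

x = torch.rand(2, 4, 32, 32)  # (N, C_in, H, W) with C_in = 4

# kernel_size, stride and padding accept (height, width) tuples;
# an int such as kernel_size=3 is the same as (3, 3)
conv_a = torch.nn.Conv2d(4, 8, kernel_size=(3, 5), stride=(1, 2), padding=(1, 2))
print(conv_a(x).shape)  # torch.Size([2, 8, 32, 16])

# groups=2 splits the 4 input channels into 2 groups of 2,
# so each filter only sees C_in / groups = 2 channels; bias=False drops the bias
conv_b = torch.nn.Conv2d(4, 8, kernel_size=3, groups=2, bias=False)
print(conv_b.weight.shape)  # torch.Size([8, 2, 3, 3])
print(conv_b.bias)          # None

# padding="same" keeps H and W unchanged (stride must be 1), even with dilation=2;
# padding_mode="reflect" pads with mirrored input values instead of zeros
conv_c = torch.nn.Conv2d(4, 8, kernel_size=3, dilation=2, padding="same", padding_mode="reflect")
print(conv_c(x).shape)  # torch.Size([2, 8, 32, 32])
```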

Here is an example showing how to use it.

```python
import torch

N = 40      # batch size
C_in = 40   # number of input channels
H_in = 32   # input height
W_in = 32   # input width
inputs = torch.rand([N, C_in, H_in, W_in])

padding = 3
kernel_size = 3
stride = 2
C_out = 100  # number of output channels

conv = torch.nn.Conv2d(C_in, C_out, kernel_size, stride=stride, padding=padding)
y = conv(inputs)
print(y)
print(y.shape)
```

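Running this code prints something like the following (the input and the layer weights are random, so your values will differ):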

```
tensor([[[[-1.1969e-02, -1.1969e-02, -1.1969e-02,  ..., -1.1969e-02,
           -1.1969e-02, -1.1969e-02],
          [-1.1969e-02, -1.3632e-01, -1.5025e-01,  ..., -2.5144e-01,
           -4.2856e-01, -1.6037e-01],
          [-1.1969e-02, -2.6628e-02, -1.9788e-01,  ..., -1.6797e-01,
           -1.1844e-01, -1.4622e-01],
          ...,
          [ 9.3627e-03, -8.4089e-02, -2.2033e-01,  ..., -2.7749e-01,
           -1.0250e-01,  6.0844e-02],
          [ 9.3627e-03,  1.0846e-01, -1.6361e-01,  ..., -3.2682e-01,
           -2.9164e-01,  9.7849e-02],
          [ 9.3627e-03, -2.3735e-01, -1.9463e-01,  ..., -2.4621e-01,
           -1.7032e-01,  5.3444e-02]]]], grad_fn=<MkldnnConvolutionBackward>)
torch.Size([40, 100, 18, 18])
```

Because N = 40 and C_out = 100 in this example, we get a tensor of shape (40, 100, 18, 18).

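H_out and W_out are computed from the input size, kernel_size, stride, padding and dilation. Here H_out = floor((32 + 2*3 - 1*(3 - 1) - 1) / 2 + 1) = floor(18.5) = 18, and W_out = 18 as well because the input is square.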
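To check output sizes before building a model, the formula can be wrapped in a small function. conv2d_out_size is a hypothetical helper written for this tutorial, not part of PyTorch; the sketch applies it to the values of the example (H_in = W_in = 32, kernel_size = 3, stride = 2, padding = 3) and cross-checks the result against a real layer, using a batch of 1 to keep it light.

```python
import math
import torch

def conv2d_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Hypothetical helper: output length along one spatial dimension,
    following the H_out / W_out formula in the PyTorch Conv2d docs."""
    return math.floor((size + 2 * padding - dilation * (kernel_size - 1) - 1) / stride + 1)

# Values from the example: H_in = W_in = 32, kernel_size = 3, stride = 2, padding = 3
h_out = conv2d_out_size(32, kernel_size=3, stride=2, padding=3)
print(h_out)  # 18

# Cross-check against the real layer
conv = torch.nn.Conv2d(40, 100, kernel_size=3, stride=2, padding=3)
y = conv(torch.rand(1, 40, 32, 32))
print(y.shape)  # torch.Size([1, 100, 18, 18])
```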