Xavier initialization comes in two variants: uniform and normal. In PyTorch, they are:

- uniform: torch.nn.init.xavier_uniform_()

- normal: torch.nn.init.xavier_normal_()

They initialize PyTorch tensors in different ways: the first samples from a uniform distribution, the second from a normal distribution.

Note: if you are using TensorFlow, you can also read:

Initialize TensorFlow Weights Using Xavier Initialization : A Beginner Guide – TensorFlow Tutorial

torch.nn.init.xavier_uniform_()

It is defined as:

torch.nn.init.xavier_uniform_(tensor, gain=1.0)

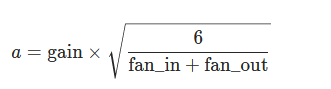

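This function fills the tensor in place with values sampled from \(U(-a,a)\), where

\(a = gain \times \sqrt{\frac{6}{fan\_in + fan\_out}}\)

gain is an optional scaling factor that can be computed with torch.nn.init.calculate_gain().

fan_in and fan_out are the input and output dimensions of the tensor.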
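As a quick sanity check, the bound can be recomputed by hand and compared with the sampled values. The sketch below assumes a 2-D tensor of shape (50, 100), for which PyTorch takes fan_in = 100 (columns) and fan_out = 50 (rows). The variable names and the choice of the "tanh" gain are illustrative only.

```python
import math
import torch

torch.manual_seed(0)  # only to make the run repeatable

# For a 2-D tensor, the shape is (fan_out, fan_in).
fan_out, fan_in = 50, 100
w = torch.empty(fan_out, fan_in)

# calculate_gain("tanh") returns 5/3; "linear" would return 1.
gain = torch.nn.init.calculate_gain("tanh")
torch.nn.init.xavier_uniform_(w, gain=gain)

# Bound of the uniform distribution: a = gain * sqrt(6 / (fan_in + fan_out))
a = gain * math.sqrt(6.0 / (fan_in + fan_out))

print(a)                               # about 0.3333
print(w.min().item(), w.max().item())  # both lie inside (-a, a)
```

Every sampled value should fall inside (-a, a), so the printed minimum and maximum give a simple way to confirm which gain was actually applied.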

torch.nn.init.xavier_normal_()

This function is defined as:

torch.nn.init.xavier_normal_(tensor, gain=1.0)

Similar to torch.nn.init.xavier_uniform_(), this function fills the tensor in place with values sampled from \(N(0,std^2)\), where

\(std = gain \times \sqrt{\frac{2}{fan\_in + fan\_out}}\)
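The standard deviation can be checked empirically in the same way. This is a minimal sketch that assumes a fairly large 2-D tensor (400 x 600, chosen only so the sample statistics are stable) and the default gain of 1.0.

```python
import math
import torch

torch.manual_seed(0)  # only to make the run repeatable

fan_out, fan_in = 400, 600
w = torch.empty(fan_out, fan_in)
torch.nn.init.xavier_normal_(w, gain=1.0)

# std = gain * sqrt(2 / (fan_in + fan_out))
expected_std = 1.0 * math.sqrt(2.0 / (fan_in + fan_out))

print(expected_std)     # about 0.0447
print(w.std().item())   # close to expected_std, not exactly equal
print(w.mean().item())  # close to 0
```

Because the values are random samples, the measured standard deviation only approximates the formula; the match gets tighter as the tensor grows.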

How to use torch.nn.init.xavier_uniform_() and torch.nn.init.xavier_normal_()?

Both functions are easy to use. Here is an example:

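    import torch

    in_dim = 100
    out_dim = 50

    # nn.Linear stores its weight with shape (out_dim, in_dim)
    linear_layer = torch.nn.Linear(in_dim, out_dim, bias=True)
    print(linear_layer.weight)  # weight after PyTorch's default initialization

    # Overwrite the weight in place; calculate_gain("linear") returns 1
    torch.nn.init.xavier_uniform_(
        linear_layer.weight,
        gain=torch.nn.init.calculate_gain("linear"))
    print(linear_layer.weight)  # weight after Xavier uniform initialization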

Running this code prints the weight before and after the call:

    Parameter containing:
    tensor([[-0.0030, -0.0031, 0.0433, ..., -0.0645, 0.0269, 0.0951],
            [-0.0520, 0.0277, -0.0528, ..., -0.0348, 0.0114, -0.0755],
            [ 0.0827, 0.0281, -0.0810, ..., -0.0709, 0.0492, -0.0836],
            ...,
            [-0.0325, -0.0521, -0.0286, ..., 0.0039, 0.0762, 0.0317],
            [ 0.0968, 0.0857, -0.0362, ..., 0.0049, 0.0345, 0.0693],
            [-0.0134, 0.0613, -0.0942, ..., 0.0269, 0.0531, 0.0927]],
           requires_grad=True)

    Parameter containing:
    tensor([[-0.0781, 0.0376, 0.0442, ..., 0.1334, 0.1347, 0.0445],
            [ 0.0806, -0.0572, -0.0770, ..., -0.1106, -0.0826, -0.1175],
            [-0.0429, 0.0161, 0.0430, ..., -0.1173, 0.1779, 0.0881],
            ...,
            [ 0.1457, 0.1077, -0.0646, ..., -0.1134, 0.1504, 0.0044],
            [ 0.0993, 0.0520, 0.0589, ..., 0.1021, 0.0407, -0.1307],
            [-0.1907, 0.0018, 0.0224, ..., 0.1684, 0.0456, 0.1235]],
           requires_grad=True)

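The second printout shows that torch.nn.init.xavier_uniform_() has overwritten the weight of torch.nn.Linear in place. With a gain of 1, an input dimension of 100 and an output dimension of 50, the bound is \(\sqrt{6/150} = 0.2\), and the new values all fall inside (-0.2, 0.2).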
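The example above uses only torch.nn.init.xavier_uniform_() on a single layer. As a rough sketch of how torch.nn.init.xavier_normal_() might be applied to every linear layer of a small model, the code below uses torch.nn.Module.apply() with a helper function. The helper name init_weights, the layer sizes, and the choice to zero the biases are assumptions made for illustration, not part of the original example.

```python
import torch
from torch import nn

torch.manual_seed(0)  # only to make the run repeatable

def init_weights(module):
    # Hypothetical helper: re-initialize nn.Linear layers only.
    if isinstance(module, nn.Linear):
        gain = nn.init.calculate_gain("tanh")
        nn.init.xavier_normal_(module.weight, gain=gain)
        if module.bias is not None:
            nn.init.zeros_(module.bias)  # assumption: start biases at zero

model = nn.Sequential(
    nn.Linear(100, 50),
    nn.Tanh(),
    nn.Linear(50, 10),
)

# apply() calls init_weights on every submodule, then on the model itself.
model.apply(init_weights)

print(model[0].weight.std().item())      # near (5/3) * sqrt(2/150), about 0.19
print(model[0].bias.abs().sum().item())  # 0.0
```

Checking isinstance inside the helper keeps layers without weights, such as nn.Tanh, untouched.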