In this tutorial, we will use some examples to show how to use torch.optim.lr_scheduler.CosineAnnealingLR() in PyTorch.

torch.optim.lr_scheduler.CosineAnnealingLR()

It is defined as:

torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min=0, last_epoch=-1, verbose=False)

It will set the learning rate of each parameter group using a cosine annealing schedule.

Parameters

- optimizer (Optimizer) – Wrapped optimizer.

- T_max (int) – Maximum number of iterations, counted in calls to scheduler.step(). The learning rate reaches eta_min after T_max steps.

- eta_min (float) – Minimum learning rate. Default: 0.

- last_epoch (int) – The index of last epoch. Default: -1.

- verbose (bool) – If True, prints a message to stdout for each update. Default: False.

Note that this scheduler lets us set a minimum learning rate through eta_min.
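To make the roles of T_max and eta_min concrete, here is a minimal sketch of the closed-form cosine annealing curve in plain Python. The function name cosine_annealing_lr and the eta_min value of 0.0001 are chosen only for illustration; the sketch mirrors the formula given in the PyTorch documentation and is not the library's implementation.

```python
import math

def cosine_annealing_lr(step, base_lr, T_max, eta_min=0.0):
    """Closed-form cosine annealing: learning rate after `step` scheduler steps."""
    cosine = math.cos(math.pi * step / T_max)
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + cosine)

# Illustrative values (assumptions, not taken from a real training run)
base_lr, T_max, eta_min = 0.01, 50, 0.0001
for step in (0, 25, 50):
    print(step, cosine_annealing_lr(step, base_lr, T_max, eta_min))
```

The learning rate starts at base_lr, passes the midpoint between base_lr and eta_min at step T_max / 2, and reaches eta_min at step T_max.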

How to use torch.optim.lr_scheduler.CosineAnnealingLR()?

Here is an example that shows how to use it.

import torch

from matplotlib import pyplot as plt

lr_list = []

model = [torch.nn.Parameter(torch.randn(2, 2, requires_grad=True))]

LR = 0.01

optimizer = torch.optim.Adam(model,lr = LR)

scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max = 50)

for epoch in range(200):

    data_size = 40

    for i in range(data_size):

        optimizer.zero_grad()

        optimizer.step()

    scheduler.step()

    lr_list.append(optimizer.state_dict()['param_groups'][0]['lr'])

plt.plot(range(200),lr_list,color = 'r')

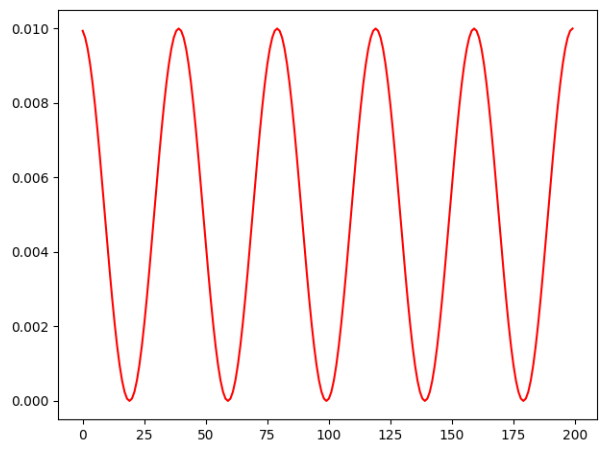

plt.show()In this example, we will train our model with 200 epoch. Each epoch contains 40 iterations.

We set the initialized learning rate is 0.1 and the T_max = 50.

Run this code, we will see:

When T_max = 20

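# Reuse model and LR from the first example, but start from a fresh optimizer and an empty list

lr_list = []

optimizer = torch.optim.Adam(model,lr = LR)

scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max = 20)

for epoch in range(200):

    data_size = 40

    for i in range(data_size):

        optimizer.step()

    scheduler.step()

    lr_list.append(optimizer.state_dict()['param_groups'][0]['lr'])

plt.plot(range(200),lr_list,color = 'r')

plt.show()

We will see that the learning rate now completes a full cosine cycle every 40 epochs (2 × T_max), so the plot contains five cycles over 200 epochs.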

When T_max = 10

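# Reuse model and LR from the first example, but start from a fresh optimizer and an empty list

lr_list = []

optimizer = torch.optim.Adam(model,lr = LR)

scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max = 10)

for epoch in range(200):

    data_size = 40

    for i in range(data_size):

        optimizer.step()

    scheduler.step()

    lr_list.append(optimizer.state_dict()['param_groups'][0]['lr'])

plt.plot(range(200),lr_list,color = 'r')

plt.show()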
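The three runs above differ only in T_max, so they can be compared with one small helper. The sketch below is one possible way to do this; record_schedule is a hypothetical helper name, and it assumes the same Adam optimizer, 2×2 dummy parameter, and initial learning rate of 0.01 used in the examples above.

```python
import torch

def record_schedule(T_max, epochs=200, base_lr=0.01):
    """Return the learning rate in effect during each epoch for a given T_max."""
    params = [torch.nn.Parameter(torch.randn(2, 2))]
    optimizer = torch.optim.Adam(params, lr=base_lr)
    scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=T_max)
    lrs = []
    for _ in range(epochs):
        lrs.append(optimizer.param_groups[0]["lr"])  # lr used in this epoch
        optimizer.step()   # stands in for one epoch of real training
        scheduler.step()   # one scheduler step per epoch
    return lrs

schedules = {T_max: record_schedule(T_max) for T_max in (50, 20, 10)}
for T_max, lrs in schedules.items():
    # lr at the start, at T_max (the minimum), and at 2 * T_max (back at the start value)
    print(T_max, lrs[0], lrs[T_max], lrs[2 * T_max])
```

For each T_max, the learning rate falls to eta_min (0 by default) after T_max epochs and climbs back to the initial value after 2 × T_max epochs, so a smaller T_max produces more cycles within the same 200 epochs.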

As an example, one paper describes its training setup as follows:

The parameters of the embedding extractors were updated via the Ranger optimizer with a cosine annealing learning rate scheduler. The minimum learning rate was set to \(10^{-5}\) with a scheduler’s period equal to 100K iterations and the initial learning rate was equal to \(10^{-3}\).

This corresponds to:

- LR = 0.001

- eta_min = 0.00001

- T_max = 100000, with scheduler.step() called once per iteration
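These settings could be wired up roughly as follows. This is only a sketch under stated assumptions: Adam stands in for the Ranger optimizer (which is not part of torch.optim), the 2×2 parameter is a dummy model, and the loop is shortened to 1,000 iterations instead of a full training run.

```python
import torch

params = [torch.nn.Parameter(torch.randn(2, 2))]

# Assumption: Adam is used here as a stand-in for the Ranger optimizer.
optimizer = torch.optim.Adam(params, lr=1e-3)
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(
    optimizer, T_max=100_000, eta_min=1e-5
)

num_iterations = 1_000  # shortened run; the paper's period is 100K iterations
for iteration in range(num_iterations):
    optimizer.zero_grad()
    # the forward pass and loss.backward() would go here in real training
    optimizer.step()
    scheduler.step()  # stepped per iteration because T_max counts iterations

print(scheduler.get_last_lr()[0])
```

The key difference from the earlier examples is that scheduler.step() sits inside the iteration loop, so T_max is measured in iterations rather than epochs.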